commit

bd97ece857

60 changed files with 4289 additions and 0 deletions

+ 297

- 0

README.md

| @@ -0,0 +1,297 @@ | |||

| # WES-germline Small Variants Quality Control Pipeline (Start from FASTQ files) | |||

| > Author: Run Luyao | |||

| > | |||

| > E-mail: 18110700050@fudan.edu.cn | |||

| > | |||

| > Git: http://choppy.3steps.cn/renluyao/quartet-wes-germline-data-generation-qc.git | |||

| > | |||

| > Last Updated: 2021/04/14 | |||

| ## Installation | |||

| ``` | |||

| # Activate the choppy environment | |||

| open-choppy-env | |||

| # Install the app | |||

| choppy install renluyao/quartet-wes-germline-data-generation-qc | |||

| ``` | |||

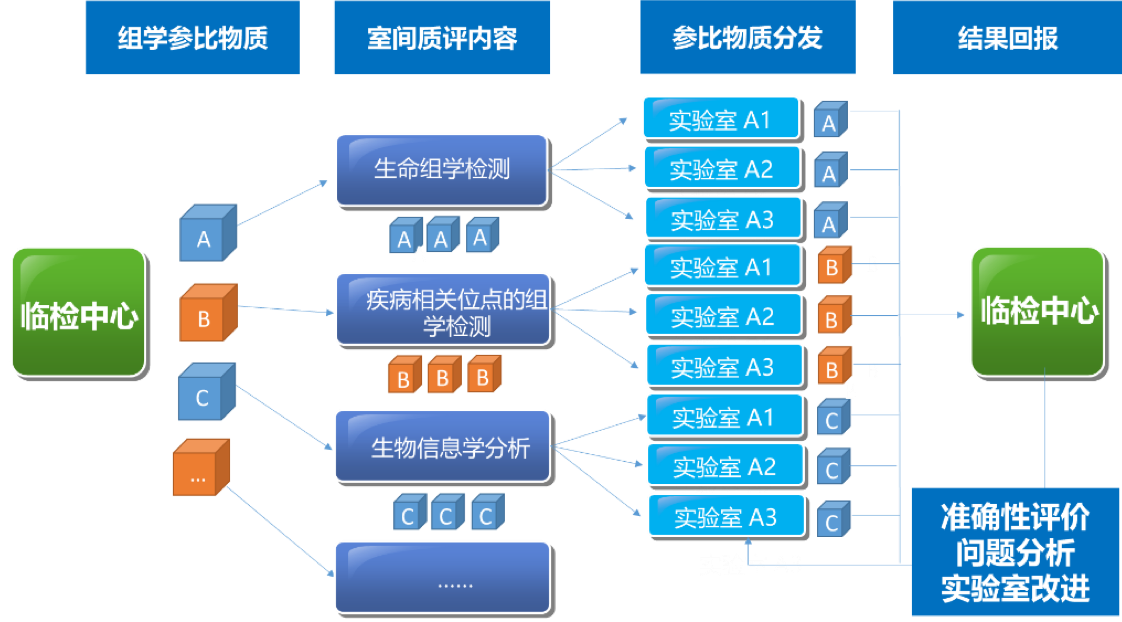

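| ## App Overview: Introduction to the Chinese Quartet Reference Materials | |||

| Establishing key technologies for biometrology and quality control of high-throughput whole-genome sequencing is essential for making sequencing data comparable across platforms and laboratories, research results reproducible, and data shareable. Establishing national genomic reference materials and benchmark datasets, and advancing biometrology techniques for genomics, is a necessary step in translating sequencing technology into clinical applications, and an area that is currently unfilled internationally. The National Institute of Metrology of China, Fudan University, and the Taizhou Institute of Health Sciences of Fudan University jointly developed the human Chinese Quartet genomic reference materials (**Quartet: a set of four samples, LCL5, LCL6, LCL7 and LCL8, where LCL5 and LCL6 are monozygotic twin daughters, LCL7 is the father and LCL8 is the mother**) and the corresponding whole-genome sequencing benchmark datasets ("reference values"). These provide a "ruler" for measuring the accuracy of gene sequence detection and serve as a national benchmark for ensuring the reliability of sequencing data. The reference materials were derived from a family with monozygotic twins in the Taizhou cohort. Their genetic structure reflects the population structure at the boundary between northern and southern China, and the family design provides a genetic basis for determining the reference values. | |||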

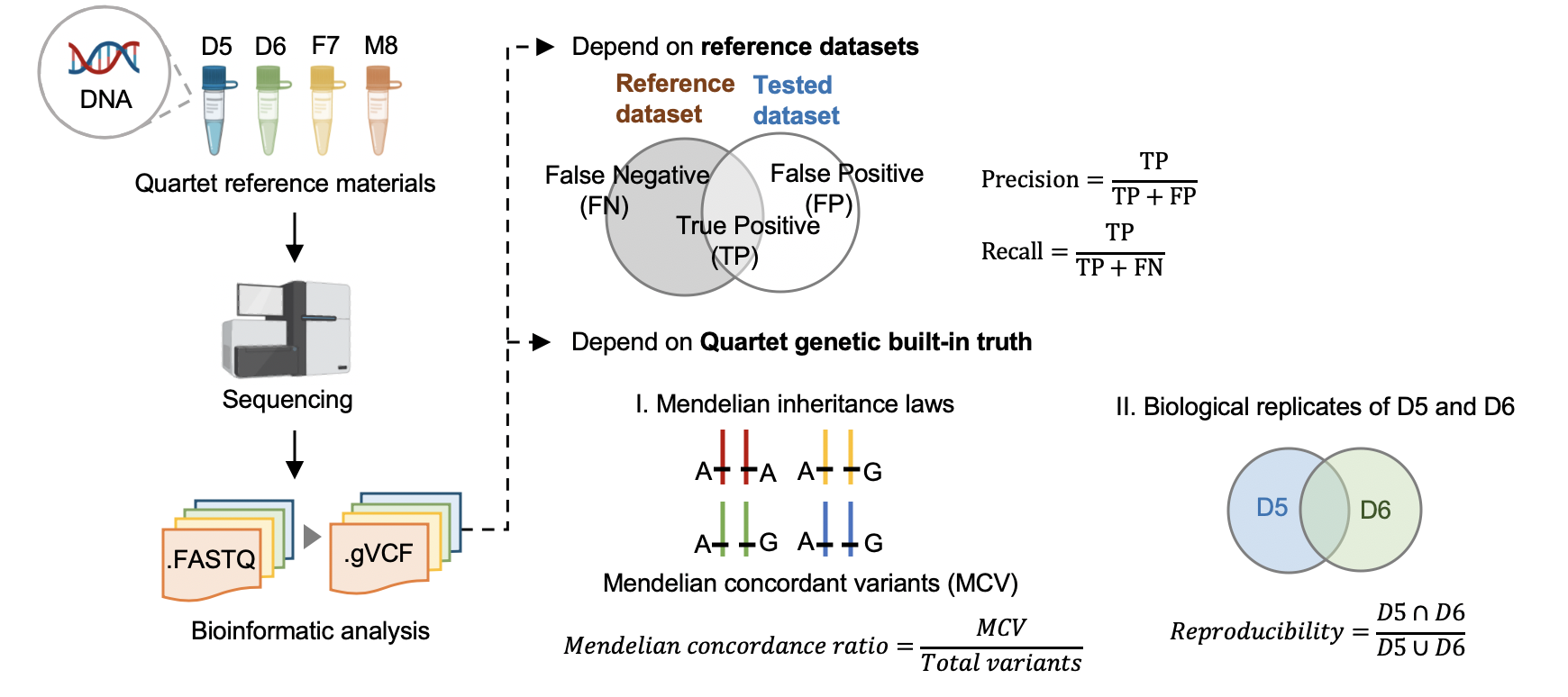

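| The Small Variants reference values of the Chinese Quartet DNA reference materials comprise high-confidence single-nucleotide variants (SNVs), high-confidence short insertions and deletions (InDels), and high-confidence reference genome regions. The reference materials can be used to evaluate the performance of genome sequencing, including whole-genome sequencing, whole-exome sequencing, and targeted sequencing such as gene-capture sequencing. They can also be used to assess the true-positive, false-positive, true-negative and false-negative levels of SNV and InDel calls made during sequencing and data analysis, providing reference materials and reference data for quality control and performance validation of sequencing platforms, laboratories and related products. In addition, based on the genetic relationships within the Quartet, we can compute the concordance of variants called in the monozygotic twins and the proportion of variants consistent with the inheritance rules of the four-member family, use these to estimate the proportion of sequencing errors, and so assess the quality of data generation and analysis. | |||

| From May to July 2021, under the leadership of the National Center for Clinical Laboratories (NCCL), a pilot inter-laboratory quality assessment of whole-exome sequencing was organized for clinical and research laboratories nationwide: https://www.nccl.org.cn/showEqaPtDetail?id=1514 | |||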

|  | |||

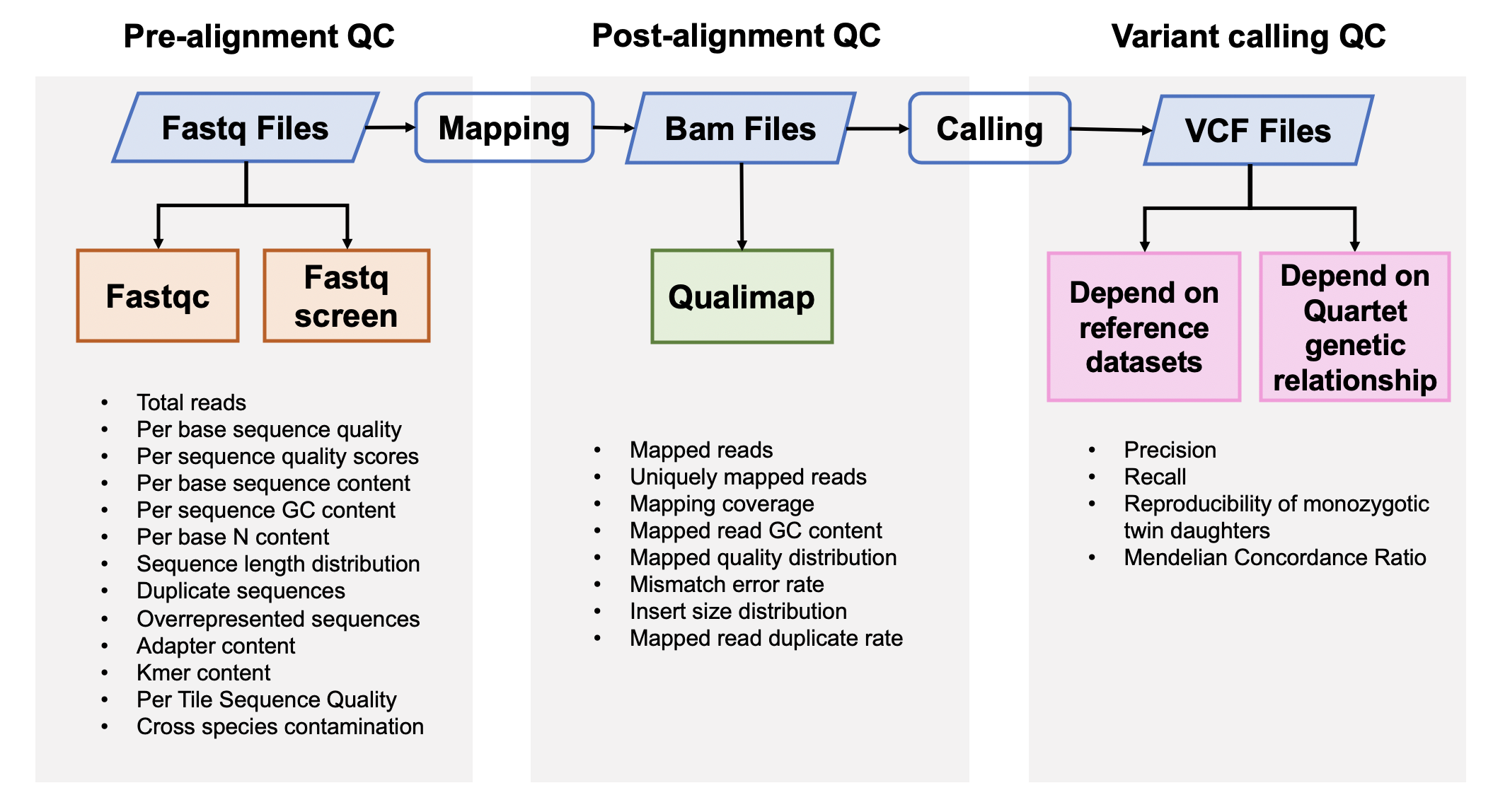

| This Quality_control app assesses the quality of whole-exome sequencing (WES) data, covering raw-data QC, alignment QC and variant-calling QC. | |||

| ## Workflow and Parameters | |||

|  | |||

|  | |||

| ### 1. Raw data quality control | |||

| #### [Fastqc](<https://www.bioinformatics.babraham.ac.uk/projects/fastqc/>) v0.11.5 | |||

| FastQC is a widely used quality-control tool for raw sequencing data. It consists of 12 main modules; see the [FastQC module details](<https://www.bioinformatics.babraham.ac.uk/projects/fastqc/Help/3%20Analysis%20Modules/>). | |||

| ```bash | |||

| fastqc -t <threads> -o <output_directory> <fastq_file> | |||

| ``` | |||

| #### [Fastq Screen](<https://www.bioinformatics.babraham.ac.uk/projects/fastq_screen/>) 0.12.0 | |||

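| Fastq Screen checks whether raw sequencing data are contaminated with other species, adapters or primers. For example, for a human sample we expect more than 99% of reads to map to the human genome and about 10% of reads to map to the mouse genome, which is highly homologous to the human genome. If too many reads map to E. coli or yeast, consider whether the cell line was contaminated during cell culture or the library was contaminated during library preparation. | |||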

| ```bash | |||

| fastq_screen --aligner <aligner> --conf <config_file> --top <number_of_reads> --threads <threads> <fastq_file> | |||

| ``` | |||

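| `--conf`: the config file mainly lists the paths to the FASTA files of multiple species; FASTA files of other species can be downloaded and added to the analysis as needed | |||

| `--top`: the whole FASTQ file usually does not need to be searched; the first 100000 reads are used | |||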
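
The expectation described above (more than 99% of reads from a human sample hitting the human genome, few hits to E. coli or yeast) can be turned into a simple check. This is a minimal sketch, not part of the pipeline: the function name, the dictionary layout and the 1% contaminant cut-off are assumptions made for illustration.

```python
# Hypothetical thresholds; tune them for your own lab and species panel.
MIN_HUMAN_PCT = 99.0        # expectation stated in the README text
MAX_CONTAMINANT_PCT = 1.0   # assumed cut-off, not taken from the pipeline


def screen_warnings(percentages):
    """Return a list of warning strings for one sample.

    `percentages` maps a genome name (e.g. "Human", "EColi", "Yeast")
    to the percentage of reads that hit that genome.
    """
    warnings = []
    human = percentages.get("Human", 0.0)
    if human < MIN_HUMAN_PCT:
        warnings.append(
            f"Human mapping rate {human:.2f}% is below {MIN_HUMAN_PCT}%"
        )
    for genome in ("EColi", "Yeast"):
        pct = percentages.get(genome, 0.0)
        if pct > MAX_CONTAMINANT_PCT:
            warnings.append(
                f"{genome} hits at {pct:.2f}% suggest possible contamination"
            )
    return warnings


if __name__ == "__main__":
    for message in screen_warnings({"Human": 90.0, "EColi": 5.0, "Yeast": 0.1}):
        print(message)
```

A sample that passes returns an empty list, so the result can be used directly in a conditional.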

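| ### 2. Post-alignment quality control | |||

| #### [Qualimap](<http://qualimap.bioinfo.cipf.es/>) 2.0.0 | |||

| Qualimap is an alignment quality-control tool. The alignment QC also includes the Picard MarkDuplicates results and the QC results from the Sentieon metrics. | |||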

| ```bash | |||

| qualimap bamqc -bam <bam_file> -outformat PDF:HTML -nt <threads> -outdir <output_directory> --java-mem-size=32G | |||

| ``` | |||

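| ### 3. Variant-calling quality control | |||

| The variant quality-control workflow is as follows: | |||

|  | |||

| #### 3.1 Quality control based on the reference datasets | |||

| #### [Hap.py](<https://github.com/Illumina/hap.py>) v0.3.9 | |||

| hap.py compares a query VCF against a benchmark VCF and computes precision and recall. It accounts for the [different ways a variant can be represented](<https://genome.sph.umich.edu/wiki/Variant_Normalization>) in a VCF and normalizes them before comparison. | |||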

| ```bash | |||

| hap.py <truth_vcf> <query_vcf> -f <bed_file> --threads <threads> -o <output_filename> | |||

| ``` | |||
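
For reference, the precision, recall and F1 values reported for the benchmark comparison follow the standard definitions. The sketch below assumes a single true-positive count for simplicity (hap.py itself distinguishes truth-side and query-side true positives), and the function name is hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall and F1 from true/false positive and false negative counts.

    Returns 0.0 for a metric whose denominator is zero.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return precision, recall, f1


if __name__ == "__main__":
    p, r, f = precision_recall_f1(tp=90, fp=10, fn=30)
    print(f"precision={p:.3f} recall={r:.3f} F1={f:.3f}")
```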

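| #### 3.2 Quality control based on the inheritance rules of the Quartet family | |||

| #### Reproducibility (in-house python script) | |||

| The reference datasets were built by integrating multiple platforms and methods and filtering out false-positive variants that could not be reproducibly detected or that violated Mendelian inheritance. They can be used to assess the relative quality of data generation and analysis methods, but they have limitations because many hard-to-sequence genomic regions are excluded. Comparing the concordance of variant calls between the monozygotic twins allows assessment across the whole genome. | |||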
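
The twin comparison can be sketched as follows. This mirrors the counting rule used in `codescripts/D5_D6.py` (sites where both sisters are `./.` or `0/0` are not counted), but the function name and the input layout, a list of genotype pairs, are assumptions for illustration.

```python
# Genotypes that carry no variant information for the comparison.
NO_CALL = {"./.", "0/0"}


def twin_concordance(genotype_pairs):
    """Fraction of informative sites where the D5 and D6 genotypes are identical.

    `genotype_pairs` is an iterable of (d5_genotype, d6_genotype) strings.
    Returns None when no informative site is present.
    """
    same = diff = 0
    for gt5, gt6 in genotype_pairs:
        if gt5 in NO_CALL and gt6 in NO_CALL:
            continue  # neither sister carries a variant here
        if gt5 == gt6:
            same += 1
        else:
            diff += 1
    total = same + diff
    return same / total if total else None


if __name__ == "__main__":
    pairs = [("0/1", "0/1"), ("1/1", "0/1"), ("0/0", "./."), ("0/0", "0/0")]
    print(twin_concordance(pairs))
```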

| #### [Mendelian Concordance Ratio](https://github.com/sbg/VBT-TrioAnalysis) (vbt v1.1) | |||

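| We first split the four-member family into two trios for Mendelian analysis. A variant is considered consistent with the Mendelian inheritance rules of the Quartet family when the twin sisters agree and the genotypes are consistent with Mendelian inheritance from the parents. The Mendelian concordance ratio is the proportion of all variants detected in the four reference samples that satisfy the Mendelian inheritance rules. | |||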

| ```bash | |||

| vbt mendelian -ref <fasta_file> -mother <family_merged_vcf> -father <family_merged_vcf> -child <family_merged_vcf> -pedigree <ped_file> -outDir <output_directory> -out-prefix <output_directory_prefix> --output-violation-regions -thread-count <threads> | |||

| ``` | |||
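
The rule described above can be illustrated with a minimal sketch, assuming fully called diploid genotypes written as `0/1`-style strings. It only illustrates the definition and is not how VBT evaluates variants; all function names are hypothetical.

```python
from itertools import product


def alleles(genotype):
    """Split a genotype such as '0/1' or '0|1' into its two alleles."""
    return genotype.replace("|", "/").split("/")


def trio_is_mendelian(child, father, mother):
    """True if the child genotype can be formed from one allele of each parent."""
    target = sorted(alleles(child))
    return any(
        sorted(pair) == target
        for pair in product(alleles(father), alleles(mother))
    )


def quartet_is_mendelian(d5, d6, father, mother):
    """True if the twins agree and both trios are Mendelian consistent."""
    twins_agree = sorted(alleles(d5)) == sorted(alleles(d6))
    return (
        twins_agree
        and trio_is_mendelian(d5, father, mother)
        and trio_is_mendelian(d6, father, mother)
    )


def mendelian_concordance_ratio(sites):
    """Proportion of sites, given as (d5, d6, father, mother), that are consistent."""
    sites = list(sites)
    if not sites:
        return None
    return sum(quartet_is_mendelian(*site) for site in sites) / len(sites)


if __name__ == "__main__":
    sites = [
        ("0/1", "0/1", "0/0", "0/1"),  # consistent
        ("1/1", "1/1", "0/0", "0/1"),  # father cannot transmit allele 1
        ("0/1", "1/1", "0/1", "0/1"),  # twins disagree
    ]
    print(mendelian_concordance_ratio(sites))
```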

| ## App Input Files | |||

| ```bash | |||

| choppy samples renluyao/quartet-wes-germline-data-generation-qc-latest --output samples | |||

| ``` | |||

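| #### The samples file contains the following inputs | |||

| **1. inputSamplesFile**: upload this file to Alibaba Cloud and enter its Alibaba Cloud address in the samples file | |||

| See the example **inputSamples.Examples.txt** | |||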

| ```bash | |||

| #read1 #read2 #sample_name | |||

| ``` | |||

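| read1 is the Alibaba Cloud address of the FASTQ read 1 file | |||

| read2 is the Alibaba Cloud address of the FASTQ read 2 file | |||

| sample_name is the name of the sample | |||

| All uploaded files must follow the naming convention | |||

| Quartet_DNA_SequenceTech_SequenceMachine_SequenceSite_Sample_Replicate_Date.R1/R2.fastq.gz | |||

| SequenceTech is the sequencing platform, e.g. ILM or BGI | |||

| SequenceMachine is the sequencing instrument, e.g. XTen, Nova, Hiseq (Illumina), or SEQ500, SEQ1000 (BGI) | |||

| SequenceSite is the English abbreviation of the sequencing site | |||

| Sample is one of LCL5, LCL6, LCL7, LCL8 | |||

| Replicate is the technical replicate number, starting from 1 | |||

| Date is the date the data were obtained, in the format 20200710 | |||

| The suffix must be R1/R2.fastq.gz and must not be changed: R1/R2 must not be written as r1/r2, and fastq.gz must not be written as fq.gz | |||

| For the abbreviation conventions, see the Data Naming Convention: https://fudan-pgx.yuque.com/docs/share/5baa851b-da97-47b9-b6c4-78f2b60595ab?# | |||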
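
The naming convention above can be checked before upload. The following is a minimal sketch: the regular expression is an assumption derived from the template (alphanumeric platform, instrument and site fields; an eight-digit date), and the function name is hypothetical. The linked naming convention remains the authority.

```python
import re

# Pattern assumed from the template:
# Quartet_DNA_SequenceTech_SequenceMachine_SequenceSite_Sample_Replicate_Date.R1/R2.fastq.gz
FASTQ_NAME = re.compile(
    r"^Quartet_DNA_"
    r"(?P<tech>[A-Za-z0-9]+)_"
    r"(?P<machine>[A-Za-z0-9]+)_"
    r"(?P<site>[A-Za-z0-9]+)_"
    r"(?P<sample>LCL[5-8])_"
    r"(?P<replicate>[1-9][0-9]*)_"
    r"(?P<date>[0-9]{8})\."
    r"(?P<read>R[12])\.fastq\.gz$"
)


def parse_quartet_fastq_name(filename):
    """Return the fields of a conforming file name as a dict, or None if it does not conform."""
    match = FASTQ_NAME.match(filename)
    return match.groupdict() if match else None


if __name__ == "__main__":
    print(parse_quartet_fastq_name("Quartet_DNA_ILM_XTen_NVG_LCL5_1_20170531.R1.fastq.gz"))
    print(parse_quartet_fastq_name("Quartet_DNA_ILM_XTen_NVG_LCL5_1_20170531.r1.fq.gz"))
```

The second call returns `None` because both the lowercase `r1` and the `fq.gz` suffix violate the convention.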

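| **2. project** | |||

| The name of the project. Use any string you can recognize; only English letters and digits are allowed, not Chinese characters | |||

| **3. bed** | |||

| The WES capture regions: the BED file matching the library preparation kit | |||

| **For an example of the samples file, see choppy_samples_example.csv** | |||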
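
A samples file can be sanity-checked before submission. This is a minimal sketch using only the standard library; the column names come from `choppy_samples_example.csv`, while the function name and the decision to accept underscores in the project name (as the example project does) are assumptions.

```python
import csv
import io
import re

REQUIRED_COLUMNS = ("sample_id", "inputSamplesFile", "project", "bed")
# Letters and digits only, plus underscore as used by the example project name.
PROJECT_NAME = re.compile(r"[A-Za-z0-9_]+")


def read_samples(handle):
    """Read a choppy samples CSV and return its rows as dictionaries.

    Raises ValueError if a required column is missing or a project name
    contains characters other than ASCII letters, digits or underscores.
    """
    reader = csv.DictReader(handle)
    missing = [c for c in REQUIRED_COLUMNS if c not in (reader.fieldnames or [])]
    if missing:
        raise ValueError(f"missing columns: {', '.join(missing)}")
    rows = []
    for row in reader:
        if not PROJECT_NAME.fullmatch(row["project"]):
            raise ValueError(f"invalid project name: {row['project']!r}")
        rows.append(row)
    return rows


if __name__ == "__main__":
    example = io.StringIO(
        "sample_id,inputSamplesFile,project,bed\n"
        "1,oss://pgx-result/renluyao/dataportal.test.small.txt,"
        "Quartet_DNA_ILM_XTen_NVG_20170531,"
        "oss://pgx-reference-data/bed/cbcga/S07604514_Padded.bed\n"
    )
    for row in read_samples(example):
        print(row["sample_id"], row["project"])
```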

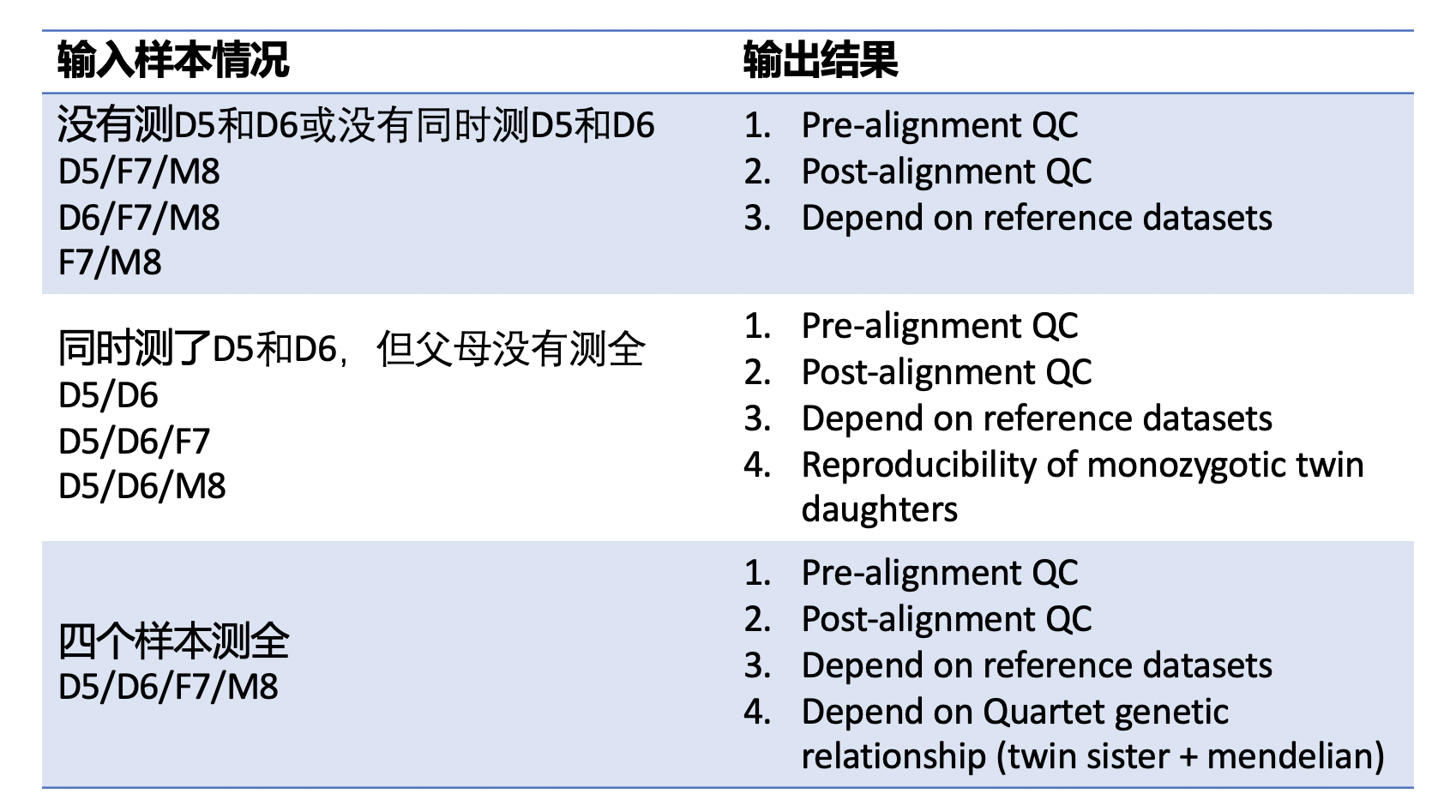

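| #### Combinations of Quartet samples | |||

| ##### 1. LCL5 and LCL6 were not sequenced, or were not both sequenced | |||

| Only raw-data QC, alignment QC and comparison with the reference datasets are reported | |||

| ##### 2. Data for the monozygotic twins LCL5 and LCL6 are included, but the parents' data are incomplete | |||

| Only raw-data QC, alignment QC, comparison with the reference datasets and twin concordance are reported | |||

| ##### 3. All four Quartet samples were sequenced | |||

| All results are reported: raw-data QC, alignment QC, comparison with the reference datasets, twin concordance and the Mendelian concordance ratio | |||

| **Note**: this app assumes that every batch sequences the same set of samples as technical replicates, e.g. batch 1 sequenced LCL5, LCL6, LCL7 and LCL8, and batch 2 and batch 3 also sequenced these four samples. The app does not handle more complex designs: if, for example, batch 1 sequenced LCL5 and LCL6 while batch 2 sequenced LCL7 and LCL8, the app reports only raw-data QC, alignment QC and comparison with the reference datasets, and does not merge data across batches to compute the Mendelian concordance ratio or sister concordance. | |||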
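
The three cases above amount to a small decision rule on the set of samples present in one batch. The sketch below is an illustration only; the function name and the report labels are hypothetical and are not identifiers used by the app.

```python
def available_reports(samples):
    """Return the QC reports that can be produced for one batch.

    `samples` is an iterable of sample names such as "LCL5".
    """
    present = set(samples)
    reports = ["raw-data QC", "alignment QC", "comparison with reference datasets"]
    if {"LCL5", "LCL6"} <= present:
        # Both twins are present, so sister concordance can be computed.
        reports.append("twin concordance")
        if {"LCL7", "LCL8"} <= present:
            # The complete family is present.
            reports.append("Mendelian concordance")
    return reports


if __name__ == "__main__":
    print(available_reports(["LCL5", "LCL7"]))
    print(available_reports(["LCL5", "LCL6", "LCL7"]))
    print(available_reports(["LCL5", "LCL6", "LCL7", "LCL8"]))
```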

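| ## App Output Files | |||

| The computation produces many intermediate results; only the final consolidated result files are described here. The following tasks output the final results: | |||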

| #### 1. extract_tables.wdl | |||

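| Raw-data QC: pre_alignment.txt | |||

| Alignment QC: post_alignment.txt | |||

| Variant-calling QC: variants.calling.qc.txt | |||

| If the user provides a complete set of four family samples, the mean precision and recall of each family unit are also produced, for display on the first page of the report: | |||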

| reference_datasets_aver-std.txt | |||

| #### 2. quartet_mendelian.wdl | |||

| Quartet family-based QC: mendelian.txt | |||

| Mean and SD of the Quartet family results, for display on the first page of the report: | |||

| quartet_indel_aver-std.txt | |||

| quartet_snv_aver-std.txt | |||

| #### 3. D5_D6.WDL | |||

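| If the user did not provide a complete family but both D5 and D6 are available, the concordance of variants called in the monozygotic twins can still be computed; this output is not yet integrated into the report. | |||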

| ${project}.sister.txt | |||

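| ## Results and Interpretation | |||

| #### 1. Raw data quality control | |||

| Raw-data quality control judges data quality from basic characteristics of the sequencing data, such as whether the data volume meets requirements, whether the read duplication rate is too high, base quality, the distribution of the four bases A, T, G and C, GC content, adapter content, and contamination from other species. | |||

| FastQC and FastqScreen are two commonly used raw-data quality-control tools | |||

| Summary table: **pre_alignment.txt** | |||

| | Column | Description | | |||

| | ------------------------- | ------------------------------------ | | |||

| | Sample | Sample name; names ending in R1 are read 1, names ending in R2 are read 2 | | |||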

| | %Dup | % Duplicate reads | | |||

| | %GC | Average % GC content | | |||

| | Total Sequences (million) | Total sequences | | |||

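| | %Human | Percentage of reads mapped to the human genome | | |||

| | %EColi | Percentage of reads mapped to the E. coli genome | | |||

| | %Adapter | Percentage of reads mapped to adapter sequences | | |||

| | %Vector | Percentage of reads mapped to vector sequences | | |||

| | %rRNA | Percentage of reads mapped to rRNA sequences | | |||

| | %Virus | Percentage of reads mapped to viral genomes | | |||

| | %Yeast | Percentage of reads mapped to the yeast genome | | |||

| | %Mitoch | Percentage of reads mapped to mitochondrial sequences | | |||

| | %No hits | Percentage of reads not mapped to any of the above genomes | | |||

| #### 2. Post-alignment quality control | |||

| Summary table: **post_alignment.txt** | |||

| | Column | Description | | |||

| | --------------------- | --------------------------------------------- | | |||

| | Sample | Sample name | | |||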

| | %Mapping | % mapped reads | | |||

| | %Mismatch Rate | Mapping error rate | | |||

| | Mendelian Insert Size | Median insert size(bp) | | |||

| | %Q20 | % bases >Q20 | | |||

| | %Q30 | % bases >Q30 | | |||

| | Mean Coverage | Mean deduped coverage | | |||

| | Median Coverage | Median deduped coverage | | |||

| | PCT_1X | Fraction of genome with at least 1x coverage | | |||

| | PCT_5X | Fraction of genome with at least 5x coverage | | |||

| | PCT_10X | Fraction of genome with at least 10x coverage | | |||

| | PCT_30X | Fraction of genome with at least 30x coverage | | |||

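| #### 3. Variant-calling quality control | |||

| Details: **variants.calling.qc.txt** | |||

| | Column | Description | | |||

| | --------------- | ------------------------------ | | |||

| | Sample | Sample name | | |||

| | SNV number | Number of SNVs detected | | |||

| | INDEL number | Number of INDELs detected | | |||

| | SNV query | Number of SNVs in the high-confidence genomic regions | | |||

| | INDEL query | Number of INDELs in the high-confidence genomic regions | | |||

| | SNV TP | True-positive SNVs | | |||

| | INDEL TP | True-positive INDELs | | |||

| | SNV FP | False-positive SNVs | | |||

| | INDEL FP | False-positive INDELs | | |||

| | SNV FN | False-negative SNVs | | |||

| | INDEL FN | False-negative INDELs | | |||

| | SNV precision | Precision of SNVs against the reference dataset | | |||

| | INDEL precision | Precision of INDELs against the reference dataset | | |||

| | SNV recall | Recall of SNVs against the reference dataset | | |||

| | INDEL recall | Recall of INDELs against the reference dataset | | |||

| | SNV F1 | F1-score of SNVs against the reference dataset | | |||

| | INDEL F1 | F1-score of INDELs against the reference dataset | | |||

| Family-unit summary of the comparison with the reference datasets: **reference_datasets_aver-std.txt** | |||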

| | | Mean | SD | | |||

| | --------------- | ---- | ---- | | |||

| | SNV precision | | | | |||

| | INDEL precision | | | | |||

| | SNV recall | | | | |||

| | INDEL recall | | | | |||

| | SNV F1 | | | | |||

| | INDEL F1 | | | | |||

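| #### 4. Quartet family relationship assessment: mendelian.txt | |||

| | Column | Description | | |||

| | ----------------------------- | ------------------------------------------------------------ | | |||

| | Family | Family name. The current design is four Quartet samples with three technical replicates each; family_1 is the family unit formed by the four rep1 samples, and so on. | | |||

| | Total_Variants | Number of variant sites detected across the four Quartet samples | | |||

| | Mendelian_Concordant_Variants | Number of variant sites consistent with Mendelian inheritance | | |||

| | Mendelian_Concordance_Quartet | Proportion of variant sites consistent with Mendelian inheritance | | |||

| Summary of the family results: **quartet_indel_aver-std.txt** and **quartet_snv_aver-std.txt** | |||

| | | Mean | SD | | |||

| | --------------------------- | ---- | ---- | | |||

| | SNV/INDEL (determined by the file name) | | | | |||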
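
The Mean and SD columns summarize a metric across family units (one per technical replicate). A minimal sketch of that summary is shown below; it assumes the sample standard deviation and rounding to two decimals, neither of which is confirmed by the README, and the function name is hypothetical.

```python
from statistics import mean, stdev


def mean_and_sd(values):
    """Return (mean, sample standard deviation), each rounded to two decimals.

    Returns (None, None) for an empty input and an SD of 0.0 for a single value.
    """
    values = list(values)
    if not values:
        return None, None
    sd = stdev(values) if len(values) > 1 else 0.0
    return round(mean(values), 2), round(sd, 2)


if __name__ == "__main__":
    # Hypothetical Mendelian concordance ratios (in %) for three family units.
    print(mean_and_sd([90.0, 92.0, 94.0]))
```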

+ 2

- 0

choppy_samples_example.csv

| @@ -0,0 +1,2 @@ | |||

| sample_id,inputSamplesFile,project,bed | |||

| 1,oss://pgx-result/renluyao/dataportal.test.small.txt,Quartet_DNA_ILM_XTen_NVG_20170531,oss://pgx-reference-data/bed/cbcga/S07604514_Padded.bed | |||

BIN

codescripts/.DS_Store

+ 90

- 0

codescripts/D5_D6.py

| @@ -0,0 +1,90 @@ | |||

| from __future__ import division | |||

| import pandas as pd | |||

| import sys, argparse, os | |||

| # input arguments | |||

| parser = argparse.ArgumentParser(description="Calculate reproducibility between Quartet_D5 and Quartet_D6") | |||

| parser.add_argument('-sister', '--sister', type=str, help='sister.txt', required=True) | |||

| parser.add_argument('-project', '--project', type=str, help='project name', required=True) | |||

| args = parser.parse_args() | |||

| sister_file = args.sister | |||

| project_name = args.project | |||

| # output file | |||

| output_name = project_name + '.sister.reproducibility.txt' | |||

| output_file = open(output_name,'w') | |||

| # input files | |||

| sister_dat = pd.read_table(sister_file) | |||

| indel_sister_same = 0 | |||

| indel_sister_diff = 0 | |||

| snv_sister_same = 0 | |||

| snv_sister_diff = 0 | |||

| for row in sister_dat.itertuples(): | |||

|     # snv indel | |||

|     if ',' in row[4]: | |||

|         alt = row[4].split(',') | |||

|         alt_len = [len(i) for i in alt] | |||

|         alt_max = max(alt_len) | |||

|     else: | |||

|         alt_max = len(row[4]) | |||

|     alt = alt_max | |||

|     ref = row[3] | |||

|     if len(ref) == 1 and alt == 1: | |||

|         cate = 'SNV' | |||

|     elif len(ref) > alt: | |||

|         cate = 'INDEL' | |||

|     elif alt > len(ref): | |||

|         cate = 'INDEL' | |||

|     elif len(ref) == alt: | |||

|         cate = 'INDEL' | |||

|     # sister | |||

|     if row[5] == row[6]: | |||

|         if row[5] == './.': | |||

|             mendelian = 'noInfo' | |||

|             sister_count = "no" | |||

|         elif row[5] == '0/0': | |||

|             mendelian = 'Ref' | |||

|             sister_count = "no" | |||

|         else: | |||

|             mendelian = '1' | |||

|             sister_count = "yes_same" | |||

|     else: | |||

|         mendelian = '0' | |||

|         if (row[5] == './.' or row[5] == '0/0') and (row[6] == './.' or row[6] == '0/0'): | |||

|             sister_count = "no" | |||

|         else: | |||

|             sister_count = "yes_diff" | |||

|     if cate == 'SNV': | |||

|         if sister_count == 'yes_same': | |||

|             snv_sister_same += 1 | |||

|         elif sister_count == 'yes_diff': | |||

|             snv_sister_diff += 1 | |||

|         else: | |||

|             pass | |||

|     elif cate == 'INDEL': | |||

|         if sister_count == 'yes_same': | |||

|             indel_sister_same += 1 | |||

|         elif sister_count == 'yes_diff': | |||

|             indel_sister_diff += 1 | |||

|         else: | |||

|             pass | |||

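| # Guard against division by zero when no informative sites were found | |||

| indel_total = indel_sister_same + indel_sister_diff | |||

| snv_total = snv_sister_same + snv_sister_diff | |||

| indel_sister = indel_sister_same/indel_total if indel_total else float('nan') | |||

| snv_sister = snv_sister_same/snv_total if snv_total else float('nan') | |||

| outcolumn = 'Project\tReproducibility_D5_D6\n' | |||

| indel_outResult = project_name + '.INDEL' + '\t' + str(indel_sister) + '\n' | |||

| snv_outResult = project_name + '.SNV' + '\t' + str(snv_sister) + '\n' | |||

| output_file.write(outcolumn) | |||

| output_file.write(indel_outResult) | |||

| output_file.write(snv_outResult) | |||

| # Close the output file so the results are flushed to disk | |||

| output_file.close() | |||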

+ 37

- 0

codescripts/Indel_bed.py

| @@ -0,0 +1,37 @@ | |||

| import pandas as pd | |||

| import sys, argparse, os | |||

| mut = pd.read_table('/mnt/pgx_src_data_pool_4/home/renluyao/manuscript/MIE/vcf/mutation_type',header=None) | |||

| outIndel = open(sys.argv[1],'w') | |||

| for row in mut.itertuples(): | |||

|     if ',' in row._4: | |||

|         alt_seq = row._4.split(',') | |||

|         alt_len = [len(i) for i in alt_seq] | |||

|         alt = max(alt_len) | |||

|     else: | |||

|         alt = len(row._4) | |||

|     ref = row._3 | |||

|     pos = row._2 | |||

|     if len(ref) == 1 and alt == 1: | |||

|         pass | |||

|     elif len(ref) > alt: | |||

|         StartPos = int(pos) - 1 | |||

|         EndPos = int(pos) + (len(ref) - 1) | |||

|         outline_indel = row._1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\n' | |||

|         outIndel.write(outline_indel) | |||

|     elif alt > len(ref): | |||

|         StartPos = int(pos) - 1 | |||

|         EndPos = int(pos) + (alt - 1) | |||

|         outline_indel = row._1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\n' | |||

|         outIndel.write(outline_indel) | |||

|     elif len(ref) == alt: | |||

|         StartPos = int(pos) - 1 | |||

|         EndPos = int(pos) + (alt - 1) | |||

|         outline_indel = row._1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\n' | |||

|         outIndel.write(outline_indel) | |||

+ 72

- 0

codescripts/bed_region.py

| @@ -0,0 +1,72 @@ | |||

| import pandas as pd | |||

| import sys, argparse, os | |||

| mut = pd.read_table('/mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/vcf/mutation_type',header=None) | |||

| vote = pd.read_table('/mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/all_info/benchmark.vote.mendelian.txt',header=None) | |||

| merged_df = pd.merge(vote, mut, how='inner', left_on=[0,1], right_on = [0,1]) | |||

| outFile = open(sys.argv[1],'w') | |||

| outIndel = open(sys.argv[2],'w') | |||

| for row in merged_df.itertuples(): | |||

|     #d5 | |||

|     if ',' in row._7: | |||

|         d5 = row._7.split(',') | |||

|         d5_len = [len(i) for i in d5] | |||

|         d5_alt = max(d5_len) | |||

|     else: | |||

|         d5_alt = len(row._7) | |||

|     #d6 | |||

|     if ',' in row._15: | |||

|         d6 = row._15.split(',') | |||

|         d6_len = [len(i) for i in d6] | |||

|         d6_alt = max(d6_len) | |||

|     else: | |||

|         d6_alt = len(row._15) | |||

|     #f7 | |||

|     if ',' in row._23: | |||

|         f7 = row._23.split(',') | |||

|         f7_len = [len(i) for i in f7] | |||

|         f7_alt = max(f7_len) | |||

|     else: | |||

|         f7_alt = len(row._23) | |||

|     #m8 | |||

|     if ',' in row._31: | |||

|         m8 = row._31.split(',') | |||

|         m8_len = [len(i) for i in m8] | |||

|         m8_alt = max(m8_len) | |||

|     else: | |||

|         m8_alt = len(row._31) | |||

|     all_length = [d5_alt,d6_alt,f7_alt,m8_alt] | |||

|     alt = max(all_length) | |||

|     ref = row._35 | |||

|     pos = int(row._2) | |||

|     if len(ref) == 1 and alt == 1: | |||

|         StartPos = int(pos) -1 | |||

|         EndPos = int(pos) | |||

|         cate = 'SNV' | |||

|     elif len(ref) > alt: | |||

|         StartPos = int(pos) - 1 | |||

|         EndPos = int(pos) + (len(ref) - 1) | |||

|         cate = 'INDEL' | |||

|         outline_indel = row._1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\n' | |||

|         outIndel.write(outline_indel) | |||

|     elif alt > len(ref): | |||

|         StartPos = int(pos) - 1 | |||

|         EndPos = int(pos) + (alt - 1) | |||

|         cate = 'INDEL' | |||

|         outline_indel = row._1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\n' | |||

|         outIndel.write(outline_indel) | |||

|     elif len(ref) == alt: | |||

|         StartPos = int(pos) - 1 | |||

|         EndPos = int(pos) + (alt - 1) | |||

|         cate = 'INDEL' | |||

|         outline_indel = row._1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\n' | |||

|         outIndel.write(outline_indel) | |||

|     outline = row._1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\t' + str(row._2) + '\t' + cate + '\n' | |||

|     outFile.write(outline) | |||

+ 67

- 0

codescripts/cluster.sh

| @@ -0,0 +1,67 @@ | |||

| cat benchmark.men.vote.diffbed.lengthlessthan50.txt | awk '{print $1"\t"$2"\t"".""\t"$35"\t"$7"\t.\t.\t.\tGT\t"$6}' | grep -v '0/0' > LCL5.body | |||

| cat benchmark.men.vote.diffbed.lengthlessthan50.txt | awk '{print $1"\t"$2"\t"".""\t"$35"\t"$15"\t.\t.\t.\tGT\t"$14}' | grep -v '0/0' > LCL6.body | |||

| cat benchmark.men.vote.diffbed.lengthlessthan50.txt | awk '{print $1"\t"$2"\t"".""\t"$35"\t"$23"\t.\t.\t.\tGT\t"$22}' | grep -v '0/0'> LCL7.body | |||

| cat benchmark.men.vote.diffbed.lengthlessthan50.txt | awk '{print $1"\t"$2"\t"".""\t"$35"\t"$31"\t.\t.\t.\tGT\t"$30}'| grep -v '0/0' > LCL8.body | |||

| cat header5 LCL5.body > LCL5.beforediffbed.vcf | |||

| cat header6 LCL6.body > LCL6.beforediffbed.vcf | |||

| cat header7 LCL7.body > LCL7.beforediffbed.vcf | |||

| cat header8 LCL8.body > LCL8.beforediffbed.vcf | |||

| rtg bgzip *beforediffbed.vcf | |||

| rtg index *beforediffbed.vcf.gz | |||

| rtg vcffilter -i LCL5.beforediffbed.vcf.gz --exclude-bed=/mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed -o LCL5.afterfilterdiffbed.vcf.gz | |||

| rtg vcffilter -i LCL6.beforediffbed.vcf.gz --exclude-bed=/mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed -o LCL6.afterfilterdiffbed.vcf.gz | |||

| rtg vcffilter -i LCL7.beforediffbed.vcf.gz --exclude-bed=/mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed -o LCL7.afterfilterdiffbed.vcf.gz | |||

| rtg vcffilter -i LCL8.beforediffbed.vcf.gz --exclude-bed=/mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed -o LCL8.afterfilterdiffbed.vcf.gz | |||

| /mnt/pgx_src_data_pool_4/home/renluyao/softwares/annovar/table_annovar.pl LCL5.beforediffbed.vcf.gz /mnt/pgx_src_data_pool_4/home/renluyao/softwares/annovar/humandb \ | |||

| -buildver hg38 \ | |||

| -out LCL5 \ | |||

| -remove \ | |||

| -protocol 1000g2015aug_all,1000g2015aug_afr,1000g2015aug_amr,1000g2015aug_eas,1000g2015aug_eur,1000g2015aug_sas,clinvar_20190305,gnomad211_genome \ | |||

| -operation f,f,f,f,f,f,f,f \ | |||

| -nastring . \ | |||

| -vcfinput \ | |||

| --thread 8 | |||

| rtg vcfeval -b /mnt/pgx_src_data_pool_4/home/renluyao/Quartet/GIAB/NA12878_HG001/HG001_GRCh38_GIAB_highconf_CG-IllFB-IllGATKHC-Ion-10X-SOLID_CHROM1-X_v.3.3.2_highconf_PGandRTGphasetransfer.vcf.gz -c LCL5.afterfilterdiffbed.vcf.gz -o LCL5_NIST -t /mnt/pgx_src_data_pool_4/home/renluyao/annotation/hg38/GRCh38.d1.vd1.sdf/ | |||

| rtg vcfeval -b /mnt/pgx_src_data_pool_4/home/renluyao/Quartet/GIAB/NA12878_HG001/HG001_GRCh38_GIAB_highconf_CG-IllFB-IllGATKHC-Ion-10X-SOLID_CHROM1-X_v.3.3.2_highconf_PGandRTGphasetransfer.vcf.gz -c LCL6.afterfilterdiffbed.vcf.gz -o LCL6_NIST -t /mnt/pgx_src_data_pool_4/home/renluyao/annotation/hg38/GRCh38.d1.vd1.sdf/ | |||

| rtg vcfeval -b /mnt/pgx_src_data_pool_4/home/renluyao/Quartet/GIAB/NA12878_HG001/HG001_GRCh38_GIAB_highconf_CG-IllFB-IllGATKHC-Ion-10X-SOLID_CHROM1-X_v.3.3.2_highconf_PGandRTGphasetransfer.vcf.gz -c LCL7.afterfilterdiffbed.vcf.gz -o LCL7_NIST -t /mnt/pgx_src_data_pool_4/home/renluyao/annotation/hg38/GRCh38.d1.vd1.sdf/ | |||

| rtg vcfeval -b /mnt/pgx_src_data_pool_4/home/renluyao/Quartet/GIAB/NA12878_HG001/HG001_GRCh38_GIAB_highconf_CG-IllFB-IllGATKHC-Ion-10X-SOLID_CHROM1-X_v.3.3.2_highconf_PGandRTGphasetransfer.vcf.gz -c LCL8.afterfilterdiffbed.vcf.gz -o LCL8_NIST -t /mnt/pgx_src_data_pool_4/home/renluyao/annotation/hg38/GRCh38.d1.vd1.sdf/ | |||

| zcat LCL5.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) == 1)) { print } }' | wc -l | |||

| zcat LCL6.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) == 1)) { print } }' | wc -l | |||

| zcat LCL7.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) == 1)) { print } }' | wc -l | |||

| zcat LCL8.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) == 1)) { print } }' | wc -l | |||

| zcat LCL5.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) < 11) && (length($5) > 1)) { print } }' | wc -l | |||

| zcat LCL6.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) < 11) && (length($5) > 1)) { print } }' | wc -l | |||

| zcat LCL7.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) < 11) && (length($5) > 1)) { print } }' | wc -l | |||

| zcat LCL8.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) < 11) && (length($5) > 1)) { print } }' | wc -l | |||

| zcat LCL5.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) > 10)) { print } }' | wc -l | |||

| zcat LCL6.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) > 10)) { print } }' | wc -l | |||

| zcat LCL7.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) > 10)) { print } }' | wc -l | |||

| zcat LCL8.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) > 10)) { print } }' | wc -l | |||

| bedtools subtract -a LCL5.27.homo_ref.consensus.bed -b /mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed > LCL5.27.homo_ref.consensus.filtereddiffbed.bed | |||

| bedtools subtract -a LCL6.27.homo_ref.consensus.bed -b /mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed > LCL6.27.homo_ref.consensus.filtereddiffbed.bed | |||

| bedtools subtract -a LCL7.27.homo_ref.consensus.bed -b /mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed > LCL7.27.homo_ref.consensus.filtereddiffbed.bed | |||

| bedtools subtract -a LCL8.27.homo_ref.consensus.bed -b /mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed > LCL8.27.homo_ref.consensus.filtereddiffbed.bed | |||

| python vcf2bed.py LCL5.body LCL5.variants.bed | |||

| python vcf2bed.py LCL6.body LCL6.variants.bed | |||

| python vcf2bed.py LCL7.body LCL7.variants.bed | |||

| python vcf2bed.py LCL8.body LCL8.variants.bed | |||

| cat /mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/all_info/LCL5.variants.bed | cut -f1,11,12 | cat - LCL5.27.homo_ref.consensus.filtereddiffbed.bed | sort -k1,1 -k2,2n > LCL5.high.confidence.bed | |||

+ 156

- 0

codescripts/extract_tables.py

| @@ -0,0 +1,156 @@ | |||

| import json | |||

| import pandas as pd | |||

| from functools import reduce | |||

| import sys, argparse, os | |||

| parser = argparse.ArgumentParser(description="This script is to get information from multiqc and sentieon, output the raw fastq, bam and variants calling (precision and recall) quality metrics") | |||

| parser.add_argument('-quality', '--quality_yield', type=str, help='*.quality_yield.txt', required=True) | |||

| parser.add_argument('-depth', '--wgs_metrics', type=str, help='*deduped_WgsMetricsAlgo.txt', required=True) | |||

| parser.add_argument('-aln', '--aln_metrics', type=str, help='*_deduped_aln_metrics.txt', required=True) | |||

| parser.add_argument('-is', '--is_metrics', type=str, help='*_deduped_is_metrics.txt', required=True) | |||

| parser.add_argument('-fastqc', '--fastqc', type=str, help='multiqc_fastqc.txt', required=True) | |||

| parser.add_argument('-fastqscreen', '--fastqscreen', type=str, help='multiqc_fastq_screen.txt', required=True) | |||

| parser.add_argument('-hap', '--happy', type=str, help='multiqc_happy_data.json', required=True) | |||

| parser.add_argument('-project', '--project_name', type=str, help='project_name', required=True) | |||

| args = parser.parse_args() | |||

| # Rename input: | |||

| quality_yield_file = args.quality_yield | |||

| wgs_metrics_file = args.wgs_metrics | |||

| aln_metrics_file = args.aln_metrics | |||

| is_metrics_file = args.is_metrics | |||

| fastqc_file = args.fastqc | |||

| fastqscreen_file = args.fastqscreen | |||

| hap_file = args.happy | |||

| project_name = args.project_name | |||

| ############################################# | |||

| # fastqc | |||

| fastqc = pd.read_table(fastqc_file) | |||

| #fastqc = dat.loc[:, dat.columns.str.startswith('FastQC')] | |||

| #fastqc.insert(loc=0, column='Sample', value=dat['Sample']) | |||

| #fastqc_stat = fastqc.dropna() | |||

| # qualimap | |||

| #qualimap = dat.loc[:, dat.columns.str.startswith('QualiMap')] | |||

| #qualimap.insert(loc=0, column='Sample', value=dat['Sample']) | |||

| #qualimap_stat = qualimap.dropna() | |||

| # fastqc | |||

| #dat = pd.read_table(fastqc_file) | |||

| #fastqc_module = dat.loc[:, "per_base_sequence_quality":"kmer_content"] | |||

| #fastqc_module.insert(loc=0, column='Sample', value=dat['Sample']) | |||

| #fastqc_all = pd.merge(fastqc_stat,fastqc_module, how='outer', left_on=['Sample'], right_on = ['Sample']) | |||

| # fastqscreen | |||

| dat = pd.read_table(fastqscreen_file) | |||

| fastqscreen = dat.loc[:, dat.columns.str.endswith('percentage')] | |||

| dat['Sample'] = [i.replace('_screen','') for i in dat['Sample']] | |||

| fastqscreen.insert(loc=0, column='Sample', value=dat['Sample']) | |||

| # pre-alignment | |||

| pre_alignment_dat = pd.merge(fastqc,fastqscreen,how="outer",left_on=['Sample'],right_on=['Sample']) | |||

| pre_alignment_dat['FastQC_mqc-generalstats-fastqc-total_sequences'] = pre_alignment_dat['FastQC_mqc-generalstats-fastqc-total_sequences']/1000000 | |||

| del pre_alignment_dat['FastQC_mqc-generalstats-fastqc-percent_fails'] | |||

| del pre_alignment_dat['FastQC_mqc-generalstats-fastqc-avg_sequence_length'] | |||

| del pre_alignment_dat['ERCC percentage'] | |||

| del pre_alignment_dat['Phix percentage'] | |||

| del pre_alignment_dat['Mouse percentage'] | |||

| pre_alignment_dat = pre_alignment_dat.round(2) | |||

| pre_alignment_dat.columns = ['Sample','%Dup','%GC','Total Sequences (million)','%Human','%EColi','%Adapter','%Vector','%rRNA','%Virus','%Yeast','%Mitoch','%No hits'] | |||

| pre_alignment_dat.to_csv('pre_alignment.txt',sep="\t",index=0) | |||

| ############################ | |||

| dat = pd.read_table(aln_metrics_file,index_col=False) | |||

| dat['PCT_ALIGNED_READS'] = dat["PF_READS_ALIGNED"]/dat["TOTAL_READS"] | |||

| aln_metrics = dat[["Sample", "PCT_ALIGNED_READS","PF_MISMATCH_RATE"]].copy() | |||

| # Scale only the numeric columns to percentages; leave the Sample strings untouched | |||

| aln_metrics[["PCT_ALIGNED_READS","PF_MISMATCH_RATE"]] = aln_metrics[["PCT_ALIGNED_READS","PF_MISMATCH_RATE"]] * 100 | |||

| aln_metrics['Sample'] = [x[-1] for x in aln_metrics['Sample'].str.split('/')] | |||

| dat = pd.read_table(is_metrics_file,index_col=False) | |||

| is_metrics = dat[['Sample', 'MEDIAN_INSERT_SIZE']].copy() | |||

| is_metrics['Sample'] = [x[-1] for x in is_metrics['Sample'].str.split('/')] | |||

| dat = pd.read_table(quality_yield_file,index_col=False) | |||

| dat['%Q20'] = dat['Q20_BASES']/dat['TOTAL_BASES'] | |||

| dat['%Q30'] = dat['Q30_BASES']/dat['TOTAL_BASES'] | |||

| quality_yield = dat[['Sample','%Q20','%Q30']].copy() | |||

| # Scale only the numeric columns to percentages; leave the Sample strings untouched | |||

| quality_yield[['%Q20','%Q30']] = quality_yield[['%Q20','%Q30']] * 100 | |||

| quality_yield['Sample'] = [x[-1] for x in quality_yield['Sample'].str.split('/')] | |||

| dat = pd.read_table(wgs_metrics_file,index_col=False) | |||

| wgs_metrics = dat[['Sample','MEAN_COVERAGE','MEDIAN_COVERAGE','PCT_1X', 'PCT_5X', 'PCT_10X','PCT_30X']].copy() | |||

| wgs_metrics['PCT_1X'] = wgs_metrics['PCT_1X'] * 100 | |||

| wgs_metrics['PCT_5X'] = wgs_metrics['PCT_5X'] * 100 | |||

| wgs_metrics['PCT_10X'] = wgs_metrics['PCT_10X'] * 100 | |||

| wgs_metrics['PCT_30X'] = wgs_metrics['PCT_30X'] * 100 | |||

| wgs_metrics['Sample'] = [x[-1] for x in wgs_metrics['Sample'].str.split('/')] | |||

| data_frames = [aln_metrics, is_metrics, quality_yield, wgs_metrics] | |||

| post_alignment_dat = reduce(lambda left,right: pd.merge(left,right,on=['Sample'],how='outer'), data_frames) | |||

| post_alignment_dat.columns = ['Sample', '%Mapping', '%Mismatch Rate', 'Mendelian Insert Size','%Q20', '%Q30', 'Mean Coverage' ,'Median Coverage', 'PCT_1X', 'PCT_5X', 'PCT_10X','PCT_30X'] | |||

| post_alignment_dat = post_alignment_dat.round(2) | |||

| post_alignment_dat.to_csv('post_alignment.txt',sep="\t",index=0) | |||

| ######################################### | |||

| # variants calling | |||

| with open(hap_file) as hap_json: | |||

| happy = json.load(hap_json) | |||

| dat =pd.DataFrame.from_records(happy) | |||

| dat = dat.loc[:, dat.columns.str.endswith('ALL')] | |||

| dat_transposed = dat.T | |||

| dat_transposed = dat_transposed.loc[:,['sample_id','TRUTH.FN','QUERY.TOTAL','QUERY.FP','QUERY.UNK','METRIC.Precision','METRIC.Recall','METRIC.F1_Score']] | |||

| dat_transposed['QUERY.TP'] = dat_transposed['QUERY.TOTAL'].astype(int) - dat_transposed['QUERY.UNK'].astype(int) - dat_transposed['QUERY.FP'].astype(int) | |||

| dat_transposed['QUERY'] =dat_transposed['QUERY.TOTAL'].astype(int) - dat_transposed['QUERY.UNK'].astype(int) | |||

| indel = dat_transposed[['INDEL' in s for s in dat_transposed.index]] | |||

| snv = dat_transposed[['SNP' in s for s in dat_transposed.index]] | |||

| indel.reset_index(drop=True, inplace=True) | |||

| snv.reset_index(drop=True, inplace=True) | |||

| benchmark = pd.concat([snv, indel], axis=1) | |||

| benchmark = benchmark[["sample_id", 'QUERY.TOTAL', 'QUERY','QUERY.TP','QUERY.FP','TRUTH.FN','METRIC.Precision', 'METRIC.Recall','METRIC.F1_Score']] | |||

| benchmark.columns = ['Sample','sample_id','SNV number','INDEL number','SNV query','INDEL query','SNV TP','INDEL TP','SNV FP','INDEL FP','SNV FN','INDEL FN','SNV precision','INDEL precision','SNV recall','INDEL recall','SNV F1','INDEL F1'] | |||

| benchmark = benchmark[['Sample','SNV number','INDEL number','SNV query','INDEL query','SNV TP','INDEL TP','SNV FP','INDEL FP','SNV FN','INDEL FN','SNV precision','INDEL precision','SNV recall','INDEL recall','SNV F1','INDEL F1']] | |||

| benchmark['SNV precision'] = benchmark['SNV precision'].astype(float) | |||

| benchmark['INDEL precision'] = benchmark['INDEL precision'].astype(float) | |||

| benchmark['SNV recall'] = benchmark['SNV recall'].astype(float) | |||

| benchmark['INDEL recall'] = benchmark['INDEL recall'].astype(float) | |||

| benchmark['SNV F1'] = benchmark['SNV F1'].astype(float) | |||

| benchmark['INDEL F1'] = benchmark['INDEL F1'].astype(float) | |||

| benchmark = benchmark.round(2) | |||

| benchmark.to_csv('variants.calling.qc.txt',sep="\t",index=0) | |||

| #all_rep = [x.split('_')[6] for x in benchmark['Sample']] | |||

| #if all_rep.count(all_rep[0]) == 4: | |||

| # rep = list(set(all_rep)) | |||

| # columns = ['Family','Average Precision','Average Recall','Precison SD','Recall SD'] | |||

| # df_ = pd.DataFrame(columns=columns) | |||

| # for i in rep: | |||

| # string = "_" + i + "_" | |||

| # sub_dat = benchmark[benchmark['Sample'].str.contains('_1_')] | |||

| # mean = list(sub_dat.mean(axis = 0, skipna = True)) | |||

| # sd = list(sub_dat.std(axis = 0, skipna = True)) | |||

| # family_name = project_name + "." + i + ".SNV" | |||

| # df_ = df_.append({'Family': family_name, 'Average Precision': mean[0], 'Average Recall': mean[2], 'Precison SD': sd[0], 'Recall SD': sd[2] }, ignore_index=True) | |||

| # family_name = project_name + "." + i + ".INDEL" | |||

| # df_ = df_.append({'Family': family_name, 'Average Precision': mean[1], 'Average Recall': mean[3], 'Precison SD': sd[1], 'Recall SD': sd[3] }, ignore_index=True) | |||

| # df_ = df_.round(2) | |||

| # df_.to_csv('precision.recall.txt',sep="\t",index=0) | |||

| #else: | |||

| # pass | |||

+ 92

- 0

codescripts/extract_vcf_information.py

| @@ -0,0 +1,92 @@ | |||

| import sys,getopt | |||

| import os | |||

| import re | |||

| import fileinput | |||

| import pandas as pd | |||

| def usage(): | |||

| print( | |||

| """ | |||

| Usage: python extract_vcf_information.py -i input_merged_vcf_file -o parsed_file | |||

| This script extracts SNV and indel information from a VCF file and writes a tab-delimited file. | |||

| Input: | |||

| -i the selected vcf file | |||

| Output: | |||

| -o tab-delimited parsed file | |||

| """) | |||

| # select supported small variants | |||

| def process(oneLine): | |||

| line = oneLine.rstrip() | |||

| strings = line.strip().split('\t') | |||

| infoParsed = parse_INFO(strings[7]) | |||

| formatKeys = strings[8].split(':') | |||

| formatValues = strings[9].split(':') | |||

| for i in range(len(formatKeys)): | |||

| if formatKeys[i] == 'AD': | |||

| ra = formatValues[i].split(',') | |||

| infoParsed['RefDP'] = ra[0] | |||

| infoParsed['AltDP'] = ra[1] | |||

| if (int(ra[1]) + int(ra[0])) != 0: | |||

| infoParsed['af'] = float(int(ra[1])/(int(ra[1]) + int(ra[0]))) | |||

| else: | |||

| pass | |||

| else: | |||

| infoParsed[formatKeys[i]] = formatValues[i] | |||

| infoParsed['chromo'] = strings[0] | |||

| infoParsed['pos'] = strings[1] | |||

| infoParsed['id'] = strings[2] | |||

| infoParsed['ref'] = strings[3] | |||

| infoParsed['alt'] = strings[4] | |||

| infoParsed['qual'] = strings[5] | |||

| return infoParsed | |||

| def parse_INFO(info): | |||

| strings = info.strip().split(';') | |||

| keys = [] | |||

| values = [] | |||

| for i in strings: | |||

| kv = i.split('=') | |||

| if kv[0] == 'DB': | |||

| keys.append('DB') | |||

| values.append('1') | |||

| elif kv[0] == 'AF': | |||

| pass | |||

| else: | |||

| keys.append(kv[0]) | |||

| values.append(kv[1]) | |||

| infoDict = dict(zip(keys, values)) | |||

| return infoDict | |||

| opts,args = getopt.getopt(sys.argv[1:],"hi:o:") | |||

| for op,value in opts: | |||

| if op == "-i": | |||

| inputFile=value | |||

| elif op == "-o": | |||

| outputFile=value | |||

| elif op == "-h": | |||

| usage() | |||

| sys.exit() | |||

| if len(sys.argv[1:]) < 3: | |||

| usage() | |||

| sys.exit() | |||

| allDict = [] | |||

| for line in fileinput.input(inputFile): | |||

| m = re.match(r'^#',line) | |||

| if m is not None: | |||

| pass | |||

| else: | |||

| oneDict = process(line) | |||

| allDict.append(oneDict) | |||

| allTable = pd.DataFrame(allDict) | |||

| allTable.to_csv(outputFile,sep='\t',index=False) | |||
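
The parsing done by `process` and `parse_INFO` above can be sketched in a more defensive form. This is a minimal illustration, not the script's own code: the helper names `parse_info` and `allele_fraction` are hypothetical, and unlike `parse_INFO` the sketch keeps the `AF` key and maps every value-less flag (not only `DB`) to `'1'`.

```python
def parse_info(info):
    """Parse a VCF INFO string into a dict; value-less flags map to '1'."""
    parsed = {}
    for field in info.strip().split(';'):
        if not field or field == '.':
            continue  # skip empty fields and the VCF missing-value marker
        key, sep, value = field.partition('=')
        parsed[key] = value if sep else '1'
    return parsed


def allele_fraction(ad):
    """Return alt / (ref + alt) from an AD value such as '12,8'.

    Returns None when AD is missing or the combined depth is zero.
    """
    parts = ad.split(',')
    if len(parts) < 2 or '.' in parts[:2]:
        return None
    ref_dp, alt_dp = int(parts[0]), int(parts[1])
    total = ref_dp + alt_dp
    return alt_dp / total if total else None
```

Using `str.partition` avoids the `IndexError` that `kv[1]` would raise on a flag field without `=`.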

+ 47

- 0

codescripts/filter_indel_over_50_cluster.py

| @@ -0,0 +1,47 @@ | |||

| import sys,getopt | |||

| from itertools import islice | |||

| over_50_outfile = open("indel_lenth_over_50.txt",'w') | |||

| less_50_outfile = open("benchmark.men.vote.diffbed.lengthlessthan50.txt","w") | |||

| def process(line): | |||

| strings = line.strip().split('\t') | |||

| #d5 | |||

| if ',' in strings[6]: | |||

| d5 = strings[6].split(',') | |||

| d5_len = [len(i) for i in d5] | |||

| d5_alt = max(d5_len) | |||

| else: | |||

| d5_alt = len(strings[6]) | |||

| #d6 | |||

| if ',' in strings[14]: | |||

| d6 = strings[14].split(',') | |||

| d6_len = [len(i) for i in d6] | |||

| d6_alt = max(d6_len) | |||

| else: | |||

| d6_alt = len(strings[14]) | |||

| #f7 | |||

| if ',' in strings[22]: | |||

| f7 = strings[22].split(',') | |||

| f7_len = [len(i) for i in f7] | |||

| f7_alt = max(f7_len) | |||

| else: | |||

| f7_alt = len(strings[22]) | |||

| #m8 | |||

| if ',' in strings[30]: | |||

| m8 = strings[30].split(',') | |||

| m8_len = [len(i) for i in m8] | |||

| m8_alt = max(m8_len) | |||

| else: | |||

| m8_alt = len(strings[30]) | |||

| #ref | |||

| ref_len = len(strings[34]) | |||

| if (d5_alt > 50) or (d6_alt > 50) or (f7_alt > 50) or (m8_alt > 50) or (ref_len > 50): | |||

| over_50_outfile.write(line) | |||

| else: | |||

| less_50_outfile.write(line) | |||

| input_file = open(sys.argv[1]) | |||

| for line in islice(input_file, 1, None): | |||

| process(line) | |||

+ 43

- 0

codescripts/filter_indel_over_50_mendelian.py

| @@ -0,0 +1,43 @@ | |||

| from itertools import islice | |||

| import fileinput | |||

| import sys, argparse, os | |||

| # input arguments | |||

| parser = argparse.ArgumentParser(description="this script is to exclude indel over 50bp") | |||

| parser.add_argument('-i', '--mergedGVCF', type=str, help='merged gVCF txt with only chr, pos, ref, alt and genotypes', required=True) | |||

| parser.add_argument('-prefix', '--prefix', type=str, help='prefix of output file', required=True) | |||

| args = parser.parse_args() | |||

| input_dat = args.mergedGVCF | |||

| prefix = args.prefix | |||

| # output file | |||

| output_name = prefix + '.indel.lessthan50bp.txt' | |||

| outfile = open(output_name,'w') | |||

| def process(line): | |||

| strings = line.strip().split('\t') | |||

| #d5 | |||

| if ',' in strings[3]: | |||

| alt = strings[3].split(',') | |||

| alt_len = [len(i) for i in alt] | |||

| alt_max = max(alt_len) | |||

| else: | |||

| alt_max = len(strings[3]) | |||

| #ref | |||

| ref_len = len(strings[2]) | |||

| if (alt_max > 50) or (ref_len > 50): | |||

| pass | |||

| else: | |||

| outfile.write(line) | |||

| for line in fileinput.input(input_dat): | |||

| process(line) | |||
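
Both length filters above apply the same rule: drop a record when the reference allele or any alternative allele is longer than 50 bp. A minimal sketch of that rule as a reusable function follows; the names `longest_allele` and `keep_small_variant` and the `max_len` parameter are hypothetical and not part of the scripts.

```python
def longest_allele(ref, alt):
    """Length of the longest allele among REF and the comma-separated ALT alleles."""
    return max(len(allele) for allele in [ref] + alt.split(','))


def keep_small_variant(ref, alt, max_len=50):
    """True when no allele exceeds max_len bases (the scripts above use 50)."""
    return longest_allele(ref, alt) <= max_len
```

Splitting on `,` unconditionally gives the same result as the `if ',' in ...` branches, because splitting a string without commas returns a one-element list.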

+ 416

- 0

codescripts/high_confidence_call_vote.py

| @@ -0,0 +1,416 @@ | |||

| from __future__ import division | |||

| import sys, argparse, os | |||

| import fileinput | |||

| import re | |||

| import pandas as pd | |||

| from operator import itemgetter | |||

| from collections import Counter | |||

| from itertools import islice | |||

| from numpy import * | |||

| import statistics | |||

| # input arguments | |||

| parser = argparse.ArgumentParser(description="this script is to count voting number") | |||

| parser.add_argument('-vcf', '--multi_sample_vcf', type=str, help='The VCF file you want to count the voting number', required=True) | |||

| parser.add_argument('-dup', '--dup_list', type=str, help='Duplication list', required=True) | |||

| parser.add_argument('-sample', '--sample_name', type=str, help='which sample of quartet', required=True) | |||

| parser.add_argument('-prefix', '--prefix', type=str, help='Prefix of output file name', required=True) | |||

| args = parser.parse_args() | |||

| multi_sample_vcf = args.multi_sample_vcf | |||

| dup_list = args.dup_list | |||

| prefix = args.prefix | |||

| sample_name = args.sample_name | |||

| vcf_header = '''##fileformat=VCFv4.2 | |||

| ##fileDate=20200331 | |||

| ##source=high_confidence_calls_intergration(choppy app) | |||

| ##reference=GRCh38.d1.vd1 | |||

| ##INFO=<ID=location,Number=1,Type=String,Description="Repeat region"> | |||

| ##INFO=<ID=DETECTED,Number=1,Type=Integer,Description="Number of detected votes"> | |||

| ##INFO=<ID=VOTED,Number=1,Type=Integer,Description="Number of consensus votes"> | |||

| ##INFO=<ID=FAM,Number=1,Type=Integer,Description="Number of mendelian consistent votes"> | |||

| ##FORMAT=<ID=GT,Number=1,Type=String,Description="Genotype"> | |||

| ##FORMAT=<ID=DP,Number=1,Type=Integer,Description="Sum depth of all samples"> | |||

| ##FORMAT=<ID=ALT,Number=1,Type=Integer,Description="Sum alternative depth of all samples"> | |||

| ##FORMAT=<ID=AF,Number=1,Type=Float,Description="Allele frequency, sum alternative depth / sum depth"> | |||

| ##FORMAT=<ID=GQ,Number=1,Type=Float,Description="Average genotype quality"> | |||

| ##FORMAT=<ID=QD,Number=1,Type=Float,Description="Average Variant Confidence/Quality by Depth"> | |||

| ##FORMAT=<ID=MQ,Number=1,Type=Float,Description="Average mapping quality"> | |||

| ##FORMAT=<ID=FS,Number=1,Type=Float,Description="Average Phred-scaled p-value using Fisher's exact test to detect strand bias"> | |||

| ##FORMAT=<ID=QUALI,Number=1,Type=Float,Description="Average variant quality"> | |||

| ##contig=<ID=chr1,length=248956422> | |||

| ##contig=<ID=chr2,length=242193529> | |||

| ##contig=<ID=chr3,length=198295559> | |||

| ##contig=<ID=chr4,length=190214555> | |||

| ##contig=<ID=chr5,length=181538259> | |||

| ##contig=<ID=chr6,length=170805979> | |||

| ##contig=<ID=chr7,length=159345973> | |||

| ##contig=<ID=chr8,length=145138636> | |||

| ##contig=<ID=chr9,length=138394717> | |||

| ##contig=<ID=chr10,length=133797422> | |||

| ##contig=<ID=chr11,length=135086622> | |||

| ##contig=<ID=chr12,length=133275309> | |||

| ##contig=<ID=chr13,length=114364328> | |||

| ##contig=<ID=chr14,length=107043718> | |||

| ##contig=<ID=chr15,length=101991189> | |||

| ##contig=<ID=chr16,length=90338345> | |||

| ##contig=<ID=chr17,length=83257441> | |||

| ##contig=<ID=chr18,length=80373285> | |||

| ##contig=<ID=chr19,length=58617616> | |||

| ##contig=<ID=chr20,length=64444167> | |||

| ##contig=<ID=chr21,length=46709983> | |||

| ##contig=<ID=chr22,length=50818468> | |||

| ##contig=<ID=chrX,length=156040895> | |||

| ''' | |||

| vcf_header_all_sample = '''##fileformat=VCFv4.2 | |||

| ##fileDate=20200331 | |||

| ##reference=GRCh38.d1.vd1 | |||

| ##INFO=<ID=location,Number=1,Type=String,Description="Repeat region"> | |||

| ##INFO=<ID=DUP,Number=0,Type=Flag,Description="Duplicated variant records"> | |||

| ##INFO=<ID=DETECTED,Number=1,Type=Integer,Description="Number of detected votes"> | |||

| ##INFO=<ID=VOTED,Number=1,Type=Integer,Description="Number of consensus votes"> | |||

| ##INFO=<ID=FAM,Number=1,Type=Integer,Description="Number of mendelian consistent votes"> | |||

| ##INFO=<ID=ALL_ALT,Number=1,Type=Float,Description="Sum of alternative reads of all samples"> | |||

| ##INFO=<ID=ALL_DP,Number=1,Type=Float,Description="Sum of depth of all samples"> | |||

| ##INFO=<ID=ALL_AF,Number=1,Type=Float,Description="Allele frequency of net alternative reads and net depth"> | |||

| ##INFO=<ID=GQ_MEAN,Number=1,Type=Float,Description="Mean of genotype quality of all samples"> | |||

| ##INFO=<ID=QD_MEAN,Number=1,Type=Float,Description="Average Variant Confidence/Quality by Depth"> | |||

| ##INFO=<ID=MQ_MEAN,Number=1,Type=Float,Description="Mean of mapping quality of all samples"> | |||

| ##INFO=<ID=FS_MEAN,Number=1,Type=Float,Description="Average Phred-scaled p-value using Fisher's exact test to detect strand bias"> | |||

| ##INFO=<ID=QUAL_MEAN,Number=1,Type=Float,Description="Average variant quality"> | |||

| ##INFO=<ID=PCR,Number=1,Type=String,Description="Consensus of PCR votes"> | |||

| ##INFO=<ID=PCR_FREE,Number=1,Type=String,Description="Consensus of PCR-free votes"> | |||

| ##INFO=<ID=CONSENSUS,Number=1,Type=String,Description="Consensus calls"> | |||

| ##INFO=<ID=CONSENSUS_SEQ,Number=1,Type=String,Description="Consensus sequence"> | |||

| ##FORMAT=<ID=GT,Number=1,Type=String,Description="Genotype"> | |||

| ##FORMAT=<ID=DP,Number=1,Type=String,Description="Depth"> | |||

| ##FORMAT=<ID=ALT,Number=1,Type=Integer,Description="Alternative Depth"> | |||

| ##FORMAT=<ID=AF,Number=1,Type=String,Description="Allele frequency"> | |||

| ##FORMAT=<ID=GQ,Number=1,Type=String,Description="Genotype quality"> | |||

| ##FORMAT=<ID=MQ,Number=1,Type=String,Description="Mapping quality"> | |||

| ##FORMAT=<ID=TWINS,Number=1,Type=String,Description="1 is twins shared, 0 is twins discordant "> | |||

| ##FORMAT=<ID=TRIO5,Number=1,Type=String,Description="1 is LCL7, LCL8 and LCL5 mendelian consistent, 0 is mendelian violation"> | |||

| ##FORMAT=<ID=TRIO6,Number=1,Type=String,Description="1 is LCL7, LCL8 and LCL6 mendelian consistent, 0 is mendelian violation"> | |||

| ##contig=<ID=chr1,length=248956422> | |||

| ##contig=<ID=chr2,length=242193529> | |||

| ##contig=<ID=chr3,length=198295559> | |||

| ##contig=<ID=chr4,length=190214555> | |||

| ##contig=<ID=chr5,length=181538259> | |||

| ##contig=<ID=chr6,length=170805979> | |||

| ##contig=<ID=chr7,length=159345973> | |||

| ##contig=<ID=chr8,length=145138636> | |||

| ##contig=<ID=chr9,length=138394717> | |||

| ##contig=<ID=chr10,length=133797422> | |||

| ##contig=<ID=chr11,length=135086622> | |||

| ##contig=<ID=chr12,length=133275309> | |||

| ##contig=<ID=chr13,length=114364328> | |||

| ##contig=<ID=chr14,length=107043718> | |||

| ##contig=<ID=chr15,length=101991189> | |||

| ##contig=<ID=chr16,length=90338345> | |||

| ##contig=<ID=chr17,length=83257441> | |||

| ##contig=<ID=chr18,length=80373285> | |||

| ##contig=<ID=chr19,length=58617616> | |||

| ##contig=<ID=chr20,length=64444167> | |||

| ##contig=<ID=chr21,length=46709983> | |||

| ##contig=<ID=chr22,length=50818468> | |||

| ##contig=<ID=chrX,length=156040895> | |||

| ''' | |||

| # read in duplication list | |||

| dup = pd.read_table(dup_list,header=None) | |||

| var_dup = dup[0].tolist() | |||

| # output file | |||

| benchmark_file_name = prefix + '_voted.vcf' | |||

| benchmark_outfile = open(benchmark_file_name,'w') | |||

| all_sample_file_name = prefix + '_all_sample_information.vcf' | |||

| all_sample_outfile = open(all_sample_file_name,'w') | |||

| # write VCF | |||

| outputcolumn = '#CHROM\tPOS\tID\tREF\tALT\tQUAL\tFILTER\tINFO\tFORMAT\t' + sample_name + '_benchmark_calls\n' | |||

| benchmark_outfile.write(vcf_header) | |||

| benchmark_outfile.write(outputcolumn) | |||

| outputcolumn_all_sample = '#CHROM\tPOS\tID\tREF\tALT\tQUAL\tFILTER\tINFO\tFORMAT\t'+ \ | |||

| 'Quartet_DNA_BGI_SEQ2000_BGI_1_20180518\tQuartet_DNA_BGI_SEQ2000_BGI_2_20180530\tQuartet_DNA_BGI_SEQ2000_BGI_3_20180530\t' + \ | |||

| 'Quartet_DNA_BGI_T7_WGE_1_20191105\tQuartet_DNA_BGI_T7_WGE_2_20191105\tQuartet_DNA_BGI_T7_WGE_3_20191105\t' + \ | |||

| 'Quartet_DNA_ILM_Nova_ARD_1_20181108\tQuartet_DNA_ILM_Nova_ARD_2_20181108\tQuartet_DNA_ILM_Nova_ARD_3_20181108\t' + \ | |||

| 'Quartet_DNA_ILM_Nova_ARD_4_20190111\tQuartet_DNA_ILM_Nova_ARD_5_20190111\tQuartet_DNA_ILM_Nova_ARD_6_20190111\t' + \ | |||

| 'Quartet_DNA_ILM_Nova_BRG_1_20180930\tQuartet_DNA_ILM_Nova_BRG_2_20180930\tQuartet_DNA_ILM_Nova_BRG_3_20180930\t' + \ | |||

| 'Quartet_DNA_ILM_Nova_WUX_1_20190917\tQuartet_DNA_ILM_Nova_WUX_2_20190917\tQuartet_DNA_ILM_Nova_WUX_3_20190917\t' + \ | |||

| 'Quartet_DNA_ILM_XTen_ARD_1_20170403\tQuartet_DNA_ILM_XTen_ARD_2_20170403\tQuartet_DNA_ILM_XTen_ARD_3_20170403\t' + \ | |||

| 'Quartet_DNA_ILM_XTen_NVG_1_20170329\tQuartet_DNA_ILM_XTen_NVG_2_20170329\tQuartet_DNA_ILM_XTen_NVG_3_20170329\t' + \ | |||

| 'Quartet_DNA_ILM_XTen_WUX_1_20170216\tQuartet_DNA_ILM_XTen_WUX_2_20170216\tQuartet_DNA_ILM_XTen_WUX_3_20170216\n' | |||

| all_sample_outfile.write(vcf_header_all_sample) | |||

| all_sample_outfile.write(outputcolumn_all_sample) | |||

| #function | |||

| def replace_nan(strings_list): | |||

| updated_list = [] | |||

| for i in strings_list: | |||

| if i == '.': | |||

| updated_list.append('.:.:.:.:.:.:.:.:.:.:.:.') | |||

| else: | |||

| updated_list.append(i) | |||

| return updated_list | |||

| def remove_dot(strings_list): | |||

| updated_list = [] | |||

| for i in strings_list: | |||

| if i == '.': | |||

| pass | |||

| else: | |||

| updated_list.append(i) | |||

| return updated_list | |||

| def detected_number(strings): | |||

| gt = [x.split(':')[0] for x in strings] | |||

| percentage = 27 - gt.count('.') | |||

| return(str(percentage)) | |||

| def vote_number(strings,consensus_call): | |||

| gt = [x.split(':')[0] for x in strings] | |||

| gt = [x.replace('.','0/0') for x in gt] | |||

| gt = list(map(gt_uniform,[i for i in gt])) | |||

| vote_num = gt.count(consensus_call) | |||

| return(str(vote_num)) | |||

| def family_vote(strings,consensus_call): | |||

| gt = [x.split(':')[0] for x in strings] | |||

| gt = [x.replace('.','0/0') for x in gt] | |||

| gt = list(map(gt_uniform,[i for i in gt])) | |||

| mendelian = [':'.join(x.split(':')[1:4]) for x in strings] | |||

| indices = [i for i, x in enumerate(gt) if x == consensus_call] | |||

| matched_mendelian = [mendelian[i] for i in indices] | |||

| mendelian_num = matched_mendelian.count('1:1:1') | |||

| return(str(mendelian_num)) | |||

| def gt_uniform(strings): | |||

| uniformed_gt = '' | |||

| allele1 = strings.split('/')[0] | |||

| allele2 = strings.split('/')[1] | |||

| if int(allele1) > int(allele2): | |||

| uniformed_gt = allele2 + '/' + allele1 | |||

| else: | |||

| uniformed_gt = allele1 + '/' + allele2 | |||

| return uniformed_gt | |||

| def decide_by_rep(strings): | |||

| consensus_rep = '' | |||

| mendelian = [':'.join(x.split(':')[1:4]) for x in strings] | |||

| gt = [x.split(':')[0] for x in strings] | |||

| gt = [x.replace('.','0/0') for x in gt] | |||

| # modified gt turn 2/1 to 1/2 | |||

| gt = list(map(gt_uniform,[i for i in gt])) | |||

| # mendelian consistent? | |||

| mendelian_dict = Counter(mendelian) | |||

| highest_mendelian = mendelian_dict.most_common(1) | |||

| candidate_mendelian = highest_mendelian[0][0] | |||

| freq_mendelian = highest_mendelian[0][1] | |||

| if (candidate_mendelian == '1:1:1') and (freq_mendelian >= 2): | |||

| gt_num_dict = Counter(gt) | |||

| highest_gt = gt_num_dict.most_common(1) | |||

| candidate_gt = highest_gt[0][0] | |||

| freq_gt = highest_gt[0][1] | |||

| if (candidate_gt != '0/0') and (freq_gt >= 2): | |||

| consensus_rep = candidate_gt | |||

| elif (candidate_gt == '0/0') and (freq_gt >= 2): | |||

| consensus_rep = '0/0' | |||

| else: | |||

| consensus_rep = 'inconGT' | |||

| elif (candidate_mendelian == '') and (freq_mendelian >= 2): | |||

| consensus_rep = 'noInfo' | |||

| else: | |||

| consensus_rep = 'inconMen' | |||

| return consensus_rep | |||

| def main(): | |||

| for line in fileinput.input(multi_sample_vcf): | |||

| headline = re.match(r'^#',line) | |||

| if headline is not None: | |||

| pass | |||

| else: | |||

| line = line.strip() | |||

| strings = line.split('\t') | |||

| variant_id = '_'.join([strings[0],strings[1]]) | |||

| # check if the variants location is duplicated | |||

| if variant_id in var_dup: | |||

| strings[7] = strings[7] + ';DUP' | |||

| outLine = '\t'.join(strings) + '\n' | |||

| all_sample_outfile.write(outLine) | |||

| else: | |||

| # pre-define | |||

| pcr_consensus = '.' | |||

| pcr_free_consensus = '.' | |||

| consensus_call = '.' | |||

| consensus_alt_seq = '.' | |||

| # pcr | |||

| strings[9:] = replace_nan(strings[9:]) | |||

| pcr = itemgetter(*[9,10,11,27,28,29,30,31,32,33,34,35])(strings) | |||

| SEQ2000 = decide_by_rep(pcr[0:3]) | |||

| XTen_ARD = decide_by_rep(pcr[3:6]) | |||

| XTen_NVG = decide_by_rep(pcr[6:9]) | |||

| XTen_WUX = decide_by_rep(pcr[9:12]) | |||

| sequence_site = [SEQ2000,XTen_ARD,XTen_NVG,XTen_WUX] | |||

| sequence_dict = Counter(sequence_site) | |||

| highest_sequence = sequence_dict.most_common(1) | |||

| candidate_sequence = highest_sequence[0][0] | |||

| freq_sequence = highest_sequence[0][1] | |||

| if freq_sequence > 2: | |||

| pcr_consensus = candidate_sequence | |||

| else: | |||

| pcr_consensus = 'inconSequenceSite' | |||

| # pcr-free | |||

| pcr_free = itemgetter(*[12,13,14,15,16,17,18,19,20,21,22,23,24,25,26])(strings) | |||

| T7_WGE = decide_by_rep(pcr_free[0:3]) | |||

| Nova_ARD_1 = decide_by_rep(pcr_free[3:6]) | |||

| Nova_ARD_2 = decide_by_rep(pcr_free[6:9]) | |||

| Nova_BRG = decide_by_rep(pcr_free[9:12]) | |||

| Nova_WUX = decide_by_rep(pcr_free[12:15]) | |||

| sequence_site = [T7_WGE,Nova_ARD_1,Nova_ARD_2,Nova_BRG,Nova_WUX] | |||

| sequence_dict = Counter(sequence_site) | |||

| highest_sequence = sequence_dict.most_common(1) | |||

| candidate_sequence = highest_sequence[0][0] | |||

| freq_sequence = highest_sequence[0][1] | |||

| if freq_sequence > 3: | |||

| pcr_free_consensus = candidate_sequence | |||

| else: | |||

| pcr_free_consensus = 'inconSequenceSite' | |||

| # pcr and pcr-free | |||

| tag = ['inconGT','noInfo','inconMen','inconSequenceSite'] | |||

| if (pcr_consensus == pcr_free_consensus) and (pcr_consensus not in tag) and (pcr_consensus != '0/0'): | |||

| consensus_call = pcr_consensus | |||

| VOTED = vote_number(strings[9:],consensus_call) | |||

| strings[7] = strings[7] + ';VOTED=' + VOTED | |||

| DETECTED = detected_number(strings[9:]) | |||

| strings[7] = strings[7] + ';DETECTED=' + DETECTED | |||

| FAM = family_vote(strings[9:],consensus_call) | |||

| strings[7] = strings[7] + ';FAM=' + FAM | |||

| # Delete multiple alternative genotype to necessary expression | |||

| alt = strings[4] | |||

| alt_gt = alt.split(',') | |||

| if len(alt_gt) > 1: | |||

| allele1 = consensus_call.split('/')[0] | |||

| allele2 = consensus_call.split('/')[1] | |||

| if allele1 == '0': | |||

| allele2_seq = alt_gt[int(allele2) - 1] | |||

| consensus_alt_seq = allele2_seq | |||

| consensus_call = '0/1' | |||

| else: | |||

| allele1_seq = alt_gt[int(allele1) - 1] | |||

| allele2_seq = alt_gt[int(allele2) - 1] | |||

| if int(allele1) > int(allele2): | |||

| consensus_alt_seq = allele2_seq + ',' + allele1_seq | |||

| consensus_call = '1/2' | |||

| elif int(allele1) < int(allele2): | |||

| consensus_alt_seq = allele1_seq + ',' + allele2_seq | |||

| consensus_call = '1/2' | |||

| else: | |||

| consensus_alt_seq = allele1_seq | |||

| consensus_call = '1/1' | |||

| else: | |||

| consensus_alt_seq = alt | |||

| # GT:DP:ALT:AF:GQ:QD:MQ:FS:QUAL | |||

| # GT:TWINS:TRIO5:TRIO6:DP:ALT:AF:GQ:QD:MQ:FS:QUAL:rawGT | |||

| # DP | |||

| DP = [x.split(':')[4] for x in strings[9:]] | |||

| DP = remove_dot(DP) | |||

| DP = [int(x) for x in DP] | |||

| ALL_DP = sum(DP) | |||

| # AF | |||

| ALT = [x.split(':')[5] for x in strings[9:]] | |||

| ALT = remove_dot(ALT) | |||

| ALT = [int(x) for x in ALT] | |||

| ALL_ALT = sum(ALT) | |||

| ALL_AF = round(ALL_ALT/ALL_DP,2) | |||

| # GQ | |||

| GQ = [x.split(':')[7] for x in strings[9:]] | |||

| GQ = remove_dot(GQ) | |||

| GQ = [int(x) for x in GQ] | |||

| GQ_MEAN = round(mean(GQ),2) | |||

| # QD | |||

| QD = [x.split(':')[8] for x in strings[9:]] | |||

| QD = remove_dot(QD) | |||

| QD = [float(x) for x in QD] | |||

| QD_MEAN = round(mean(QD),2) | |||

| # MQ | |||

| MQ = [x.split(':')[9] for x in strings[9:]] | |||

| MQ = remove_dot(MQ) | |||

| MQ = [float(x) for x in MQ] | |||

| MQ_MEAN = round(mean(MQ),2) | |||

| # FS | |||

| FS = [x.split(':')[10] for x in strings[9:]] | |||

| FS = remove_dot(FS) | |||

| FS = [float(x) for x in FS] | |||

| FS_MEAN = round(mean(FS),2) | |||

| # QUAL | |||

| QUAL = [x.split(':')[11] for x in strings[9:]] | |||

| QUAL = remove_dot(QUAL) | |||

| QUAL = [float(x) for x in QUAL] | |||

| QUAL_MEAN = round(mean(QUAL),2) | |||

| # benchmark output | |||

| output_format = consensus_call + ':' + str(ALL_DP) + ':' + str(ALL_ALT) + ':' + str(ALL_AF) + ':' + str(GQ_MEAN) + ':' + str(QD_MEAN) + ':' + str(MQ_MEAN) + ':' + str(FS_MEAN) + ':' + str(QUAL_MEAN) | |||

| outLine = strings[0] + '\t' + strings[1] + '\t' + strings[2] + '\t' + strings[3] + '\t' + consensus_alt_seq + '\t' + '.' + '\t' + '.' + '\t' + strings[7] + '\t' + 'GT:DP:ALT:AF:GQ:QD:MQ:FS:QUAL' + '\t' + output_format + '\n' | |||

| benchmark_outfile.write(outLine) | |||

| # all sample output | |||

| strings[7] = strings[7] + ';ALL_ALT=' + str(ALL_ALT) + ';ALL_DP=' + str(ALL_DP) + ';ALL_AF=' + str(ALL_AF) \ | |||

| + ';GQ_MEAN=' + str(GQ_MEAN) + ';QD_MEAN=' + str(QD_MEAN) + ';MQ_MEAN=' + str(MQ_MEAN) + ';FS_MEAN=' + str(FS_MEAN) \ | |||

| + ';QUAL_MEAN=' + str(QUAL_MEAN) + ';PCR=' + consensus_call + ';PCR_FREE=' + consensus_call + ';CONSENSUS=' + consensus_call \ | |||

| + ';CONSENSUS_SEQ=' + consensus_alt_seq | |||

| all_sample_outLine = '\t'.join(strings) + '\n' | |||

| all_sample_outfile.write(all_sample_outLine) | |||

| elif (pcr_consensus in tag) and (pcr_free_consensus in tag): | |||

| consensus_call = 'filtered' | |||

| DETECTED = detected_number(strings[9:]) | |||

| strings[7] = strings[7] + ';DETECTED=' + DETECTED | |||

| strings[7] = strings[7] + ';CONSENSUS=' + consensus_call | |||

| all_sample_outLine = '\t'.join(strings) + '\n' | |||

| all_sample_outfile.write(all_sample_outLine) | |||

| elif ((pcr_consensus == '0/0') or (pcr_consensus in tag)) and ((pcr_free_consensus not in tag) and (pcr_free_consensus != '0/0')): | |||

| consensus_call = 'pcr-free-speicifc' | |||

| DETECTED = detected_number(strings[9:]) | |||

| strings[7] = strings[7] + ';DETECTED=' + DETECTED | |||

| strings[7] = strings[7] + ';CONSENSUS=' + consensus_call | |||

| all_sample_outLine = '\t'.join(strings) + '\n' | |||

| all_sample_outfile.write(all_sample_outLine) | |||

| elif ((pcr_free_consensus == '0/0') or (pcr_free_consensus in tag)) and ((pcr_consensus not in tag) and (pcr_consensus != '0/0')): | |||

| consensus_call = 'pcr-speicifc' | |||

| DETECTED = detected_number(strings[9:]) | |||

| strings[7] = strings[7] + ';DETECTED=' + DETECTED | |||

| strings[7] = strings[7] + ';CONSENSUS=' + consensus_call + ';PCR=' + pcr_consensus + ';PCR_FREE=' + pcr_free_consensus | |||

| all_sample_outLine = '\t'.join(strings) + '\n' | |||

| all_sample_outfile.write(all_sample_outLine) | |||

| elif (pcr_consensus == '0/0') and (pcr_free_consensus == '0/0'): | |||

| consensus_call = 'confirm for parents' | |||

| DETECTED = detected_number(strings[9:]) | |||

| strings[7] = strings[7] + ';DETECTED=' + DETECTED | |||

| strings[7] = strings[7] + ';CONSENSUS=' + consensus_call | |||

| all_sample_outLine = '\t'.join(strings) + '\n' | |||

| all_sample_outfile.write(all_sample_outLine) | |||

| else: | |||

| consensus_call = 'filtered' | |||

| DETECTED = detected_number(strings[9:]) | |||

| strings[7] = strings[7] + ';DETECTED=' + DETECTED | |||

| strings[7] = strings[7] + ';CONSENSUS=' + consensus_call | |||

| all_sample_outLine = '\t'.join(strings) + '\n' | |||

| all_sample_outfile.write(all_sample_outLine) | |||

| if __name__ == '__main__': | |||

| main() | |||
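
The voting in `decide_by_rep` and `main` is a two-level majority: replicates are first reduced to one call per sequencing site, and the site calls are then reduced to one consensus. The sketch below shows only that skeleton and makes several simplifying assumptions: it ignores the Mendelian `1:1:1` check, expects concrete diploid genotypes such as `0/1` (no `.`), and uses hypothetical names (`normalize_gt`, `majority`, `two_level_vote`) and thresholds passed as parameters.

```python
from collections import Counter


def normalize_gt(gt):
    """Order the alleles of an unphased genotype so that '2/1' becomes '1/2'."""
    first, second = gt.split('/')
    return '/'.join(sorted((first, second), key=int))


def majority(calls, min_votes):
    """Return the most common call if it has at least min_votes, else None."""
    call, count = Counter(calls).most_common(1)[0]
    return call if count >= min_votes else None


def two_level_vote(sites, rep_min=2, site_min=3):
    """Vote within each site's replicates, then across the site-level calls."""
    site_calls = [
        majority([normalize_gt(gt) for gt in replicates], rep_min)
        for replicates in sites
    ]
    return majority(site_calls, site_min)
```

For example, with four sites of three replicates each, `two_level_vote` returns a genotype only when at least three sites agree, which corresponds to the `freq_sequence > 2` test used for the PCR group.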

+ 42

- 0

codescripts/high_voted_mendelian_bed.py

| @@ -0,0 +1,42 @@ | |||

| import pandas as pd | |||

| import sys, argparse, os | |||

| mut = pd.read_table(sys.argv[1]) | |||

| outFile = open(sys.argv[2],'w') | |||

| for row in mut.itertuples(): | |||

| #d5 | |||

| if ',' in row.V4: | |||

| alt = row.V4.split(',') | |||

| alt_len = [len(i) for i in alt] | |||

| alt_max = max(alt_len) | |||

| else: | |||

| alt_max = len(row.V4) | |||

| #d6 | |||

| alt = alt_max | |||

| ref = row.V3 | |||

| pos = int(row.V2) | |||

| if len(ref) == 1 and alt == 1: | |||

| StartPos = int(pos) -1 | |||

| EndPos = int(pos) | |||

| cate = 'SNV' | |||

| elif len(ref) > alt: | |||

| StartPos = int(pos) - 1 | |||

| EndPos = int(pos) + (len(ref) - 1) | |||

| cate = 'INDEL' | |||

| elif alt > len(ref): | |||

| StartPos = int(pos) - 1 | |||

| EndPos = int(pos) + (alt - 1) | |||

| cate = 'INDEL' | |||

| elif len(ref) == alt: | |||

| StartPos = int(pos) - 1 | |||

| EndPos = int(pos) + (alt - 1) | |||

| cate = 'INDEL' | |||

| outline = row.V1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\t' + str(row.V2) + '\t' + cate + '\n' | |||

| outFile.write(outline) | |||
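
The four branches above all compute the same interval: a 0-based start at `pos - 1` and an end that covers the longer of the reference and the longest alternative allele. A compact sketch of that conversion, with a hypothetical function name `vcf_to_bed`, could look like this:

```python
def vcf_to_bed(chrom, pos, ref, alt):
    """Convert a 1-based VCF record to a 0-based, half-open BED interval.

    The interval spans the longer of REF and the longest ALT allele.
    """
    longest_alt = max(len(allele) for allele in alt.split(','))
    span = max(len(ref), longest_alt)
    start = pos - 1
    end = pos + span - 1
    category = 'SNV' if len(ref) == 1 and longest_alt == 1 else 'INDEL'
    return chrom, start, end, category
```

As in the script, any record that is not a single-base substitution is labelled `INDEL`, including equal-length multi-base substitutions.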

+ 50

- 0

codescripts/how_many_samples.py

| @@ -0,0 +1,50 @@ | |||

| import pandas as pd | |||

| import sys, argparse, os | |||

| from operator import itemgetter | |||

| parser = argparse.ArgumentParser(description="This script is to get how many samples") | |||

| parser.add_argument('-sample', '--sample', type=str, help='quartet_sample', required=True) | |||

| parser.add_argument('-rep', '--rep', type=str, help='quartet_rep', required=True) | |||

| args = parser.parse_args() | |||

| # Rename input: | |||

| sample = args.sample | |||

| rep = args.rep | |||

| quartet_sample = pd.read_table(sample,header=None) | |||

| quartet_sample = list(quartet_sample[0]) | |||

| quartet_rep = pd.read_table(rep,header=None) | |||

| quartet_rep = quartet_rep[0] | |||

| #tags | |||

| sister_tag = 'false' | |||

| quartet_tag = 'false' | |||

| quartet_rep_unique = list(set(quartet_rep)) | |||

| single_rep = [i for i in range(len(quartet_rep)) if quartet_rep[i] == quartet_rep_unique[0]] | |||

| single_batch_sample = [quartet_sample[i] for i in single_rep] | |||

| num = len(single_batch_sample) | |||

| if num == 1: | |||

| sister_tag = 'false' | |||

| quartet_tag = 'false' | |||

| elif num == 2: | |||

| if set(single_batch_sample) == set(['LCL5','LCL6']): | |||

| sister_tag = 'true' | |||

| quartet_tag = 'false' | |||

| elif num == 3: | |||

| if ('LCL5' in single_batch_sample) and ('LCL6' in single_batch_sample): | |||

| sister_tag = 'true' | |||

| quartet_tag = 'false' | |||

| elif num == 4: | |||

| if set(single_batch_sample) == set(['LCL5','LCL6','LCL7','LCL8']): | |||

| sister_tag = 'false' | |||

| quartet_tag = 'true' | |||

| sister_outfile = open('sister_tag','w') | |||

| quartet_outfile = open('quartet_tag','w') | |||

| sister_outfile.write(sister_tag) | |||

| quartet_outfile.write(quartet_tag) | |||
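
The tag decision above can be expressed with set comparisons. This is a simplified sketch under an assumption the script does not make: it looks only at which sample names are present in the batch and ignores how many times each appears. The function name `batch_tags` is hypothetical.

```python
QUARTET = {'LCL5', 'LCL6', 'LCL7', 'LCL8'}
TWINS = {'LCL5', 'LCL6'}


def batch_tags(samples):
    """Return (sister_tag, quartet_tag) for the sample names in one batch.

    quartet_tag is True when all four Quartet samples are present;
    sister_tag is True when both twins are present but the quartet is incomplete.
    """
    present = set(samples)
    quartet = present == QUARTET
    sister = (not quartet) and TWINS <= present
    return sister, quartet
```

The two flags are mutually exclusive here, matching the script, which sets `sister_tag` to `'false'` whenever the full quartet is found.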

+ 42

- 0

codescripts/lcl5_all_called_variants.py

| @@ -0,0 +1,42 @@ | |||

| from __future__ import division | |||

| import sys, argparse, os | |||

| import pandas as pd | |||

| from collections import Counter | |||

| # input arguments | |||

| parser = argparse.ArgumentParser(description="this script splits LCL5 sites into those called in at least one replicate and those that are 0/0 or ./. in all 27 replicates") | |||

| parser.add_argument('-vcf', '--vcf', type=str, help='merged multiple sample vcf', required=True) | |||

| args = parser.parse_args() | |||

| vcf = args.vcf | |||

| lcl5_outfile = open('LCL5_all_variants.txt','w') | |||

| filtered_outfile = open('LCL5_filtered_variants.txt','w') | |||

| vcf_dat = pd.read_table(vcf) | |||

| for row in vcf_dat.itertuples(): | |||

| lcl5_list = [row.Quartet_DNA_BGI_SEQ2000_BGI_LCL5_1_20180518,row.Quartet_DNA_BGI_SEQ2000_BGI_LCL5_2_20180530,row.Quartet_DNA_BGI_SEQ2000_BGI_LCL5_3_20180530, \ | |||

| row.Quartet_DNA_BGI_T7_WGE_LCL5_1_20191105,row.Quartet_DNA_BGI_T7_WGE_LCL5_2_20191105,row.Quartet_DNA_BGI_T7_WGE_LCL5_3_20191105, \ | |||

| row.Quartet_DNA_ILM_Nova_ARD_LCL5_1_20181108,row.Quartet_DNA_ILM_Nova_ARD_LCL5_2_20181108,row.Quartet_DNA_ILM_Nova_ARD_LCL5_3_20181108, \ | |||

| row.Quartet_DNA_ILM_Nova_ARD_LCL5_4_20190111,row.Quartet_DNA_ILM_Nova_ARD_LCL5_5_20190111,row.Quartet_DNA_ILM_Nova_ARD_LCL5_6_20190111, \ | |||

| row.Quartet_DNA_ILM_Nova_BRG_LCL5_1_20180930,row.Quartet_DNA_ILM_Nova_BRG_LCL5_2_20180930,row.Quartet_DNA_ILM_Nova_BRG_LCL5_3_20180930, \ | |||

| row.Quartet_DNA_ILM_Nova_WUX_LCL5_1_20190917,row.Quartet_DNA_ILM_Nova_WUX_LCL5_2_20190917,row.Quartet_DNA_ILM_Nova_WUX_LCL5_3_20190917, \ | |||

| row.Quartet_DNA_ILM_XTen_ARD_LCL5_1_20170403,row.Quartet_DNA_ILM_XTen_ARD_LCL5_2_20170403,row.Quartet_DNA_ILM_XTen_ARD_LCL5_3_20170403, \ | |||

| row.Quartet_DNA_ILM_XTen_NVG_LCL5_1_20170329,row.Quartet_DNA_ILM_XTen_NVG_LCL5_2_20170329,row.Quartet_DNA_ILM_XTen_NVG_LCL5_3_20170329, \ | |||

| row.Quartet_DNA_ILM_XTen_WUX_LCL5_1_20170216,row.Quartet_DNA_ILM_XTen_WUX_LCL5_2_20170216,row.Quartet_DNA_ILM_XTen_WUX_LCL5_3_20170216] | |||

| lcl5_vcf_gt = [x.split(':')[0] for x in lcl5_list] | |||

| lcl5_gt=[item.replace('./.', '0/0') for item in lcl5_vcf_gt] | |||

| gt_dict = Counter(lcl5_gt) | |||

| highest_gt = gt_dict.most_common(1) | |||

| candidate_gt = highest_gt[0][0] | |||

| freq_gt = highest_gt[0][1] | |||

| output = row._1 + '\t' + str(row.POS) + '\t' + '\t'.join(lcl5_gt) + '\n' | |||

| if (candidate_gt == '0/0') and (freq_gt == 27): | |||

| filtered_outfile.write(output) | |||

| else: | |||

| lcl5_outfile.write(output) | |||

+ 6

- 0

codescripts/linux_command.sh

| @@ -0,0 +1,6 @@ | |||

| awk '{print $1"\t"$2"\t.\t"$35"\t"$7"\t.\t.\t.\tGT\t"$6}' benchmark.men.vote.diffbed.filtered | grep -v '2_y' > LCL5.body | |||

| awk '{print $1"\t"$2"\t.\t"$35"\t"$15"\t.\t.\t.\tGT\t"$14}' benchmark.men.vote.diffbed.filtered | grep -v '2_y' > LCL6.body | |||

| awk '{print $1"\t"$2"\t.\t"$35"\t"$23"\t.\t.\t.\tGT\t"$22}' benchmark.men.vote.diffbed.filtered | grep -v '2_y' > LCL7.body | |||

| awk '{print $1"\t"$2"\t.\t"$35"\t"$31"\t.\t.\t.\tGT\t"$30}' benchmark.men.vote.diffbed.filtered | grep -v '2_y' > LCL8.body | |||

| for i in *txt; do awk '{ if ((length($3) == 1) && (length($4) == 1)) { print } }' "$i" | grep -v '#' | cut -f3,4 | sort | uniq -c | sed 's/\s\+/\t/g' | cut -f2 > "$i.mut"; done | |||
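The last shell loop counts how often each pair of single-character values occurs in columns 3 and 4 of every `*txt` file. A rough Python equivalent is sketched below. It assumes the files are tab-delimited and that columns 3 and 4 hold the reference and alternate alleles; the function name `count_single_base_pairs` is hypothetical. Unlike the shell pipeline, which keeps only the counts after the final `cut -f2`, the sketch keeps the allele pair next to each count.

```python
from collections import Counter


def count_single_base_pairs(lines):
    """Count (column 3, column 4) pairs where both values are one character long."""
    counts = Counter()
    for line in lines:
        # Mirror `grep -v '#'`: skip any line that contains a '#'.
        if "#" in line:
            continue
        cols = line.rstrip("\n").split("\t")
        if len(cols) < 4:
            continue
        # Mirror the awk length filter on columns 3 and 4.
        if len(cols[2]) == 1 and len(cols[3]) == 1:
            counts[(cols[2], cols[3])] += 1
    return counts


# Example with a few hypothetical rows.
rows = ["chr1\t10\tA\tG\n", "chr1\t20\tA\tG\n", "chr1\t30\tAT\tA\n", "#CHROM\tPOS\tREF\tALT\n"]
pair_counts = count_single_base_pairs(rows)
```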

+ 129

- 0

codescripts/merge_mendelian_vcfinfo.py


| @@ -0,0 +1,129 @@ | |||

| from __future__ import division | |||

| import pandas as pd | |||

| import sys, argparse, os | |||

| import fileinput | |||

| import re | |||

| # input arguments | |||

| parser = argparse.ArgumentParser(description="Get the final high-confidence calls and the information of all replicates") | |||

| parser.add_argument('-vcfInfo', '--vcfInfo', type=str, help='The txt file of variant information, named prefix__variant_quality_location.txt', required=True) | |||

| parser.add_argument('-mendelianInfo', '--mendelianInfo', type=str, help='The merged Mendelian information of all samples', required=True) | |||

| parser.add_argument('-sample', '--sample_name', type=str, help='Name of the Quartet sample', required=True) | |||

| args = parser.parse_args() | |||

| vcfInfo = args.vcfInfo | |||

| mendelianInfo = args.mendelianInfo | |||

| sample_name = args.sample_name | |||

| # sample column layout: GT:TWINS:TRIO5:TRIO6:DP:ALT:AF:GQ:QD:MQ:FS:QUAL | |||

| vcf_header = '''##fileformat=VCFv4.2 | |||

| ##fileDate=20200331 | |||

| ##source=high_confidence_calls_intergration(choppy app) | |||

| ##reference=GRCh38.d1.vd1 | |||

| ##FORMAT=<ID=GT,Number=1,Type=String,Description="Genotype"> | |||

| ##FORMAT=<ID=TWINS,Number=1,Type=Integer,Description="1 for sister consistent, 0 for sister different"> | |||

| ##FORMAT=<ID=TRIO5,Number=1,Type=Integer,Description="1 for LCL7, LCL8 and LCL5 mendelian consistent, 0 for family violation"> | |||

| ##FORMAT=<ID=TRIO6,Number=1,Type=Integer,Description="1 for LCL7, LCL8 and LCL6 mendelian consistent, 0 for family violation"> | |||

| ##FORMAT=<ID=DP,Number=1,Type=Integer,Description="Depth"> | |||

| ##FORMAT=<ID=ALT,Number=1,Type=Integer,Description="Alternative Depth"> | |||

| ##FORMAT=<ID=AF,Number=1,Type=Float,Description="Allele frequency"> | |||

| ##FORMAT=<ID=GQ,Number=1,Type=Integer,Description="Genotype quality"> | |||

| ##FORMAT=<ID=QD,Number=1,Type=Float,Description="Variant Confidence/Quality by Depth"> | |||

| ##FORMAT=<ID=MQ,Number=1,Type=Float,Description="Mapping quality"> | |||

| ##FORMAT=<ID=FS,Number=1,Type=Float,Description="Phred-scaled p-value using Fisher's exact test to detect strand bias"> | |||

| ##FORMAT=<ID=QUAL,Number=1,Type=Float,Description="variant quality"> | |||

| ##contig=<ID=chr1,length=248956422> | |||

| ##contig=<ID=chr2,length=242193529> | |||

| ##contig=<ID=chr3,length=198295559> | |||

| ##contig=<ID=chr4,length=190214555> | |||

| ##contig=<ID=chr5,length=181538259> | |||

| ##contig=<ID=chr6,length=170805979> | |||

| ##contig=<ID=chr7,length=159345973> | |||

| ##contig=<ID=chr8,length=145138636> | |||

| ##contig=<ID=chr9,length=138394717> | |||

| ##contig=<ID=chr10,length=133797422> | |||

| ##contig=<ID=chr11,length=135086622> | |||

| ##contig=<ID=chr12,length=133275309> | |||

| ##contig=<ID=chr13,length=114364328> | |||

| ##contig=<ID=chr14,length=107043718> | |||

| ##contig=<ID=chr15,length=101991189> | |||

| ##contig=<ID=chr16,length=90338345> | |||

| ##contig=<ID=chr17,length=83257441> | |||

| ##contig=<ID=chr18,length=80373285> | |||

| ##contig=<ID=chr19,length=58617616> | |||

| ##contig=<ID=chr20,length=64444167> | |||

| ##contig=<ID=chr21,length=46709983> | |||

| ##contig=<ID=chr22,length=50818468> | |||

| ##contig=<ID=chrX,length=156040895> | |||

| ''' | |||

| # output file | |||

| file_name = sample_name + '_mendelian_vcfInfo.vcf' | |||

| outfile = open(file_name,'w') | |||

| outputcolumn = '#CHROM\tPOS\tID\tREF\tALT\tQUAL\tFILTER\tINFO\tFORMAT\t' + sample_name + '\n' | |||

| outfile.write(vcf_header) | |||

| outfile.write(outputcolumn) | |||

| # input files | |||

| vcf_info = pd.read_table(vcfInfo) | |||

| mendelian_info = pd.read_table(mendelianInfo) | |||

| merged_df = pd.merge(vcf_info, mendelian_info, how='outer', left_on=['#CHROM','POS'], right_on = ['#CHROM','POS']) | |||

| merged_df = merged_df.fillna('.') | |||

| # parse a VCF INFO string (key=value pairs separated by ';') into a dict | |||

| def parse_INFO(info): | |||

| strings = info.strip().split(';') | |||

| keys = [] | |||

| values = [] | |||

| for i in strings: | |||

| kv = i.split('=') | |||

| if len(kv) == 1: | |||

| # flag-style key without a value (e.g. DB): store '1' instead of failing on kv[1] | |||

| keys.append(kv[0]) | |||

| values.append('1') | |||

| else: | |||

| keys.append(kv[0]) | |||

| values.append(kv[1]) | |||

| infoDict = dict(zip(keys, values)) | |||

| return infoDict | |||

| # write one VCF record per merged site | |||

| for row in merged_df.itertuples(): | |||

| if row[18] != '.': | |||

| # format | |||

| # GT:TWINS:TRIO5:TRIO6:DP:ALT:AF:GQ:QD:MQ:FS:QUAL | |||

| FORMAT_x = row[10].split(':') | |||

| ALT = int(FORMAT_x[1].split(',')[1]) | |||

| if int(FORMAT_x[2]) != 0: | |||

| AF = round(ALT/int(FORMAT_x[2]),2) | |||

| else: | |||

| AF = '.' | |||

| INFO_x = parse_INFO(row.INFO_x) | |||

| if FORMAT_x[2] == '0': | |||

| INFO_x['QD'] = '.' | |||

| else: | |||

| pass | |||

| FORMAT = row[18] + ':' + FORMAT_x[2] + ':' + str(ALT) + ':' + str(AF) + ':' + FORMAT_x[3] + ':' + INFO_x['QD'] + ':' + INFO_x['MQ'] + ':' + INFO_x['FS'] + ':' + str(row.QUAL_x) | |||

| # outline | |||

| outline = row._1 + '\t' + str(row.POS) + '\t' + row.ID_x + '\t' + row.REF_y + '\t' + row.ALT_y + '\t' + '.' + '\t' + '.' + '\t' + '.' + '\t' + 'GT:TWINS:TRIO5:TRIO6:DP:ALT:AF:GQ:QD:MQ:FS:QUAL' + '\t' + FORMAT + '\n' | |||

| else: | |||

| rawGT = row[10].split(':') | |||

| FORMAT_x = row[10].split(':') | |||

| ALT = int(FORMAT_x[1].split(',')[1]) | |||

| if int(FORMAT_x[2]) != 0: | |||

| AF = round(ALT/int(FORMAT_x[2]),2) | |||

| else: | |||

| AF = '.' | |||

| INFO_x = parse_INFO(row.INFO_x) | |||

| if FORMAT_x[2] == '0': | |||

| INFO_x['QD'] = '.' | |||

| else: | |||

| pass | |||

| FORMAT = '.:.:.:.' + ':' + FORMAT_x[2] + ':' + str(ALT) + ':' + str(AF) + ':' + FORMAT_x[3] + ':' + INFO_x['QD'] + ':' + INFO_x['MQ'] + ':' + INFO_x['FS'] + ':' + str(row.QUAL_x) + ':' + rawGT[0] | |||

| # outline | |||

| outline = row._1 + '\t' + str(row.POS) + '\t' + row.ID_x + '\t' + row.REF_x + '\t' + row.ALT_x + '\t' + '.' + '\t' + '.' + '\t' + '.' + '\t' + 'GT:TWINS:TRIO5:TRIO6:DP:ALT:AF:GQ:QD:MQ:FS:QUAL:rawGT' + '\t' + FORMAT + '\n' | |||

| outfile.write(outline) | |||
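The allele-frequency calculation used in both branches above can be isolated as follows. This is a minimal sketch: it assumes the sample column is laid out as `GT:AD:DP:GQ` (inferred from how the script indexes `FORMAT_x`), and the function name `allele_fraction` is hypothetical.

```python
def allele_fraction(sample_field):
    """Return (alt_depth, depth, af) for one sample column.

    Assumes the layout GT:AD:DP:GQ, where AD is "ref_depth,alt_depth".
    af is '.' when the total depth is zero, matching the script.
    """
    parts = sample_field.split(":")
    alt_depth = int(parts[1].split(",")[1])
    depth = int(parts[2])
    # Avoid division by zero at sites without coverage.
    af = round(alt_depth / depth, 2) if depth != 0 else "."
    return alt_depth, depth, af


covered = allele_fraction("0/1:30,12:42:99")   # (12, 42, 0.29)
uncovered = allele_fraction("./.:0,0:0:0")     # (0, 0, '.')
```

Because the result is either a float or the string `'.'`, it has to be converted with `str()` before being joined into the output line, as the script does.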

+ 71

- 0

codescripts/merge_two_family.py


| @@ -0,0 +1,71 @@ | |||

| from __future__ import division | |||

| import pandas as pd | |||

| import sys, argparse, os | |||

| import fileinput | |||

| import re | |||

| # input arguments | |||

| parser = argparse.ArgumentParser(description="Extract Mendelian concordance information") | |||

| parser.add_argument('-LCL5', '--LCL5', type=str, help='LCL5 family info', required=True) | |||

| parser.add_argument('-LCL6', '--LCL6', type=str, help='LCL6 family info', required=True) | |||

| parser.add_argument('-family', '--family', type=str, help='family name', required=True) | |||

| args = parser.parse_args() | |||

| lcl5 = args.LCL5 | |||

| lcl6 = args.LCL6 | |||

| family = args.family | |||

| # output file | |||

| family_name = family + '.txt' | |||

| family_file = open(family_name,'w') | |||

| # input files | |||

| lcl5_dat = pd.read_table(lcl5) | |||

| lcl6_dat = pd.read_table(lcl6) | |||

| merged_df = pd.merge(lcl5_dat, lcl6_dat, how='outer', left_on=['#CHROM','POS'], right_on = ['#CHROM','POS']) | |||

| def alt_seq(alt, genotype): | |||

| if genotype == './.': | |||

| seq = './.' | |||

| elif genotype == '0/0': | |||

| seq = '0/0' | |||

| else: | |||

| alt = alt.split(',') | |||

| genotype = genotype.split('/') | |||

| if genotype[0] == '0': | |||

| allele2 = alt[int(genotype[1]) - 1] | |||

| seq = '0/' + allele2 | |||

| else: | |||

| allele1 = alt[int(genotype[0]) - 1] | |||

| allele2 = alt[int(genotype[1]) - 1] | |||