комит

ad73c9d27a

60 измењених фајлова са 5029 додато и 0 уклоњено

+ 251

- 0

README.md

Прегледај датотеку

| @@ -0,0 +1,251 @@ | |||

| # Quality control of germline variants calling results using a Chinese Quartet family | |||

| > Author: Run Luyao | |||

| > | |||

| > E-mail:18110700050@fudan.edu.cn | |||

| > | |||

| > Git: http://47.103.223.233/renluyao/quartet_dna_quality_control_wgs_big_pipeline | |||

| > | |||

| > Last Updates: 2022/10/31 | |||

| ## Install | |||

| ``` | |||

| open-choppy-env | |||

| choppy install renluyao/quartet_dna_quality_control_big_pipeline | |||

| ``` | |||

| ## Introduction | |||

| ###Chinese Quartet DNA reference materials | |||

| With the rapid development of sequencing technology and the dramatic decrease of sequencing costs, DNA sequencing has been widely used in scientific research, diagnosis of and treatment selection for human diseases. However, due to the lack of effective quality assessment and control of the high-throughput omics data generation and analysis processes, variants calling results are seriously inconsistent among different technical replicates, batches, laboratories, sequencing platforms, and analysis pipelines, resulting in irreproducible scientific results and conclusions, huge waste of resources, and even endangering the life and health of patients. Therefore, reference materials for quality control of the whole process from omics data generation to data analysis are urgently needed. | |||

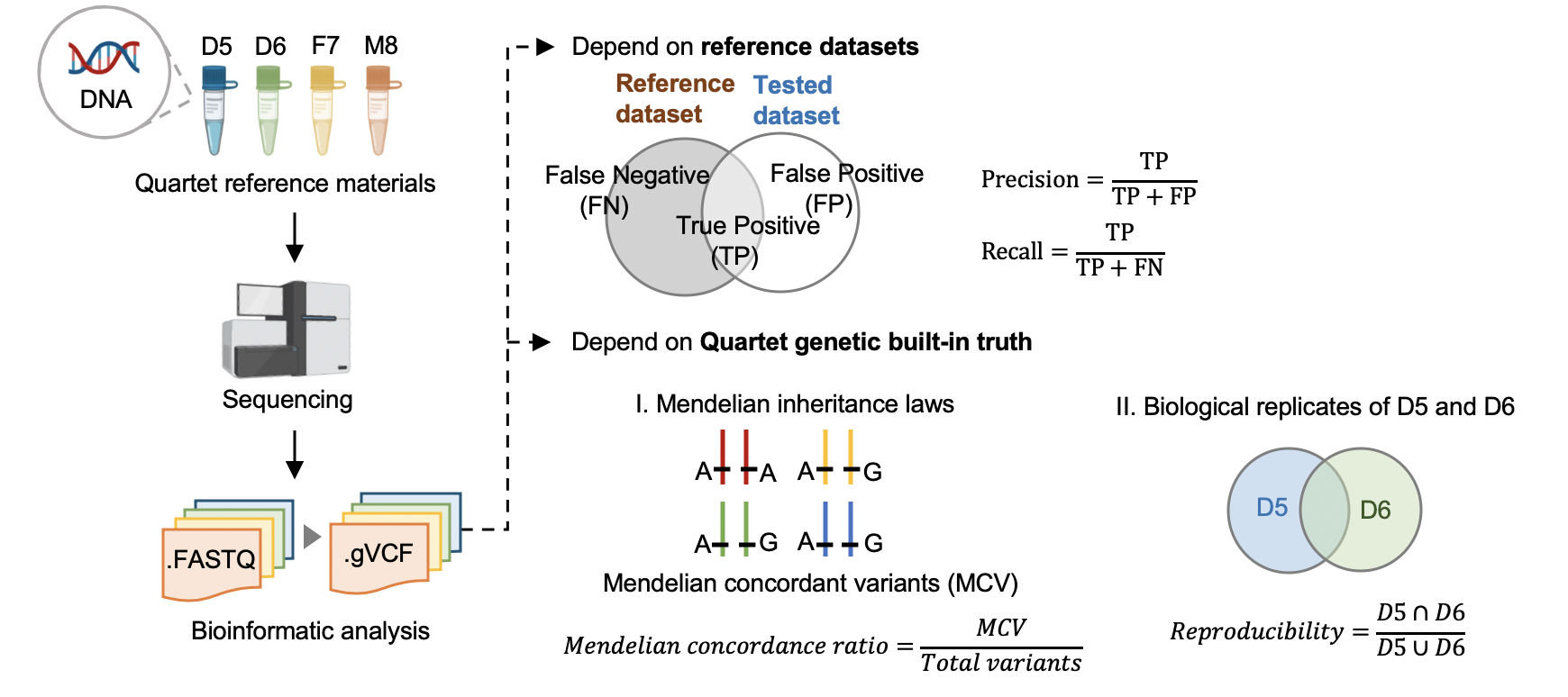

| We first established genomic DNA reference materials from four immortalized B-lymphoblastoid cell lines of a Chinese Quartet family including parents and monozygotic twin daughters to make performance assessment of germline variants calling results. To establish small variant benchmark calls and regions, we generated whole-genome sequencing data in nine batches, with depth ranging from 30x to 60x, by employing PCR-free and PCR libraries on four popular short-read sequencing platforms (Illumina HiSeq XTen, Illumina NovaSeq, MGISEQ-2000, and DNBSEQ-T7) with three replicates at each batch, resulting in 108 libraries in total and 27 libraries for each Quartet DNA reference material. Then, we selected variants concordant in multiple call sets and in Mendelian consistency within Quartet family members as small variant benchmark calls, resulting in 4.2 million high-confidence variants (SNV and Indel) and 2.66 G high confidence genomic region, covering 87.8% of the human reference genome (GRCh38, chr1-22 and X). Two orthogonal technologies were used for verifying the high-confidence variants. The consistency rate with PMRA (Axiom Precision Medicine Research Array) was 99.6%, and 95.9% of high-confidence variants were validated by 10X Genomics whole-genome sequencing data. Genetic built-in truth of the Quartet family design is another kind of “truth” within the four Quartet samples. Apart from comparison with benchmark calls in the benchmark regions to identify false-positive and false-negative variants, pedigree information among the Quartet DNA reference materials, i.e., reproducibility rate of variants between the twins and Mendelian concordance rate among family members, are complementary approaches to comprehensively estimate genome-wide variants calling performance. Finally, we developed a whole-genome sequencing data quality assessment pipeline and demonstrated its utilities with two examples of using the Quartet reference materials and datasets to evaluate data generation performance in three sequencing labs and different data analysis pipelines. | |||

| ### Quality control pipeline for WGS | |||

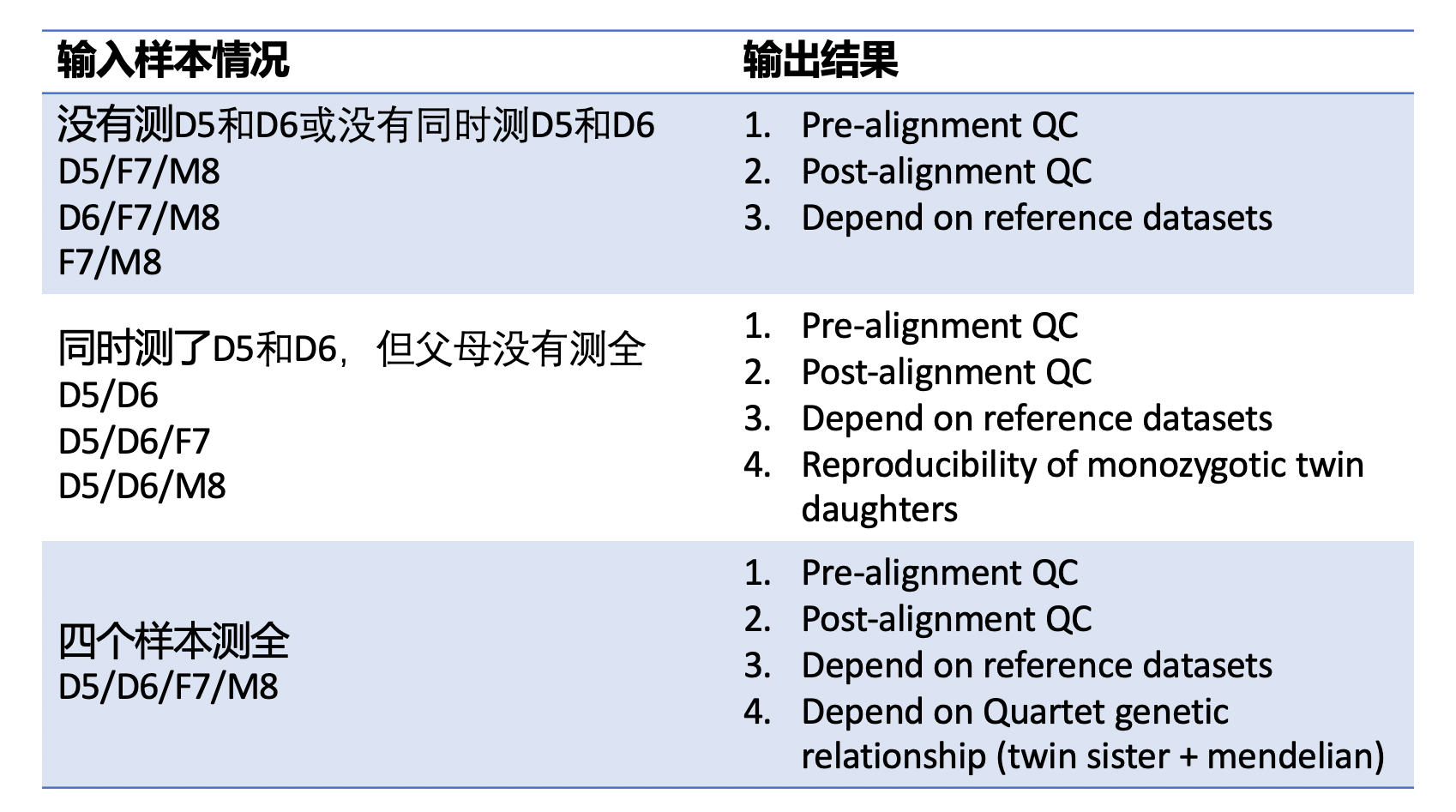

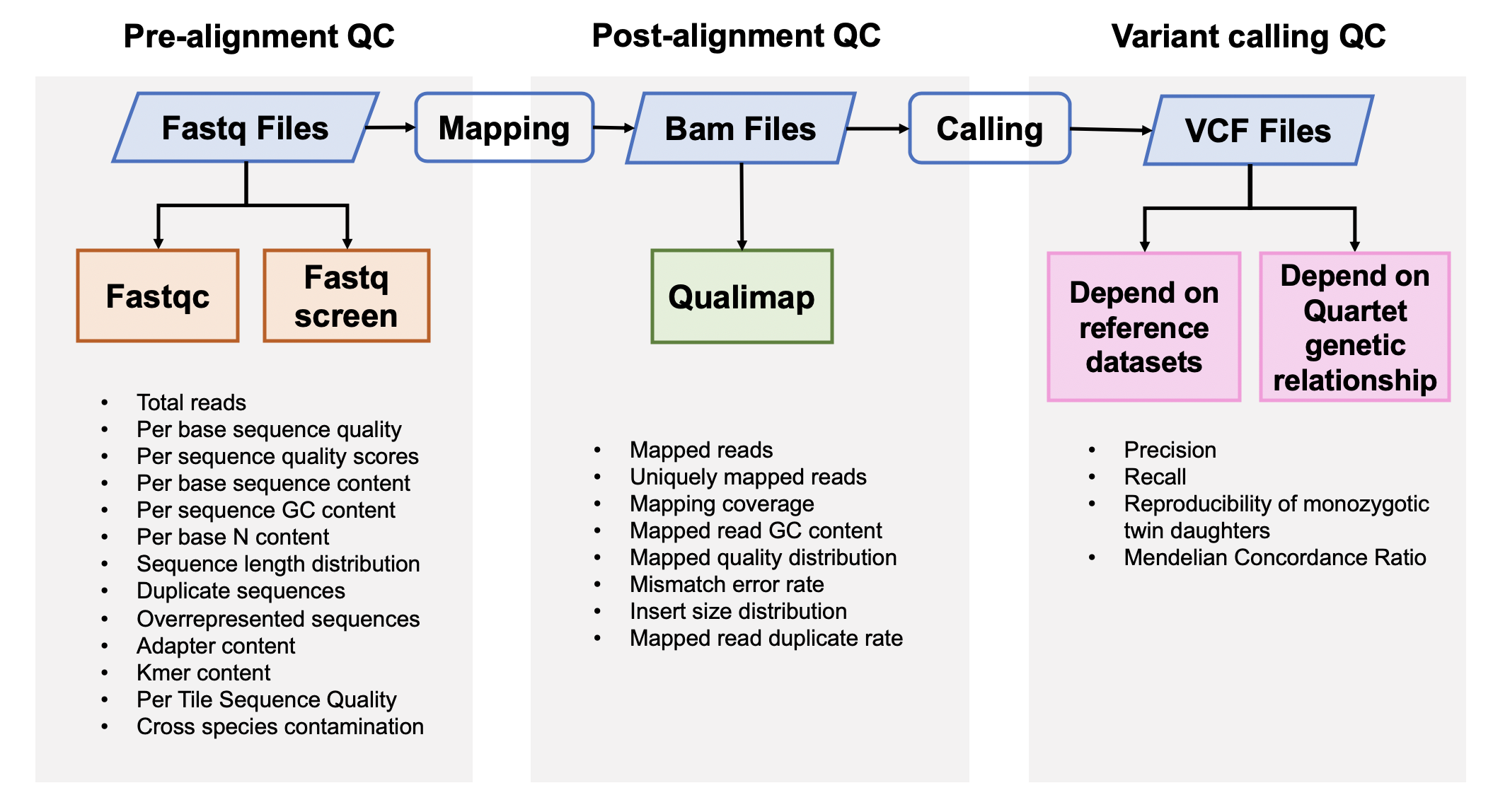

| This Quartet quality control pipeline evaluate the performance of reads quality and variant calling quality. This pipeline accepts FASTQ format input files or VCF format input files. If the users input FASTQ files, this APP will output the results of pre-alignment quality control from FASTQ files, post-alignment quality control from BAM files and variant calling quality control from VCF files. [GATK best practice pipelines](https://gatk.broadinstitute.org/hc/en-us/articles/360035535932-Germline-short-variant-discovery-SNPs-Indels-) (implemented by [SENTIEON software](https://support.sentieon.com/manual/)) were used to map reads to the reference genome and call variants. If the users input VCF files, this APP will only output the results of variant calling quality control. | |||

| Quartet quality control analysis pipeline started from FASTQ files is implemented across seven main procedures: | |||

| - Pre-alignment QC of FASTQ files | |||

| - Genome alignment | |||

| - Post-alignment QC of BAM files | |||

| - Germline variant calling | |||

| - Variant calling QC depended on benchmark sets of VCF files | |||

| - Check Mendelian ingeritance states across four Quartet samples of every variants | |||

| - Variant calling QC depended on Quartet genetic relationship of VCF files | |||

| Quartet quality control analysis pipeline started from VCF files is implemented across three main procedures: | |||

| - Variant calling QC depended on benchmark sets of VCF files | |||

| - Check Mendelian ingeritance states across four Quartet samples of every variants | |||

| - Variant calling QC depended on Quartet genetic relationship of VCF files | |||

|  | |||

| Results generated from this APP can be visualized by Choppy report. | |||

| ## Data Processing Steps | |||

| ### 1. Pre-alignment QC of FASTQ files | |||

| #### [Fastqc](<https://www.bioinformatics.babraham.ac.uk/projects/fastqc/>) v0.11.5 | |||

| [FastQC](<https://www.bioinformatics.babraham.ac.uk/projects/fastqc/Help/3%20Analysis%20Modules/>) is used to investigate the quality of fastq files | |||

| ```bash | |||

| fastqc -t <threads> -o <output_directory> <fastq_file> | |||

| ``` | |||

| #### [Fastq Screen](<https://www.bioinformatics.babraham.ac.uk/projects/fastq_screen/>) 0.12.0 | |||

| Fastq Screen is used to inspect whether the library were contaminated. For example, we expected 99% reads aligned to human genome, 10% reads aligned to mouse genome, which is partly homologous to human genome. If too many reads are aligned to E.Coli or Yeast, libraries or cell lines are probably comtminated. | |||

| ```bash | |||

| fastq_screen --aligner <aligner> --conf <config_file> --top <number_of_reads> --threads <threads> <fastq_file> | |||

| ``` | |||

| ### 2. Genome alignment | |||

| ####[sentieon-genomics](https://support.sentieon.com/manual/):v2019.11.28 | |||

| Reads were mapped to the human reference genome GRCh38 using Sentieon BWA. | |||

| ```bash | |||

| ${SENTIEON_INSTALL_DIR}/bin/bwa mem -M -R "@RG\tID:${group}\tSM:${sample}\tPL:${pl}" -t $nt -K 10000000 ${ref_dir}/${fasta} ${fastq_1} ${fastq_2} | ${SENTIEON_INSTALL_DIR}/bin/sentieon util sort -o ${sample}.sorted.bam -t $nt --sam2bam -i - | |||

| ``` | |||

| ### 3. Post-alignment QC | |||

| Qualimap and Paicard Tools (implemented by Sentieon) are used to check the quality of BAM files. Deduplicated BAM files are used in this step. | |||

| #### [Qualimap](<http://qualimap.bioinfo.cipf.es/>) 2.0.0 | |||

| ```bash | |||

| qualimap bamqc -bam <bam_file> -outformat PDF:HTML -nt <threads> -outdir <output_directory> --java-mem-size=32G | |||

| ``` | |||

| ####[Sentieon-genomics](https://support.sentieon.com/manual/):v2019.11.28 | |||

| ``` | |||

| ${SENTIEON_INSTALL_DIR}/bin/sentieon driver -r ${ref_dir}/${fasta} -t $nt -i ${Dedup_bam} --algo CoverageMetrics --omit_base_output ${sample}_deduped_coverage_metrics --algo MeanQualityByCycle ${sample}_deduped_mq_metrics.txt --algo QualDistribution ${sample}_deduped_qd_metrics.txt --algo GCBias --summary ${sample}_deduped_gc_summary.txt ${sample}_deduped_gc_metrics.txt --algo AlignmentStat ${sample}_deduped_aln_metrics.txt --algo InsertSizeMetricAlgo ${sample}_deduped_is_metrics.txt --algo QualityYield ${sample}_deduped_QualityYield.txt --algo WgsMetricsAlgo ${sample}_deduped_WgsMetricsAlgo.txt | |||

| ``` | |||

| ### 4. Germline variant calling | |||

| HaplotyperCaller implemented by Sentieon is used to identify germline variants. | |||

| ```bash | |||

| ${SENTIEON_INSTALL_DIR}/bin/sentieon driver -r ${ref_dir}/${fasta} -t $nt -i ${recaled_bam} --algo Haplotyper ${sample}_hc.vcf | |||

| ``` | |||

| ### 5. Variants Calling QC | |||

|  | |||

| #### 5.1 Performance assessment based on benchmark sets | |||

| #### [Hap.py](<https://github.com/Illumina/hap.py>) v0.3.9 | |||

| Variants were compared with benchmark calls in benchmark regions. | |||

| ```bash | |||

| hap.py <truth_vcf> <query_vcf> -f <bed_file> --threads <threads> -o <output_filename> | |||

| ``` | |||

| #### 5.2 Performance assessment based on Quartet genetic built-in truth | |||

| #### [VBT](https://github.com/sbg/VBT-TrioAnalysis) v1.1 | |||

| We splited the Quartet family to two trios (F7, M8, D5 and F7, M8, D6) and then do the Mendelian analysis. A Quartet Mendelian concordant variant is the same between the twins (D5 and D6) , and follow the Mendelian concordant between parents (F7 and M8). Mendelian concordance rate is the Mendelian concordance variant divided by total detected variants in a Quartet family. Only variants on chr1-22,X are included in this analysis. | |||

| ```bash | |||

| vbt mendelian -ref <fasta_file> -mother <family_merged_vcf> -father <family_merged_vcf> -child <family_merged_vcf> -pedigree <ped_file> -outDir <output_directory> -out-prefix <output_directory_prefix> --output-violation-regions -thread-count <threads> | |||

| ``` | |||

| ## Input files | |||

| ```bash | |||

| choppy samples renluyao/quartet_dna_quality_control_wgs_big_pipeline-latest --output samples | |||

| ``` | |||

| ####Samples CSV file | |||

| #### 1. Start from Fastq files | |||

| ```BASH | |||

| sample_id,project,fastq_1_D5,fastq_2_D5,fastq_1_D6,fastq_2_D6,fastq_1_F7,fastq_2_F7,fastq_1_M8,fastq_2_M8 | |||

| # sample_id in choppy system | |||

| # project name | |||

| # oss path of D5 fastq read1 file | |||

| # oss path of D5 fastq read2 file | |||

| # oss path of D6 fastq read1 file | |||

| # oss path of D6 fastq read2 file | |||

| # oss path of F7 fastq read1 file | |||

| # oss path of F7 fastq read2 file | |||

| # oss path of M8 fastq read1 file | |||

| # oss path of M8 fastq read2 file | |||

| ``` | |||

| #### 2. Start from VCF files | |||

| ```BASH | |||

| sample_id,project,vcf_D5,vcf_D6,vcf_F7,vcf_M8 | |||

| # sample_id in choppy system | |||

| # project name | |||

| # oss path of D5 VCF file | |||

| # oss path of D6 VCF file | |||

| # oss path of F7 VCF file | |||

| # oss path of M8 VCF file | |||

| ``` | |||

| ## Output Files | |||

| #### 1. extract_tables.wdl/extract_tables_vcf.wdl | |||

| (FASTQ) Pre-alignment QC: pre_alignment.txt | |||

| (FASTQ) Post-alignment QC: post_alignment.txt | |||

| (FASTQ/VCF) Variants calling QC: variants.calling.qc.txt | |||

| ####2. quartet_mendelian.wdl | |||

| (FASTQ/VCF) Variants calling QC: mendelian.txt | |||

| ## Output files format | |||

| ####1. pre_alignment.txt | |||

| | Column name | Description | | |||

| | ------------------------- | --------------------------------------------------------- | | |||

| | Sample | Sample name | | |||

| | %Dup | Percentage duplicate reads | | |||

| | %GC | Average GC percentage | | |||

| | Total Sequences (million) | Total sequences | | |||

| | %Human | Percentage of reads mapped to human genome | | |||

| | %EColi | Percentage of reads mapped to Ecoli | | |||

| | %Adapter | Percentage of reads mapped to adapter | | |||

| | %Vector | Percentage of reads mapped to vector | | |||

| | %rRNA | Percentage of reads mapped to rRNA | | |||

| | %Virus | Percentage of reads mapped to virus | | |||

| | %Yeast | Percentage of reads mapped to yeast | | |||

| | %Mitoch | Percentage of reads mapped to mitochondrion | | |||

| | %No hits | Percentage of reads not mapped to genomes mentioned above | | |||

| #### 2. post_alignment.txt | |||

| | Column name | Description | | |||

| | --------------------- | --------------------------------------------- | | |||

| | Sample | Sample name | | |||

| | %Mapping | Percentage of mapped reads | | |||

| | %Mismatch Rate | Mapping error rate | | |||

| | Mendelian Insert Size | Median insert size(bp) | | |||

| | %Q20 | Percentage of bases >Q20 | | |||

| | %Q30 | Percentage of bases >Q30 | | |||

| | Mean Coverage | Mean deduped coverage | | |||

| | Median Coverage | Median deduped coverage | | |||

| | PCT_1X | Fraction of genome with at least 1x coverage | | |||

| | PCT_5X | Fraction of genome with at least 5x coverage | | |||

| | PCT_10X | Fraction of genome with at least 10x coverage | | |||

| | PCT_20X | Fraction of genome with at least 20x coverage | | |||

| | PCT_30X | Fraction of genome with at least 30x coverage | | |||

| | Fold-80 | Fold-80 penalty | | |||

| | On target bases rate | On target bases rate | | |||

| ####3. variants.calling.qc.txt | |||

| | Column name | Description | | |||

| | --------------- | ------------------------------------------------------------ | | |||

| | Sample | Sample name | | |||

| | SNV number | Total SNV number (chr1-22,X) | | |||

| | INDEL number | Total INDEL number (chr1-22,X) | | |||

| | SNV query | SNV number in benchmark region | | |||

| | INDEL query | INDEL number in benchmark region | | |||

| | SNV TP | True positive SNV | | |||

| | INDEL TP | True positive INDEL | | |||

| | SNV FP | False positive SNV | | |||

| | INDEL FP | True positive INDEL | | |||

| | SNV FN | False negative SNV | | |||

| | INDEL FN | False negative INDEL | | |||

| | SNV precision | Precision of SNV calls when compared with benchmark calls in benchmark regions | | |||

| | INDEL precision | Precision of INDEL calls when compared with benchmark calls in benchmark regions | | |||

| | SNV recall | Recall of SNV calls when compared with benchmark calls in benchmark regions | | |||

| | INDEL recall | Recall of INDEL calls when compared with benchmark calls in benchmark regions | | |||

| | SNV F1 | F1 score of SNV calls when compared with benchmark calls in benchmark regions | | |||

| | INDEL F1 | F1 score of INDEL calls when compared with benchmark calls in benchmark regions | | |||

| ####4 {project}.summary.txt | |||

| | Column name | Description | | |||

| | ----------------------------- | ----------------------------------------------------------- | | |||

| | Family | Family name defined by inputed project name | | |||

| | Reproducibility_D5_D6 | Percentage of variants were shared by the twins (D5 and D6) | | |||

| | Mendelian_Concordance_Quartet | Percentage of variants were Mendelian concordance | | |||

BIN

codescripts/.DS_Store

Прегледај датотеку

+ 90

- 0

codescripts/D5_D6.py

Прегледај датотеку

| @@ -0,0 +1,90 @@ | |||

| from __future__ import division | |||

| import pandas as pd | |||

| import sys, argparse, os | |||

| # input arguments | |||

| parser = argparse.ArgumentParser(description="this script is to calculate reproducibility between Quartet_D5 and Quartet_D6s") | |||

| parser.add_argument('-sister', '--sister', type=str, help='sister.txt', required=True) | |||

| parser.add_argument('-project', '--project', type=str, help='project name', required=True) | |||

| args = parser.parse_args() | |||

| sister_file = args.sister | |||

| project_name = args.project | |||

| # output file | |||

| output_name = project_name + '.sister.reproducibility.txt' | |||

| output_file = open(output_name,'w') | |||

| # input files | |||

| sister_dat = pd.read_table(sister_file) | |||

| indel_sister_same = 0 | |||

| indel_sister_diff = 0 | |||

| snv_sister_same = 0 | |||

| snv_sister_diff = 0 | |||

| for row in sister_dat.itertuples(): | |||

| # snv indel | |||

| if ',' in row[4]: | |||

| alt = row[4].split(',') | |||

| alt_len = [len(i) for i in alt] | |||

| alt_max = max(alt_len) | |||

| else: | |||

| alt_max = len(row[4]) | |||

| alt = alt_max | |||

| ref = row[3] | |||

| if len(ref) == 1 and alt == 1: | |||

| cate = 'SNV' | |||

| elif len(ref) > alt: | |||

| cate = 'INDEL' | |||

| elif alt > len(ref): | |||

| cate = 'INDEL' | |||

| elif len(ref) == alt: | |||

| cate = 'INDEL' | |||

| # sister | |||

| if row[5] == row[6]: | |||

| if row[5] == './.': | |||

| mendelian = 'noInfo' | |||

| sister_count = "no" | |||

| elif row[5] == '0/0': | |||

| mendelian = 'Ref' | |||

| sister_count = "no" | |||

| else: | |||

| mendelian = '1' | |||

| sister_count = "yes_same" | |||

| else: | |||

| mendelian = '0' | |||

| if (row[5] == './.' or row[5] == '0/0') and (row[6] == './.' or row[6] == '0/0'): | |||

| sister_count = "no" | |||

| else: | |||

| sister_count = "yes_diff" | |||

| if cate == 'SNV': | |||

| if sister_count == 'yes_same': | |||

| snv_sister_same += 1 | |||

| elif sister_count == 'yes_diff': | |||

| snv_sister_diff += 1 | |||

| else: | |||

| pass | |||

| elif cate == 'INDEL': | |||

| if sister_count == 'yes_same': | |||

| indel_sister_same += 1 | |||

| elif sister_count == 'yes_diff': | |||

| indel_sister_diff += 1 | |||

| else: | |||

| pass | |||

| indel_sister = indel_sister_same/(indel_sister_same + indel_sister_diff) | |||

| snv_sister = snv_sister_same/(snv_sister_same + snv_sister_diff) | |||

| outcolumn = 'Project\tReproducibility_D5_D6\n' | |||

| indel_outResult = project_name + '.INDEL' + '\t' + str(indel_sister) + '\n' | |||

| snv_outResult = project_name + '.SNV' + '\t' + str(snv_sister) + '\n' | |||

| output_file.write(outcolumn) | |||

| output_file.write(indel_outResult) | |||

| output_file.write(snv_outResult) | |||

+ 37

- 0

codescripts/Indel_bed.py

Прегледај датотеку

| @@ -0,0 +1,37 @@ | |||

| import pandas as pd | |||

| import sys, argparse, os | |||

| mut = pd.read_table('/mnt/pgx_src_data_pool_4/home/renluyao/manuscript/MIE/vcf/mutation_type',header=None) | |||

| outIndel = open(sys.argv[1],'w') | |||

| for row in mut.itertuples(): | |||

| if ',' in row._4: | |||

| alt_seq = row._4.split(',') | |||

| alt_len = [len(i) for i in alt_seq] | |||

| alt = max(alt_len) | |||

| else: | |||

| alt = len(row._4) | |||

| ref = row._3 | |||

| pos = row._2 | |||

| if len(ref) == 1 and alt == 1: | |||

| pass | |||

| elif len(ref) > alt: | |||

| StartPos = int(pos) - 1 | |||

| EndPos = int(pos) + (len(ref) - 1) | |||

| outline_indel = row._1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\n' | |||

| outIndel.write(outline_indel) | |||

| elif alt > len(ref): | |||

| StartPos = int(pos) - 1 | |||

| EndPos = int(pos) + (alt - 1) | |||

| outline_indel = row._1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\n' | |||

| outIndel.write(outline_indel) | |||

| elif len(ref) == alt: | |||

| StartPos = int(pos) - 1 | |||

| EndPos = int(pos) + (alt - 1) | |||

| outline_indel = row._1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\n' | |||

| outIndel.write(outline_indel) | |||

+ 72

- 0

codescripts/bed_region.py

Прегледај датотеку

| @@ -0,0 +1,72 @@ | |||

| import pandas as pd | |||

| import sys, argparse, os | |||

| mut = mut = pd.read_table('/mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/vcf/mutation_type',header=None) | |||

| vote = pd.read_table('/mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/all_info/benchmark.vote.mendelian.txt',header=None) | |||

| merged_df = pd.merge(vote, mut, how='inner', left_on=[0,1], right_on = [0,1]) | |||

| outFile = open(sys.argv[1],'w') | |||

| outIndel = open(sys.argv[2],'w') | |||

| for row in merged_df.itertuples(): | |||

| #d5 | |||

| if ',' in row._7: | |||

| d5 = row._7.split(',') | |||

| d5_len = [len(i) for i in d5] | |||

| d5_alt = max(d5_len) | |||

| else: | |||

| d5_alt = len(row._7) | |||

| #d6 | |||

| if ',' in row._15: | |||

| d6 = row._15.split(',') | |||

| d6_len = [len(i) for i in d6] | |||

| d6_alt = max(d6_len) | |||

| else: | |||

| d6_alt = len(row._15) | |||

| #f7 | |||

| if ',' in row._23: | |||

| f7 = row._23.split(',') | |||

| f7_len = [len(i) for i in f7] | |||

| f7_alt = max(f7_len) | |||

| else: | |||

| f7_alt = len(row._23) | |||

| #m8 | |||

| if ',' in row._31: | |||

| m8 = row._31.split(',') | |||

| m8_len = [len(i) for i in m8] | |||

| m8_alt = max(m8_len) | |||

| else: | |||

| m8_alt = len(row._31) | |||

| all_length = [d5_alt,d6_alt,f7_alt,m8_alt] | |||

| alt = max(all_length) | |||

| ref = row._35 | |||

| pos = int(row._2) | |||

| if len(ref) == 1 and alt == 1: | |||

| StartPos = int(pos) -1 | |||

| EndPos = int(pos) | |||

| cate = 'SNV' | |||

| elif len(ref) > alt: | |||

| StartPos = int(pos) - 1 | |||

| EndPos = int(pos) + (len(ref) - 1) | |||

| cate = 'INDEL' | |||

| outline_indel = row._1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\n' | |||

| outIndel.write(outline_indel) | |||

| elif alt > len(ref): | |||

| StartPos = int(pos) - 1 | |||

| EndPos = int(pos) + (alt - 1) | |||

| cate = 'INDEL' | |||

| outline_indel = row._1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\n' | |||

| outIndel.write(outline_indel) | |||

| elif len(ref) == alt: | |||

| StartPos = int(pos) - 1 | |||

| EndPos = int(pos) + (alt - 1) | |||

| cate = 'INDEL' | |||

| outline_indel = row._1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\n' | |||

| outIndel.write(outline_indel) | |||

| outline = row._1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\t' + str(row._2) + '\t' + cate + '\n' | |||

| outFile.write(outline) | |||

+ 67

- 0

codescripts/cluster.sh

Прегледај датотеку

| @@ -0,0 +1,67 @@ | |||

| cat benchmark.men.vote.diffbed.lengthlessthan50.txt | awk '{print $1"\t"$2"\t"".""\t"$35"\t"$7"\t.\t.\t.\tGT\t"$6}' | grep -v '0/0' > LCL5.body | |||

| cat benchmark.men.vote.diffbed.lengthlessthan50.txt | awk '{print $1"\t"$2"\t"".""\t"$35"\t"$15"\t.\t.\t.\tGT\t"$14}' | grep -v '0/0' > LCL6.body | |||

| cat benchmark.men.vote.diffbed.lengthlessthan50.txt | awk '{print $1"\t"$2"\t"".""\t"$35"\t"$23"\t.\t.\t.\tGT\t"$22}' | grep -v '0/0'> LCL7.body | |||

| cat benchmark.men.vote.diffbed.lengthlessthan50.txt | awk '{print $1"\t"$2"\t"".""\t"$35"\t"$31"\t.\t.\t.\tGT\t"$30}'| grep -v '0/0' > LCL8.body | |||

| cat header5 LCL5.body > LCL5.beforediffbed.vcf | |||

| cat header6 LCL6.body > LCL6.beforediffbed.vcf | |||

| cat header7 LCL7.body > LCL7.beforediffbed.vcf | |||

| cat header8 LCL8.body > LCL8.beforediffbed.vcf | |||

| rtg bgzip *beforediffbed.vcf | |||

| rtg index *beforediffbed.vcf.gz | |||

| rtg vcffilter -i LCL5.beforediffbed.vcf.gz --exclude-bed=/mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed -o LCL5.afterfilterdiffbed.vcf.gz | |||

| rtg vcffilter -i LCL6.beforediffbed.vcf.gz --exclude-bed=/mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed -o LCL6.afterfilterdiffbed.vcf.gz | |||

| rtg vcffilter -i LCL7.beforediffbed.vcf.gz --exclude-bed=/mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed -o LCL7.afterfilterdiffbed.vcf.gz | |||

| rtg vcffilter -i LCL8.beforediffbed.vcf.gz --exclude-bed=/mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed -o LCL8.afterfilterdiffbed.vcf.gz | |||

| /mnt/pgx_src_data_pool_4/home/renluyao/softwares/annovar/table_annovar.pl LCL5.beforediffbed.vcf.gz /mnt/pgx_src_data_pool_4/home/renluyao/softwares/annovar/humandb \ | |||

| -buildver hg38 \ | |||

| -out LCL5 \ | |||

| -remove \ | |||

| -protocol 1000g2015aug_all,1000g2015aug_afr,1000g2015aug_amr,1000g2015aug_eas,1000g2015aug_eur,1000g2015aug_sas,clinvar_20190305,gnomad211_genome \ | |||

| -operation f,f,f,f,f,f,f,f \ | |||

| -nastring . \ | |||

| -vcfinput \ | |||

| --thread 8 | |||

| rtg vcfeval -b /mnt/pgx_src_data_pool_4/home/renluyao/Quartet/GIAB/NA12878_HG001/HG001_GRCh38_GIAB_highconf_CG-IllFB-IllGATKHC-Ion-10X-SOLID_CHROM1-X_v.3.3.2_highconf_PGandRTGphasetransfer.vcf.gz -c LCL5.afterfilterdiffbed.vcf.gz -o LCL5_NIST -t /mnt/pgx_src_data_pool_4/home/renluyao/annotation/hg38/GRCh38.d1.vd1.sdf/ | |||

| rtg vcfeval -b /mnt/pgx_src_data_pool_4/home/renluyao/Quartet/GIAB/NA12878_HG001/HG001_GRCh38_GIAB_highconf_CG-IllFB-IllGATKHC-Ion-10X-SOLID_CHROM1-X_v.3.3.2_highconf_PGandRTGphasetransfer.vcf.gz -c LCL6.afterfilterdiffbed.vcf.gz -o LCL6_NIST -t /mnt/pgx_src_data_pool_4/home/renluyao/annotation/hg38/GRCh38.d1.vd1.sdf/ | |||

| rtg vcfeval -b /mnt/pgx_src_data_pool_4/home/renluyao/Quartet/GIAB/NA12878_HG001/HG001_GRCh38_GIAB_highconf_CG-IllFB-IllGATKHC-Ion-10X-SOLID_CHROM1-X_v.3.3.2_highconf_PGandRTGphasetransfer.vcf.gz -c LCL7.afterfilterdiffbed.vcf.gz -o LCL7_NIST -t /mnt/pgx_src_data_pool_4/home/renluyao/annotation/hg38/GRCh38.d1.vd1.sdf/ | |||

| rtg vcfeval -b /mnt/pgx_src_data_pool_4/home/renluyao/Quartet/GIAB/NA12878_HG001/HG001_GRCh38_GIAB_highconf_CG-IllFB-IllGATKHC-Ion-10X-SOLID_CHROM1-X_v.3.3.2_highconf_PGandRTGphasetransfer.vcf.gz -c LCL8.afterfilterdiffbed.vcf.gz -o LCL8_NIST -t /mnt/pgx_src_data_pool_4/home/renluyao/annotation/hg38/GRCh38.d1.vd1.sdf/ | |||

| zcat LCL5.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) == 1)) { print } }' | wc -l | |||

| zcat LCL6.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) == 1)) { print } }' | wc -l | |||

| zcat LCL7.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) == 1)) { print } }' | wc -l | |||

| zcat LCL8.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) == 1)) { print } }' | wc -l | |||

| zcat LCL5.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) < 11) && (length($5) > 1)) { print } }' | wc -l | |||

| zcat LCL6.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) < 11) && (length($5) > 1)) { print } }' | wc -l | |||

| zcat LCL7.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) < 11) && (length($5) > 1)) { print } }' | wc -l | |||

| zcat LCL8.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) < 11) && (length($5) > 1)) { print } }' | wc -l | |||

| zcat LCL5.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) > 10)) { print } }' | wc -l | |||

| zcat LCL6.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) > 10)) { print } }' | wc -l | |||

| zcat LCL7.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) > 10)) { print } }' | wc -l | |||

| zcat LCL8.afterfilterdiffbed.vcf.gz | grep -v '#' | awk '{ if ((length($4) == 1) && (length($5) > 10)) { print } }' | wc -l | |||

| bedtools subtract -a LCL5.27.homo_ref.consensus.bed -b /mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed > LCL5.27.homo_ref.consensus.filtereddiffbed.bed | |||

| bedtools subtract -a LCL6.27.homo_ref.consensus.bed -b /mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed > LCL6.27.homo_ref.consensus.filtereddiffbed.bed | |||

| bedtools subtract -a LCL7.27.homo_ref.consensus.bed -b /mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed > LCL7.27.homo_ref.consensus.filtereddiffbed.bed | |||

| bedtools subtract -a LCL8.27.homo_ref.consensus.bed -b /mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/MIE/diff.merged.bed > LCL8.27.homo_ref.consensus.filtereddiffbed.bed | |||

| python vcf2bed.py LCL5.body LCL5.variants.bed | |||

| python vcf2bed.py LCL6.body LCL6.variants.bed | |||

| python vcf2bed.py LCL7.body LCL7.variants.bed | |||

| python vcf2bed.py LCL8.body LCL8.variants.bed | |||

| cat /mnt/pgx_src_data_pool_4/home/renluyao/manuscript/benchmark_calls/all_info/LCL5.variants.bed | cut -f1,11,12 | cat - LCL5.27.homo_ref.consensus.filtereddiffbed.bed | sort -k1,1 -k2,2n > LCL5.high.confidence.bed | |||

+ 102

- 0

codescripts/dna_data_summary.py

Прегледај датотеку

| @@ -0,0 +1,102 @@ | |||

| import pandas as pd | |||

| from functools import reduce | |||

| import sys, argparse, os | |||

| import glob | |||

| parser = argparse.ArgumentParser(description="This script is to get information from multiqc and sentieon, output the raw fastq, bam and variants calling (precision and recall) quality metrics") | |||

| parser.add_argument('-pre', '--pre_alignment_path', type=str, help='pre-alignment directory') | |||

| parser.add_argument('-post', '--post_alignment_path', type=str, help='post-alignment directory') | |||

| parser.add_argument('-vcf', '--benchmark_path', type=str, help='benchmark directory') | |||

| parser.add_argument('-men', '--mendelian_path', type=str, help='mendelian directory') | |||

| args = parser.parse_args() | |||

| # Rename input: | |||

| pre_alignment_path = args.pre_alignment_path | |||

| post_alignment_path = args.post_alignment_path | |||

| benchmark_path = args.benchmark_path | |||

| mendelian_path = args.mendelian_path | |||

| ###pre-alignment | |||

| pre_alignment_df = pd.concat(map(pd.read_table,glob.glob(os.path.join(pre_alignment_path,'*.txt')))) | |||

| pre_alignment_df.to_csv('pre-alignment-all.txt',sep="\t",index=0) | |||

| ###post-alignment | |||

| post_alignment_df = pd.concat(map(pd.read_table,glob.glob(os.path.join(post_alignment_path,'*.txt')))) | |||

| post_alignment_df.to_csv('post-alignment-all.txt',sep="\t",index=0) | |||

| ###variant-calling qc | |||

| ## detail | |||

| variant_calling_df = pd.concat(map(pd.read_table,glob.glob(os.path.join(benchmark_path,'*.txt')))) | |||

| variant_calling_df.to_csv('variant-calling-all.txt',sep="\t",index=0) | |||

| ## mean + sd | |||

| ####snv | |||

| a = variant_calling_df["SNV precision"].mean().round(2) | |||

| b = variant_calling_df["SNV precision"].std().round(2) | |||

| c = variant_calling_df["SNV recall"].mean().round(2) | |||

| d = variant_calling_df["SNV precision"].std().round(2) | |||

| variant_calling_df["SNV F1 score"] = 2 * variant_calling_df["SNV precision"] * variant_calling_df["SNV recall"] / (variant_calling_df["SNV precision"] + variant_calling_df["SNV recall"]) | |||

| e = variant_calling_df["SNV F1 score"].mean().round(2) | |||

| f = variant_calling_df["SNV F1 score"].std().round(2) | |||

| #### indel | |||

| a2 = variant_calling_df["INDEL precision"].mean().round(2) | |||

| b2 = variant_calling_df["INDEL precision"].std().round(2) | |||

| c2 = variant_calling_df["INDEL recall"].mean().round(2) | |||

| d2 = variant_calling_df["INDEL precision"].std().round(2) | |||

| variant_calling_df["INDEL F1 score"] = 2 * variant_calling_df["INDEL precision"] * variant_calling_df["INDEL recall"] / (variant_calling_df["INDEL precision"] + variant_calling_df["INDEL recall"]) | |||

| e2 = variant_calling_df["INDEL F1 score"].mean().round(2) | |||

| f2 = variant_calling_df["INDEL F1 score"].std().round(2) | |||

| data = {'precision':[str(a),str(a2)], 'precision_sd':[str(b),str(b2)], 'recall':[str(c),str(c2)], 'recall_sd':[str(d),str(d2)], 'F1-score':[str(e),str(e2)], 'F1-score_sd':[str(f),str(f2)] } | |||

| df = pd.DataFrame(data, index =['SNV', 'INDEL']) | |||

| df.to_csv('benchmark_summary.txt',sep="\t") | |||

| ### Mendelian | |||

| mendelian_df = pd.concat(map(pd.read_table,glob.glob(os.path.join(mendelian_path,'*.txt')))) | |||

| mendelian_df.to_csv('mendelian-all.txt',sep="\t",index=0) | |||

| ### snv | |||

| snv = mendelian_df[mendelian_df['Family'].str.contains("SNV")] | |||

| indel = mendelian_df[mendelian_df['Family'].str.contains("INDEL")] | |||

| g = snv["Mendelian_Concordance_Rate"].mean().round(2) | |||

| h = snv["Mendelian_Concordance_Rate"].std().round(2) | |||

| g2 = indel["Mendelian_Concordance_Rate"].mean().round(2) | |||

| h2 = indel["Mendelian_Concordance_Rate"].std().round(2) | |||

| data = {'MCR':[str(g),str(g2)], 'MCR_sd':[str(h),str(h2)]} | |||

| df = pd.DataFrame(data, index =['SNV', 'INDEL']) | |||

| df.to_csv('mendelian_summary.txt',sep="\t") | |||

+ 137

- 0

codescripts/extract_tables.py

Прегледај датотеку

| @@ -0,0 +1,137 @@ | |||

| import json | |||

| import pandas as pd | |||

| from functools import reduce | |||

| import sys, argparse, os | |||

| parser = argparse.ArgumentParser(description="This script is to get information from multiqc and sentieon, output the raw fastq, bam and variants calling (precision and recall) quality metrics") | |||

| parser.add_argument('-quality', '--quality_yield', type=str, help='*.quality_yield.txt') | |||

| parser.add_argument('-depth', '--wgs_metrics', type=str, help='*deduped_WgsMetricsAlgo.txt') | |||

| parser.add_argument('-aln', '--aln_metrics', type=str, help='*_deduped_aln_metrics.txt') | |||

| parser.add_argument('-is', '--is_metrics', type=str, help='*_deduped_is_metrics.txt') | |||

| parser.add_argument('-hs', '--hs_metrics', type=str, help='*_deduped_hs_metrics.txt') | |||

| parser.add_argument('-fastqc', '--fastqc', type=str, help='multiqc_fastqc.txt') | |||

| parser.add_argument('-fastqscreen', '--fastqscreen', type=str, help='multiqc_fastq_screen.txt') | |||

| parser.add_argument('-hap', '--happy', type=str, help='multiqc_happy_data.json', required=True) | |||

| parser.add_argument('-project', '--project_name', type=str, help='project_name') | |||

| args = parser.parse_args() | |||

| if args.quality_yield: | |||

| # Rename input: | |||

| quality_yield_file = args.quality_yield | |||

| wgs_metrics_file = args.wgs_metrics | |||

| aln_metrics_file = args.aln_metrics | |||

| is_metrics_file = args.is_metrics | |||

| fastqc_file = args.fastqc | |||

| fastqscreen_file = args.fastqscreen | |||

| hap_file = args.happy | |||

| project_name = args.project_name | |||

| ############################################# | |||

| # fastqc | |||

| fastqc = pd.read_table(fastqc_file) | |||

| # fastqscreen | |||

| dat = pd.read_table(fastqscreen_file) | |||

| fastqscreen = dat.loc[:, dat.columns.str.endswith('percentage')] | |||

| dat['Sample'] = [i.replace('_screen','') for i in dat['Sample']] | |||

| fastqscreen.insert(loc=0, column='Sample', value=dat['Sample']) | |||

| # pre-alignment | |||

| pre_alignment_dat = pd.merge(fastqc,fastqscreen,how="outer",left_on=['Sample'],right_on=['Sample']) | |||

| pre_alignment_dat['FastQC_mqc-generalstats-fastqc-total_sequences'] = pre_alignment_dat['FastQC_mqc-generalstats-fastqc-total_sequences']/1000000 | |||

| del pre_alignment_dat['FastQC_mqc-generalstats-fastqc-percent_fails'] | |||

| del pre_alignment_dat['FastQC_mqc-generalstats-fastqc-avg_sequence_length'] | |||

| del pre_alignment_dat['ERCC percentage'] | |||

| del pre_alignment_dat['Phix percentage'] | |||

| del pre_alignment_dat['Mouse percentage'] | |||

| pre_alignment_dat = pre_alignment_dat.round(2) | |||

| pre_alignment_dat.columns = ['Sample','%Dup','%GC','Total Sequences (million)','%Human','%EColi','%Adapter','%Vector','%rRNA','%Virus','%Yeast','%Mitoch','%No hits'] | |||

| pre_alignment_dat.to_csv('pre_alignment.txt',sep="\t",index=0) | |||

| ############################ | |||

| dat = pd.read_table(aln_metrics_file,index_col=False) | |||

| dat['PCT_ALIGNED_READS'] = dat["PF_READS_ALIGNED"]/dat["TOTAL_READS"] | |||

| aln_metrics = dat[["Sample", "PCT_ALIGNED_READS","PF_MISMATCH_RATE"]] | |||

| aln_metrics = aln_metrics * 100 | |||

| aln_metrics['Sample'] = [x[-1] for x in aln_metrics['Sample'].str.split('/')] | |||

| dat = pd.read_table(is_metrics_file,index_col=False) | |||

| is_metrics = dat[['Sample', 'MEDIAN_INSERT_SIZE']] | |||

| is_metrics['Sample'] = [x[-1] for x in is_metrics['Sample'].str.split('/')] | |||

| dat = pd.read_table(quality_yield_file,index_col=False) | |||

| dat['%Q20'] = dat['Q20_BASES']/dat['TOTAL_BASES'] | |||

| dat['%Q30'] = dat['Q30_BASES']/dat['TOTAL_BASES'] | |||

| quality_yield = dat[['Sample','%Q20','%Q30']] | |||

| quality_yield = quality_yield * 100 | |||

| quality_yield['Sample'] = [x[-1] for x in quality_yield['Sample'].str.split('/')] | |||

| dat = pd.read_table(wgs_metrics_file,index_col=False) | |||

| wgs_metrics = dat[['Sample','MEDIAN_COVERAGE','PCT_1X', 'PCT_5X', 'PCT_10X','PCT_20X','PCT_30X']] | |||

| wgs_metrics['PCT_1X'] = wgs_metrics['PCT_1X'] * 100 | |||

| wgs_metrics['PCT_5X'] = wgs_metrics['PCT_5X'] * 100 | |||

| wgs_metrics['PCT_10X'] = wgs_metrics['PCT_10X'] * 100 | |||

| wgs_metrics['PCT_20X'] = wgs_metrics['PCT_20X'] * 100 | |||

| wgs_metrics['PCT_30X'] = wgs_metrics['PCT_30X'] * 100 | |||

| wgs_metrics['Sample'] = [x[-1] for x in wgs_metrics['Sample'].str.split('/')] | |||

| hs_metrics = dat[['Sample','FOLD_80_BASE_PENALTY','PCT_USABLE_BASES_ON_TARGET']] | |||

| data_frames = [aln_metrics, is_metrics, quality_yield, wgs_metrics, hs_metrics] | |||

| post_alignment_dat = reduce(lambda left,right: pd.merge(left,right,on=['Sample'],how='outer'), data_frames) | |||

| post_alignment_dat.columns = ['Sample', '%Mapping', '%Mismatch Rate', 'Mendelian Insert Size','%Q20', '%Q30', 'Median Coverage', 'PCT_1X', 'PCT_5X', 'PCT_10X','PCT_20X','PCT_30X','Fold-80','On target bases rate'] | |||

| post_alignment_dat = post_alignment_dat.round(2) | |||

| post_alignment_dat.to_csv('post_alignment.txt',sep="\t",index=0) | |||

| ######################################### | |||

| # variants calling | |||

| with open(hap_file) as hap_json: | |||

| happy = json.load(hap_json) | |||

| dat =pd.DataFrame.from_records(happy) | |||

| dat = dat.loc[:, dat.columns.str.endswith('ALL')] | |||

| dat_transposed = dat.T | |||

| dat_transposed = dat_transposed.loc[:,['sample_id','QUERY.TOTAL','METRIC.Precision','METRIC.Recall']] | |||

| indel = dat_transposed[['INDEL' in s for s in dat_transposed.index]] | |||

| snv = dat_transposed[['SNP' in s for s in dat_transposed.index]] | |||

| indel.reset_index(drop=True, inplace=True) | |||

| snv.reset_index(drop=True, inplace=True) | |||

| benchmark = pd.concat([snv, indel], axis=1) | |||

| benchmark = benchmark[["sample_id", 'QUERY.TOTAL', 'METRIC.Precision', 'METRIC.Recall']] | |||

| benchmark.columns = ['Sample','sample_id','SNV number','INDEL number','SNV precision','INDEL precision','SNV recall','INDEL recall'] | |||

| benchmark = benchmark[['Sample','SNV number','INDEL number','SNV precision','INDEL precision','SNV recall','INDEL recall']] | |||

| benchmark['SNV precision'] = benchmark['SNV precision'].astype(float) | |||

| benchmark['INDEL precision'] = benchmark['INDEL precision'].astype(float) | |||

| benchmark['SNV recall'] = benchmark['SNV recall'].astype(float) | |||

| benchmark['INDEL recall'] = benchmark['INDEL recall'].astype(float) | |||

| benchmark['SNV precision'] = benchmark['SNV precision'] * 100 | |||

| benchmark['INDEL precision'] = benchmark['INDEL precision'] * 100 | |||

| benchmark['SNV recall'] = benchmark['SNV recall'] * 100 | |||

| benchmark['INDEL recall'] = benchmark['INDEL recall']* 100 | |||

| benchmark = benchmark.round(2) | |||

| benchmark.to_csv('variants.calling.qc.txt',sep="\t",index=0) | |||

| else: | |||

| hap_file = args.happy | |||

| with open(hap_file) as hap_json: | |||

| happy = json.load(hap_json) | |||

| dat =pd.DataFrame.from_records(happy) | |||

| dat = dat.loc[:, dat.columns.str.endswith('ALL')] | |||

| dat_transposed = dat.T | |||

| dat_transposed = dat_transposed.loc[:,['sample_id','QUERY.TOTAL','METRIC.Precision','METRIC.Recall']] | |||

| indel = dat_transposed[['INDEL' in s for s in dat_transposed.index]] | |||

| snv = dat_transposed[['SNP' in s for s in dat_transposed.index]] | |||

| indel.reset_index(drop=True, inplace=True) | |||

| snv.reset_index(drop=True, inplace=True) | |||

| benchmark = pd.concat([snv, indel], axis=1) | |||

| benchmark = benchmark[["sample_id", 'QUERY.TOTAL', 'METRIC.Precision', 'METRIC.Recall']] | |||

| benchmark.columns = ['Sample','sample_id','SNV number','INDEL number','SNV precision','INDEL precision','SNV recall','INDEL recall'] | |||

| benchmark = benchmark[['Sample','SNV number','INDEL number','SNV precision','INDEL precision','SNV recall','INDEL recall']] | |||

| benchmark['SNV precision'] = benchmark['SNV precision'].astype(float) | |||

| benchmark['INDEL precision'] = benchmark['INDEL precision'].astype(float) | |||

| benchmark['SNV recall'] = benchmark['SNV recall'].astype(float) | |||

| benchmark['INDEL recall'] = benchmark['INDEL recall'].astype(float) | |||

| benchmark['SNV precision'] = benchmark['SNV precision'] * 100 | |||

| benchmark['INDEL precision'] = benchmark['INDEL precision'] * 100 | |||

| benchmark['SNV recall'] = benchmark['SNV recall'] * 100 | |||

| benchmark['INDEL recall'] = benchmark['INDEL recall']* 100 | |||

| benchmark = benchmark.round(2) | |||

| benchmark.to_csv('variants.calling.qc.txt',sep="\t",index=0) | |||

+ 92

- 0

codescripts/extract_vcf_information.py

Прегледај датотеку

| @@ -0,0 +1,92 @@ | |||

| import sys,getopt | |||

| import os | |||

| import re | |||

| import fileinput | |||

| import pandas as pd | |||

| def usage(): | |||

| print( | |||

| """ | |||

| Usage: python extract_vcf_information.py -i input_merged_vcf_file -o parsed_file | |||

| This script will extract SNVs and Indels information from the vcf files and output a tab-delimited files. | |||

| Input: | |||

| -i the selected vcf file | |||

| Output: | |||

| -o tab-delimited parsed file | |||

| """) | |||

| # select supported small variants | |||

| def process(oneLine): | |||

| line = oneLine.rstrip() | |||

| strings = line.strip().split('\t') | |||

| infoParsed = parse_INFO(strings[7]) | |||

| formatKeys = strings[8].split(':') | |||

| formatValues = strings[9].split(':') | |||

| for i in range(0,len(formatKeys) -1) : | |||

| if formatKeys[i] == 'AD': | |||

| ra = formatValues[i].split(',') | |||

| infoParsed['RefDP'] = ra[0] | |||

| infoParsed['AltDP'] = ra[1] | |||

| if (int(ra[1]) + int(ra[0])) != 0: | |||

| infoParsed['af'] = float(int(ra[1])/(int(ra[1]) + int(ra[0]))) | |||

| else: | |||

| pass | |||

| else: | |||

| infoParsed[formatKeys[i]] = formatValues[i] | |||

| infoParsed['chromo'] = strings[0] | |||

| infoParsed['pos'] = strings[1] | |||

| infoParsed['id'] = strings[2] | |||

| infoParsed['ref'] = strings[3] | |||

| infoParsed['alt'] = strings[4] | |||

| infoParsed['qual'] = strings[5] | |||

| return infoParsed | |||

| def parse_INFO(info): | |||

| strings = info.strip().split(';') | |||

| keys = [] | |||

| values = [] | |||

| for i in strings: | |||

| kv = i.split('=') | |||

| if kv[0] == 'DB': | |||

| keys.append('DB') | |||

| values.append('1') | |||

| elif kv[0] == 'AF': | |||

| pass | |||

| else: | |||

| keys.append(kv[0]) | |||

| values.append(kv[1]) | |||

| infoDict = dict(zip(keys, values)) | |||

| return infoDict | |||

| opts,args = getopt.getopt(sys.argv[1:],"hi:o:") | |||

| for op,value in opts: | |||

| if op == "-i": | |||

| inputFile=value | |||

| elif op == "-o": | |||

| outputFile=value | |||

| elif op == "-h": | |||

| usage() | |||

| sys.exit() | |||

| if len(sys.argv[1:]) < 3: | |||

| usage() | |||

| sys.exit() | |||

| allDict = [] | |||

| for line in fileinput.input(inputFile): | |||

| m = re.match('^\#',line) | |||

| if m is not None: | |||

| pass | |||

| else: | |||

| oneDict = process(line) | |||

| allDict.append(oneDict) | |||

| allTable = pd.DataFrame(allDict) | |||

| allTable.to_csv(outputFile,sep='\t',index=False) | |||

+ 47

- 0

codescripts/filter_indel_over_50_cluster.py

Прегледај датотеку

| @@ -0,0 +1,47 @@ | |||

| import sys,getopt | |||

| from itertools import islice | |||

| over_50_outfile = open("indel_lenth_over_50.txt",'w') | |||

| less_50_outfile = open("benchmark.men.vote.diffbed.lengthlessthan50.txt","w") | |||

| def process(line): | |||

| strings = line.strip().split('\t') | |||

| #d5 | |||

| if ',' in strings[6]: | |||

| d5 = strings[6].split(',') | |||

| d5_len = [len(i) for i in d5] | |||

| d5_alt = max(d5_len) | |||

| else: | |||

| d5_alt = len(strings[6]) | |||

| #d6 | |||

| if ',' in strings[14]: | |||

| d6 = strings[14].split(',') | |||

| d6_len = [len(i) for i in d6] | |||

| d6_alt = max(d6_len) | |||

| else: | |||

| d6_alt = len(strings[14]) | |||

| #f7 | |||

| if ',' in strings[22]: | |||

| f7 = strings[22].split(',') | |||

| f7_len = [len(i) for i in f7] | |||

| f7_alt = max(f7_len) | |||

| else: | |||

| f7_alt = len(strings[22]) | |||

| #m8 | |||

| if ',' in strings[30]: | |||

| m8 = strings[30].split(',') | |||

| m8_len = [len(i) for i in m8] | |||

| m8_alt = max(m8_len) | |||

| else: | |||

| m8_alt = len(strings[30]) | |||

| #ref | |||

| ref_len = len(strings[34]) | |||

| if (d5_alt > 50) or (d6_alt > 50) or (f7_alt > 50) or (m8_alt > 50) or (ref_len > 50): | |||

| over_50_outfile.write(line) | |||

| else: | |||

| less_50_outfile.write(line) | |||

| input_file = open(sys.argv[1]) | |||

| for line in islice(input_file, 1, None): | |||

| process(line) | |||

+ 43

- 0

codescripts/filter_indel_over_50_mendelian.py

Прегледај датотеку

| @@ -0,0 +1,43 @@ | |||

| from itertools import islice | |||

| import fileinput | |||

| import sys, argparse, os | |||

| # input arguments | |||

| parser = argparse.ArgumentParser(description="this script is to exclude indel over 50bp") | |||

| parser.add_argument('-i', '--mergedGVCF', type=str, help='merged gVCF txt with only chr, pos, ref, alt and genotypes', required=True) | |||

| parser.add_argument('-prefix', '--prefix', type=str, help='prefix of output file', required=True) | |||

| args = parser.parse_args() | |||

| input_dat = args.mergedGVCF | |||

| prefix = args.prefix | |||

| # output file | |||

| output_name = prefix + '.indel.lessthan50bp.txt' | |||

| outfile = open(output_name,'w') | |||

| def process(line): | |||

| strings = line.strip().split('\t') | |||

| #d5 | |||

| if ',' in strings[3]: | |||

| alt = strings[3].split(',') | |||

| alt_len = [len(i) for i in alt] | |||

| alt_max = max(alt_len) | |||

| else: | |||

| alt_max = len(strings[3]) | |||

| #ref | |||

| ref_len = len(strings[2]) | |||

| if (alt_max > 50) or (ref_len > 50): | |||

| pass | |||

| else: | |||

| outfile.write(line) | |||

| for line in fileinput.input(input_dat): | |||

| process(line) | |||

+ 416

- 0

codescripts/high_confidence_call_vote.py

Прегледај датотеку

| @@ -0,0 +1,416 @@ | |||

| from __future__ import division | |||

| import sys, argparse, os | |||

| import fileinput | |||

| import re | |||

| import pandas as pd | |||

| from operator import itemgetter | |||

| from collections import Counter | |||

| from itertools import islice | |||

| from numpy import * | |||

| import statistics | |||

| # input arguments | |||

| parser = argparse.ArgumentParser(description="this script is to count voting number") | |||

| parser.add_argument('-vcf', '--multi_sample_vcf', type=str, help='The VCF file you want to count the voting number', required=True) | |||

| parser.add_argument('-dup', '--dup_list', type=str, help='Duplication list', required=True) | |||

| parser.add_argument('-sample', '--sample_name', type=str, help='which sample of quartet', required=True) | |||

| parser.add_argument('-prefix', '--prefix', type=str, help='Prefix of output file name', required=True) | |||

| args = parser.parse_args() | |||

| multi_sample_vcf = args.multi_sample_vcf | |||

| dup_list = args.dup_list | |||

| prefix = args.prefix | |||

| sample_name = args.sample_name | |||

| vcf_header = '''##fileformat=VCFv4.2 | |||

| ##fileDate=20200331 | |||

| ##source=high_confidence_calls_intergration(choppy app) | |||

| ##reference=GRCh38.d1.vd1 | |||

| ##INFO=<ID=location,Number=1,Type=String,Description="Repeat region"> | |||

| ##INFO=<ID=DETECTED,Number=1,Type=Integer,Description="Number of detected votes"> | |||

| ##INFO=<ID=VOTED,Number=1,Type=Integer,Description="Number of consnesus votes"> | |||

| ##INFO=<ID=FAM,Number=1,Type=Integer,Description="Number mendelian consisitent votes"> | |||

| ##FORMAT=<ID=GT,Number=1,Type=String,Description="Genotype"> | |||

| ##FORMAT=<ID=DP,Number=1,Type=Integer,Description="Sum depth of all samples"> | |||

| ##FORMAT=<ID=ALT,Number=1,Type=Integer,Description="Sum alternative depth of all samples"> | |||

| ##FORMAT=<ID=AF,Number=1,Type=Float,Description="Allele frequency, sum alternative depth / sum depth"> | |||

| ##FORMAT=<ID=GQ,Number=1,Type=Float,Description="Average genotype quality"> | |||

| ##FORMAT=<ID=QD,Number=1,Type=Float,Description="Average Variant Confidence/Quality by Depth"> | |||

| ##FORMAT=<ID=MQ,Number=1,Type=Float,Description="Average mapping quality"> | |||

| ##FORMAT=<ID=FS,Number=1,Type=Float,Description="Average Phred-scaled p-value using Fisher's exact test to detect strand bias"> | |||

| ##FORMAT=<ID=QUALI,Number=1,Type=Float,Description="Average variant quality"> | |||

| ##contig=<ID=chr1,length=248956422> | |||

| ##contig=<ID=chr2,length=242193529> | |||

| ##contig=<ID=chr3,length=198295559> | |||

| ##contig=<ID=chr4,length=190214555> | |||

| ##contig=<ID=chr5,length=181538259> | |||

| ##contig=<ID=chr6,length=170805979> | |||

| ##contig=<ID=chr7,length=159345973> | |||

| ##contig=<ID=chr8,length=145138636> | |||

| ##contig=<ID=chr9,length=138394717> | |||

| ##contig=<ID=chr10,length=133797422> | |||

| ##contig=<ID=chr11,length=135086622> | |||

| ##contig=<ID=chr12,length=133275309> | |||

| ##contig=<ID=chr13,length=114364328> | |||

| ##contig=<ID=chr14,length=107043718> | |||

| ##contig=<ID=chr15,length=101991189> | |||

| ##contig=<ID=chr16,length=90338345> | |||

| ##contig=<ID=chr17,length=83257441> | |||

| ##contig=<ID=chr18,length=80373285> | |||

| ##contig=<ID=chr19,length=58617616> | |||

| ##contig=<ID=chr20,length=64444167> | |||

| ##contig=<ID=chr21,length=46709983> | |||

| ##contig=<ID=chr22,length=50818468> | |||

| ##contig=<ID=chrX,length=156040895> | |||

| ''' | |||

| vcf_header_all_sample = '''##fileformat=VCFv4.2 | |||

| ##fileDate=20200331 | |||

| ##reference=GRCh38.d1.vd1 | |||

| ##INFO=<ID=location,Number=1,Type=String,Description="Repeat region"> | |||

| ##INFO=<ID=DUP,Number=1,Type=Flag,Description="Duplicated variant records"> | |||

| ##INFO=<ID=DETECTED,Number=1,Type=Integer,Description="Number of detected votes"> | |||

| ##INFO=<ID=VOTED,Number=1,Type=Integer,Description="Number of consnesus votes"> | |||

| ##INFO=<ID=FAM,Number=1,Type=Integer,Description="Number mendelian consisitent votes"> | |||

| ##INFO=<ID=ALL_ALT,Number=1,Type=Float,Description="Sum of alternative reads of all samples"> | |||

| ##INFO=<ID=ALL_DP,Number=1,Type=Float,Description="Sum of depth of all samples"> | |||

| ##INFO=<ID=ALL_AF,Number=1,Type=Float,Description="Allele frequency of net alternatice reads and net depth"> | |||

| ##INFO=<ID=GQ_MEAN,Number=1,Type=Float,Description="Mean of genotype quality of all samples"> | |||

| ##INFO=<ID=QD_MEAN,Number=1,Type=Float,Description="Average Variant Confidence/Quality by Depth"> | |||

| ##INFO=<ID=MQ_MEAN,Number=1,Type=Float,Description="Mean of mapping quality of all samples"> | |||

| ##INFO=<ID=FS_MEAN,Number=1,Type=Float,Description="Average Phred-scaled p-value using Fisher's exact test to detect strand bias"> | |||

| ##INFO=<ID=QUAL_MEAN,Number=1,Type=Float,Description="Average variant quality"> | |||

| ##INFO=<ID=PCR,Number=1,Type=String,Description="Consensus of PCR votes"> | |||

| ##INFO=<ID=PCR_FREE,Number=1,Type=String,Description="Consensus of PCR-free votes"> | |||

| ##INFO=<ID=CONSENSUS,Number=1,Type=String,Description="Consensus calls"> | |||

| ##INFO=<ID=CONSENSUS_SEQ,Number=1,Type=String,Description="Consensus sequence"> | |||

| ##FORMAT=<ID=GT,Number=1,Type=String,Description="Genotype"> | |||

| ##FORMAT=<ID=DP,Number=1,Type=String,Description="Depth"> | |||

| ##FORMAT=<ID=ALT,Number=1,Type=Integer,Description="Alternative Depth"> | |||

| ##FORMAT=<ID=AF,Number=1,Type=String,Description="Allele frequency"> | |||

| ##FORMAT=<ID=GQ,Number=1,Type=String,Description="Genotype quality"> | |||

| ##FORMAT=<ID=MQ,Number=1,Type=String,Description="Mapping quality"> | |||

| ##FORMAT=<ID=TWINS,Number=1,Type=String,Description="1 is twins shared, 0 is twins discordant "> | |||

| ##FORMAT=<ID=TRIO5,Number=1,Type=String,Description="1 is LCL7, LCL8 and LCL5 mendelian consistent, 0 is mendelian vioaltion"> | |||

| ##FORMAT=<ID=TRIO6,Number=1,Type=String,Description="1 is LCL7, LCL8 and LCL6 mendelian consistent, 0 is mendelian vioaltion"> | |||

| ##contig=<ID=chr1,length=248956422> | |||

| ##contig=<ID=chr2,length=242193529> | |||

| ##contig=<ID=chr3,length=198295559> | |||

| ##contig=<ID=chr4,length=190214555> | |||

| ##contig=<ID=chr5,length=181538259> | |||

| ##contig=<ID=chr6,length=170805979> | |||

| ##contig=<ID=chr7,length=159345973> | |||

| ##contig=<ID=chr8,length=145138636> | |||

| ##contig=<ID=chr9,length=138394717> | |||

| ##contig=<ID=chr10,length=133797422> | |||

| ##contig=<ID=chr11,length=135086622> | |||

| ##contig=<ID=chr12,length=133275309> | |||

| ##contig=<ID=chr13,length=114364328> | |||

| ##contig=<ID=chr14,length=107043718> | |||

| ##contig=<ID=chr15,length=101991189> | |||

| ##contig=<ID=chr16,length=90338345> | |||

| ##contig=<ID=chr17,length=83257441> | |||

| ##contig=<ID=chr18,length=80373285> | |||

| ##contig=<ID=chr19,length=58617616> | |||

| ##contig=<ID=chr20,length=64444167> | |||

| ##contig=<ID=chr21,length=46709983> | |||

| ##contig=<ID=chr22,length=50818468> | |||

| ##contig=<ID=chrX,length=156040895> | |||

| ''' | |||

| # read in duplication list | |||

| dup = pd.read_table(dup_list,header=None) | |||

| var_dup = dup[0].tolist() | |||

| # output file | |||

| benchmark_file_name = prefix + '_voted.vcf' | |||

| benchmark_outfile = open(benchmark_file_name,'w') | |||

| all_sample_file_name = prefix + '_all_sample_information.vcf' | |||

| all_sample_outfile = open(all_sample_file_name,'w') | |||

| # write VCF | |||

| outputcolumn = '#CHROM\tPOS\tID\tREF\tALT\tQUAL\tFILTER\tINFO\tFORMAT\t' + sample_name + '_benchmark_calls\n' | |||

| benchmark_outfile.write(vcf_header) | |||

| benchmark_outfile.write(outputcolumn) | |||

| outputcolumn_all_sample = '#CHROM\tPOS\tID\tREF\tALT\tQUAL\tFILTER\tINFO\tFORMAT\t'+ \ | |||

| 'Quartet_DNA_BGI_SEQ2000_BGI_1_20180518\tQuartet_DNA_BGI_SEQ2000_BGI_2_20180530\tQuartet_DNA_BGI_SEQ2000_BGI_3_20180530\t' + \ | |||

| 'Quartet_DNA_BGI_T7_WGE_1_20191105\tQuartet_DNA_BGI_T7_WGE_2_20191105\tQuartet_DNA_BGI_T7_WGE_3_20191105\t' + \ | |||

| 'Quartet_DNA_ILM_Nova_ARD_1_20181108\tQuartet_DNA_ILM_Nova_ARD_2_20181108\tQuartet_DNA_ILM_Nova_ARD_3_20181108\t' + \ | |||

| 'Quartet_DNA_ILM_Nova_ARD_4_20190111\tQuartet_DNA_ILM_Nova_ARD_5_20190111\tQuartet_DNA_ILM_Nova_ARD_6_20190111\t' + \ | |||

| 'Quartet_DNA_ILM_Nova_BRG_1_20180930\tQuartet_DNA_ILM_Nova_BRG_2_20180930\tQuartet_DNA_ILM_Nova_BRG_3_20180930\t' + \ | |||

| 'Quartet_DNA_ILM_Nova_WUX_1_20190917\tQuartet_DNA_ILM_Nova_WUX_2_20190917\tQuartet_DNA_ILM_Nova_WUX_3_20190917\t' + \ | |||

| 'Quartet_DNA_ILM_XTen_ARD_1_20170403\tQuartet_DNA_ILM_XTen_ARD_2_20170403\tQuartet_DNA_ILM_XTen_ARD_3_20170403\t' + \ | |||

| 'Quartet_DNA_ILM_XTen_NVG_1_20170329\tQuartet_DNA_ILM_XTen_NVG_2_20170329\tQuartet_DNA_ILM_XTen_NVG_3_20170329\t' + \ | |||

| 'Quartet_DNA_ILM_XTen_WUX_1_20170216\tQuartet_DNA_ILM_XTen_WUX_2_20170216\tQuartet_DNA_ILM_XTen_WUX_3_20170216\n' | |||

| all_sample_outfile.write(vcf_header_all_sample) | |||

| all_sample_outfile.write(outputcolumn_all_sample) | |||

| #function | |||

| def replace_nan(strings_list): | |||

| updated_list = [] | |||

| for i in strings_list: | |||

| if i == '.': | |||

| updated_list.append('.:.:.:.:.:.:.:.:.:.:.:.') | |||

| else: | |||

| updated_list.append(i) | |||

| return updated_list | |||

| def remove_dot(strings_list): | |||

| updated_list = [] | |||

| for i in strings_list: | |||

| if i == '.': | |||

| pass | |||

| else: | |||

| updated_list.append(i) | |||

| return updated_list | |||

| def detected_number(strings): | |||

| gt = [x.split(':')[0] for x in strings] | |||

| percentage = 27 - gt.count('.') | |||

| return(str(percentage)) | |||

| def vote_number(strings,consensus_call): | |||

| gt = [x.split(':')[0] for x in strings] | |||

| gt = [x.replace('.','0/0') for x in gt] | |||

| gt = list(map(gt_uniform,[i for i in gt])) | |||

| vote_num = gt.count(consensus_call) | |||

| return(str(vote_num)) | |||

| def family_vote(strings,consensus_call): | |||

| gt = [x.split(':')[0] for x in strings] | |||

| gt = [x.replace('.','0/0') for x in gt] | |||

| gt = list(map(gt_uniform,[i for i in gt])) | |||

| mendelian = [':'.join(x.split(':')[1:4]) for x in strings] | |||

| indices = [i for i, x in enumerate(gt) if x == consensus_call] | |||

| matched_mendelian = itemgetter(*indices)(mendelian) | |||

| mendelian_num = matched_mendelian.count('1:1:1') | |||

| return(str(mendelian_num)) | |||

| def gt_uniform(strings): | |||

| uniformed_gt = '' | |||

| allele1 = strings.split('/')[0] | |||

| allele2 = strings.split('/')[1] | |||

| if int(allele1) > int(allele2): | |||

| uniformed_gt = allele2 + '/' + allele1 | |||

| else: | |||

| uniformed_gt = allele1 + '/' + allele2 | |||

| return uniformed_gt | |||

| def decide_by_rep(strings): | |||

| consensus_rep = '' | |||

| mendelian = [':'.join(x.split(':')[1:4]) for x in strings] | |||

| gt = [x.split(':')[0] for x in strings] | |||

| gt = [x.replace('.','0/0') for x in gt] | |||

| # modified gt turn 2/1 to 1/2 | |||

| gt = list(map(gt_uniform,[i for i in gt])) | |||

| # mendelian consistent? | |||

| mendelian_dict = Counter(mendelian) | |||

| highest_mendelian = mendelian_dict.most_common(1) | |||

| candidate_mendelian = highest_mendelian[0][0] | |||

| freq_mendelian = highest_mendelian[0][1] | |||

| if (candidate_mendelian == '1:1:1') and (freq_mendelian >= 2): | |||

| gt_num_dict = Counter(gt) | |||

| highest_gt = gt_num_dict.most_common(1) | |||

| candidate_gt = highest_gt[0][0] | |||

| freq_gt = highest_gt[0][1] | |||

| if (candidate_gt != '0/0') and (freq_gt >= 2): | |||

| consensus_rep = candidate_gt | |||

| elif (candidate_gt == '0/0') and (freq_gt >= 2): | |||

| consensus_rep = '0/0' | |||

| else: | |||

| consensus_rep = 'inconGT' | |||

| elif (candidate_mendelian == '') and (freq_mendelian >= 2): | |||

| consensus_rep = 'noInfo' | |||

| else: | |||

| consensus_rep = 'inconMen' | |||

| return consensus_rep | |||

| def main(): | |||

| for line in fileinput.input(multi_sample_vcf): | |||

| headline = re.match('^\#',line) | |||

| if headline is not None: | |||

| pass | |||

| else: | |||

| line = line.strip() | |||

| strings = line.split('\t') | |||

| variant_id = '_'.join([strings[0],strings[1]]) | |||

| # check if the variants location is duplicated | |||

| if variant_id in var_dup: | |||

| strings[7] = strings[7] + ';DUP' | |||

| outLine = '\t'.join(strings) + '\n' | |||

| all_sample_outfile.write(outLine) | |||

| else: | |||

| # pre-define | |||

| pcr_consensus = '.' | |||

| pcr_free_consensus = '.' | |||

| consensus_call = '.' | |||

| consensus_alt_seq = '.' | |||

| # pcr | |||

| strings[9:] = replace_nan(strings[9:]) | |||

| pcr = itemgetter(*[9,10,11,27,28,29,30,31,32,33,34,35])(strings) | |||

| SEQ2000 = decide_by_rep(pcr[0:3]) | |||

| XTen_ARD = decide_by_rep(pcr[3:6]) | |||

| XTen_NVG = decide_by_rep(pcr[6:9]) | |||

| XTen_WUX = decide_by_rep(pcr[9:12]) | |||

| sequence_site = [SEQ2000,XTen_ARD,XTen_NVG,XTen_WUX] | |||

| sequence_dict = Counter(sequence_site) | |||

| highest_sequence = sequence_dict.most_common(1) | |||

| candidate_sequence = highest_sequence[0][0] | |||

| freq_sequence = highest_sequence[0][1] | |||

| if freq_sequence > 2: | |||

| pcr_consensus = candidate_sequence | |||

| else: | |||

| pcr_consensus = 'inconSequenceSite' | |||

| # pcr-free | |||

| pcr_free = itemgetter(*[12,13,14,15,16,17,18,19,20,21,22,23,24,25,26])(strings) | |||

| T7_WGE = decide_by_rep(pcr_free[0:3]) | |||

| Nova_ARD_1 = decide_by_rep(pcr_free[3:6]) | |||

| Nova_ARD_2 = decide_by_rep(pcr_free[6:9]) | |||

| Nova_BRG = decide_by_rep(pcr_free[9:12]) | |||

| Nova_WUX = decide_by_rep(pcr_free[12:15]) | |||

| sequence_site = [T7_WGE,Nova_ARD_1,Nova_ARD_2,Nova_BRG,Nova_WUX] | |||

| highest_sequence = sequence_dict.most_common(1) | |||

| candidate_sequence = highest_sequence[0][0] | |||

| freq_sequence = highest_sequence[0][1] | |||

| if freq_sequence > 3: | |||

| pcr_free_consensus = candidate_sequence | |||

| else: | |||

| pcr_free_consensus = 'inconSequenceSite' | |||

| # pcr and pcr-free | |||

| tag = ['inconGT','noInfo','inconMen','inconSequenceSite'] | |||

| if (pcr_consensus == pcr_free_consensus) and (pcr_consensus not in tag) and (pcr_consensus != '0/0'): | |||

| consensus_call = pcr_consensus | |||

| VOTED = vote_number(strings[9:],consensus_call) | |||

| strings[7] = strings[7] + ';VOTED=' + VOTED | |||

| DETECTED = detected_number(strings[9:]) | |||

| strings[7] = strings[7] + ';DETECTED=' + DETECTED | |||

| FAM = family_vote(strings[9:],consensus_call) | |||

| strings[7] = strings[7] + ';FAM=' + FAM | |||

| # Delete multiple alternative genotype to necessary expression | |||

| alt = strings[4] | |||

| alt_gt = alt.split(',') | |||

| if len(alt_gt) > 1: | |||

| allele1 = consensus_call.split('/')[0] | |||

| allele2 = consensus_call.split('/')[1] | |||

| if allele1 == '0': | |||

| allele2_seq = alt_gt[int(allele2) - 1] | |||

| consensus_alt_seq = allele2_seq | |||

| consensus_call = '0/1' | |||

| else: | |||

| allele1_seq = alt_gt[int(allele1) - 1] | |||

| allele2_seq = alt_gt[int(allele2) - 1] | |||

| if int(allele1) > int(allele2): | |||

| consensus_alt_seq = allele2_seq + ',' + allele1_seq | |||

| consensus_call = '1/2' | |||

| elif int(allele1) < int(allele2): | |||

| consensus_alt_seq = allele1_seq + ',' + allele2_seq | |||

| consensus_call = '1/2' | |||

| else: | |||

| consensus_alt_seq = allele1_seq | |||

| consensus_call = '1/1' | |||

| else: | |||

| consensus_alt_seq = alt | |||

| # GT:DP:ALT:AF:GQ:QD:MQ:FS:QUAL | |||

| # GT:TWINS:TRIO5:TRIO6:DP:ALT:AF:GQ:QD:MQ:FS:QUAL:rawGT | |||

| # DP | |||

| DP = [x.split(':')[4] for x in strings[9:]] | |||

| DP = remove_dot(DP) | |||

| DP = [int(x) for x in DP] | |||

| ALL_DP = sum(DP) | |||

| # AF | |||

| ALT = [x.split(':')[5] for x in strings[9:]] | |||

| ALT = remove_dot(ALT) | |||

| ALT = [int(x) for x in ALT] | |||

| ALL_ALT = sum(ALT) | |||

| ALL_AF = round(ALL_ALT/ALL_DP,2) | |||

| # GQ | |||

| GQ = [x.split(':')[7] for x in strings[9:]] | |||

| GQ = remove_dot(GQ) | |||

| GQ = [int(x) for x in GQ] | |||

| GQ_MEAN = round(mean(GQ),2) | |||

| # QD | |||

| QD = [x.split(':')[8] for x in strings[9:]] | |||

| QD = remove_dot(QD) | |||

| QD = [float(x) for x in QD] | |||

| QD_MEAN = round(mean(QD),2) | |||

| # MQ | |||

| MQ = [x.split(':')[9] for x in strings[9:]] | |||

| MQ = remove_dot(MQ) | |||

| MQ = [float(x) for x in MQ] | |||

| MQ_MEAN = round(mean(MQ),2) | |||

| # FS | |||

| FS = [x.split(':')[10] for x in strings[9:]] | |||

| FS = remove_dot(FS) | |||

| FS = [float(x) for x in FS] | |||

| FS_MEAN = round(mean(FS),2) | |||

| # QUAL | |||

| QUAL = [x.split(':')[11] for x in strings[9:]] | |||

| QUAL = remove_dot(QUAL) | |||

| QUAL = [float(x) for x in QUAL] | |||

| QUAL_MEAN = round(mean(QUAL),2) | |||

| # benchmark output | |||

| output_format = consensus_call + ':' + str(ALL_DP) + ':' + str(ALL_ALT) + ':' + str(ALL_AF) + ':' + str(GQ_MEAN) + ':' + str(QD_MEAN) + ':' + str(MQ_MEAN) + ':' + str(FS_MEAN) + ':' + str(QUAL_MEAN) | |||

| outLine = strings[0] + '\t' + strings[1] + '\t' + strings[2] + '\t' + strings[3] + '\t' + consensus_alt_seq + '\t' + '.' + '\t' + '.' + '\t' + strings[7] + '\t' + 'GT:DP:ALT:AF:GQ:QD:MQ:FS:QUAL' + '\t' + output_format + '\n' | |||

| benchmark_outfile.write(outLine) | |||

| # all sample output | |||

| strings[7] = strings[7] + ';ALL_ALT=' + str(ALL_ALT) + ';ALL_DP=' + str(ALL_DP) + ';ALL_AF=' + str(ALL_AF) \ | |||

| + ';GQ_MEAN=' + str(GQ_MEAN) + ';QD_MEAN=' + str(QD_MEAN) + ';MQ_MEAN=' + str(MQ_MEAN) + ';FS_MEAN=' + str(FS_MEAN) \ | |||

| + ';QUAL_MEAN=' + str(QUAL_MEAN) + ';PCR=' + consensus_call + ';PCR_FREE=' + consensus_call + ';CONSENSUS=' + consensus_call \ | |||

| + ';CONSENSUS_SEQ=' + consensus_alt_seq | |||

| all_sample_outLine = '\t'.join(strings) + '\n' | |||

| all_sample_outfile.write(all_sample_outLine) | |||

| elif (pcr_consensus in tag) and (pcr_free_consensus in tag): | |||

| consensus_call = 'filtered' | |||

| DETECTED = detected_number(strings[9:]) | |||

| strings[7] = strings[7] + ';DETECTED=' + DETECTED | |||

| strings[7] = strings[7] + ';CONSENSUS=' + consensus_call | |||

| all_sample_outLine = '\t'.join(strings) + '\n' | |||

| all_sample_outfile.write(all_sample_outLine) | |||

| elif ((pcr_consensus == '0/0') or (pcr_consensus in tag)) and ((pcr_free_consensus not in tag) and (pcr_free_consensus != '0/0')): | |||

| consensus_call = 'pcr-free-speicifc' | |||

| DETECTED = detected_number(strings[9:]) | |||

| strings[7] = strings[7] + ';DETECTED=' + DETECTED | |||

| strings[7] = strings[7] + ';CONSENSUS=' + consensus_call | |||

| all_sample_outLine = '\t'.join(strings) + '\n' | |||

| all_sample_outfile.write(all_sample_outLine) | |||

| elif ((pcr_consensus != '0/0') or (pcr_consensus not in tag)) and ((pcr_free_consensus in tag) and (pcr_free_consensus == '0/0')): | |||

| consensus_call = 'pcr-speicifc' | |||

| DETECTED = detected_number(strings[9:]) | |||

| strings[7] = strings[7] + ';DETECTED=' + DETECTED | |||

| strings[7] = strings[7] + ';CONSENSUS=' + consensus_call + ';PCR=' + pcr_consensus + ';PCR_FREE=' + pcr_free_consensus | |||

| all_sample_outLine = '\t'.join(strings) + '\n' | |||

| all_sample_outfile.write(all_sample_outLine) | |||

| elif (pcr_consensus == '0/0') and (pcr_free_consensus == '0/0'): | |||

| consensus_call = 'confirm for parents' | |||

| DETECTED = detected_number(strings[9:]) | |||

| strings[7] = strings[7] + ';DETECTED=' + DETECTED | |||

| strings[7] = strings[7] + ';CONSENSUS=' + consensus_call | |||

| all_sample_outLine = '\t'.join(strings) + '\n' | |||

| all_sample_outfile.write(all_sample_outLine) | |||

| else: | |||

| consensus_call = 'filtered' | |||

| DETECTED = detected_number(strings[9:]) | |||

| strings[7] = strings[7] + ';DETECTED=' + DETECTED | |||

| strings[7] = strings[7] + ';CONSENSUS=' + consensus_call | |||

| all_sample_outLine = '\t'.join(strings) + '\n' | |||

| all_sample_outfile.write(all_sample_outLine) | |||

| if __name__ == '__main__': | |||

| main() | |||

+ 42

- 0

codescripts/high_voted_mendelian_bed.py

Прегледај датотеку

| @@ -0,0 +1,42 @@ | |||

| import pandas as pd | |||

| import sys, argparse, os | |||

| mut = pd.read_table(sys.argv[1]) | |||

| outFile = open(sys.argv[2],'w') | |||

| for row in mut.itertuples(): | |||

| #d5 | |||

| if ',' in row.V4: | |||

| alt = row.V4.split(',') | |||

| alt_len = [len(i) for i in alt] | |||

| alt_max = max(alt_len) | |||

| else: | |||

| alt_max = len(row.V4) | |||

| #d6 | |||

| alt = alt_max | |||

| ref = row.V3 | |||

| pos = int(row.V2) | |||

| if len(ref) == 1 and alt == 1: | |||

| StartPos = int(pos) -1 | |||

| EndPos = int(pos) | |||

| cate = 'SNV' | |||

| elif len(ref) > alt: | |||

| StartPos = int(pos) - 1 | |||

| EndPos = int(pos) + (len(ref) - 1) | |||

| cate = 'INDEL' | |||

| elif alt > len(ref): | |||

| StartPos = int(pos) - 1 | |||

| EndPos = int(pos) + (alt - 1) | |||

| cate = 'INDEL' | |||

| elif len(ref) == alt: | |||

| StartPos = int(pos) - 1 | |||

| EndPos = int(pos) + (alt - 1) | |||

| cate = 'INDEL' | |||

| outline = row.V1 + '\t' + str(StartPos) + '\t' + str(EndPos) + '\t' + str(row.V2) + '\t' + cate + '\n' | |||

| outFile.write(outline) | |||

+ 50

- 0

codescripts/how_many_samples.py

Прегледај датотеку

| @@ -0,0 +1,50 @@ | |||

| import pandas as pd | |||

| import sys, argparse, os | |||

| from operator import itemgetter | |||

| parser = argparse.ArgumentParser(description="This script is to get how many samples") | |||

| parser.add_argument('-sample', '--sample', type=str, help='quartet_sample', required=True) | |||

| parser.add_argument('-rep', '--rep', type=str, help='quartet_rep', required=True) | |||

| args = parser.parse_args() | |||

| # Rename input: | |||

| sample = args.sample | |||

| rep = args.rep | |||

| quartet_sample = pd.read_table(sample,header=None) | |||

| quartet_sample = list(quartet_sample[0]) | |||

| quartet_rep = pd.read_table(rep.header=None) | |||

| quartet_rep = quartet_rep[0] | |||

| #tags | |||

| sister_tag = 'false' | |||

| quartet_tag = 'false' | |||

| quartet_rep_unique = list(set(quartet_rep)) | |||

| single_rep = [i for i in range(len(quartet_rep)) if quartet_rep[i] == quartet_rep_unique[0]] | |||

| single_batch_sample = itemgetter(*single_rep)(quartet_sample) | |||

| num = len(single_batch_sample) | |||

| if num == 1: | |||

| sister_tag = 'false' | |||

| quartet_tag = 'false' | |||

| elif num == 2: | |||

| if set(single_batch_sample) == set(['LCL5','LCL6']): | |||

| sister_tag = 'true' | |||

| quartet_tag = 'false' | |||

| elif num == 3: | |||

| if ('LCL5' in single_batch_sample) and ('LCL6' in single_batch_sample): | |||

| sister_tag = 'true' | |||

| quartet_tag = 'false' | |||

| elif num == 4: | |||

| if set(single_batch_sample) == set(['LCL5','LCL6','LCL7','LCL8']): | |||

| sister_tag = 'false' | |||

| quartet_tag = 'true' | |||

| sister_outfile = open('sister_tag','w') | |||

| quartet_outfile = open('quartet_tag','w') | |||

| sister_outfile.write(sister_tag) | |||

| quartet_outfile.write(quartet_tag) | |||

+ 42

- 0

codescripts/lcl5_all_called_variants.py

Прегледај датотеку

| @@ -0,0 +1,42 @@ | |||

| from __future__ import division | |||

| import sys, argparse, os | |||

| import pandas as pd | |||

| from collections import Counter | |||

| # input arguments | |||

| parser = argparse.ArgumentParser(description="this script is to merge mendelian and vcfinfo, and extract high_confidence_calls") | |||

| parser.add_argument('-vcf', '--vcf', type=str, help='merged multiple sample vcf', required=True) | |||

| args = parser.parse_args() | |||

| vcf = args.vcf | |||

| lcl5_outfile = open('LCL5_all_variants.txt','w') | |||

| filtered_outfile = open('LCL5_filtered_variants.txt','w') | |||

| vcf_dat = pd.read_table(vcf) | |||

| for row in vcf_dat.itertuples(): | |||

| lcl5_list = [row.Quartet_DNA_BGI_SEQ2000_BGI_LCL5_1_20180518,row.Quartet_DNA_BGI_SEQ2000_BGI_LCL5_2_20180530,row.Quartet_DNA_BGI_SEQ2000_BGI_LCL5_3_20180530, \ | |||

| row.Quartet_DNA_BGI_T7_WGE_LCL5_1_20191105,row.Quartet_DNA_BGI_T7_WGE_LCL5_2_20191105,row.Quartet_DNA_BGI_T7_WGE_LCL5_3_20191105, \ | |||

| row.Quartet_DNA_ILM_Nova_ARD_LCL5_1_20181108,row.Quartet_DNA_ILM_Nova_ARD_LCL5_2_20181108,row.Quartet_DNA_ILM_Nova_ARD_LCL5_3_20181108, \ | |||

| row.Quartet_DNA_ILM_Nova_ARD_LCL5_4_20190111,row.Quartet_DNA_ILM_Nova_ARD_LCL5_5_20190111,row.Quartet_DNA_ILM_Nova_ARD_LCL5_6_20190111, \ | |||

| row.Quartet_DNA_ILM_Nova_BRG_LCL5_1_20180930,row.Quartet_DNA_ILM_Nova_BRG_LCL5_2_20180930,row.Quartet_DNA_ILM_Nova_BRG_LCL5_3_20180930, \ | |||

| row.Quartet_DNA_ILM_Nova_WUX_LCL5_1_20190917,row.Quartet_DNA_ILM_Nova_WUX_LCL5_2_20190917,row.Quartet_DNA_ILM_Nova_WUX_LCL5_3_20190917, \ | |||

| row.Quartet_DNA_ILM_XTen_ARD_LCL5_1_20170403,row.Quartet_DNA_ILM_XTen_ARD_LCL5_2_20170403,row.Quartet_DNA_ILM_XTen_ARD_LCL5_3_20170403, \ | |||

| row.Quartet_DNA_ILM_XTen_NVG_LCL5_1_20170329,row.Quartet_DNA_ILM_XTen_NVG_LCL5_2_20170329,row.Quartet_DNA_ILM_XTen_NVG_LCL5_3_20170329, \ | |||

| row.Quartet_DNA_ILM_XTen_WUX_LCL5_1_20170216,row.Quartet_DNA_ILM_XTen_WUX_LCL5_2_20170216,row.Quartet_DNA_ILM_XTen_WUX_LCL5_3_20170216] | |||

| lcl5_vcf_gt = [x.split(':')[0] for x in lcl5_list] | |||

| lcl5_gt=[item.replace('./.', '0/0') for item in lcl5_vcf_gt] | |||

| gt_dict = Counter(lcl5_gt) | |||

| highest_gt = gt_dict.most_common(1) | |||

| candidate_gt = highest_gt[0][0] | |||

| freq_gt = highest_gt[0][1] | |||

| output = row._1 + '\t' + str(row.POS) + '\t' + '\t'.join(lcl5_gt) + '\n' | |||

| if (candidate_gt == '0/0') and (freq_gt == 27): | |||

| filtered_outfile.write(output) | |||

| else: | |||

| lcl5_outfile.write(output) | |||

+ 6

- 0

codescripts/linux_command.sh

Прегледај датотеку

| @@ -0,0 +1,6 @@ | |||

| cat benchmark.men.vote.diffbed.filtered | awk '{print $1"\t"$2"\t"".""\t"$35"\t"$7"\t.\t.\t.\tGT\t"$6}' | grep -v '2_y' > LCL5.body | |||

| cat benchmark.men.vote.diffbed.filtered | awk '{print $1"\t"$2"\t"".""\t"$35"\t"$15"\t.\t.\t.\tGT\t"$14}' | grep -v '2_y' > LCL6.body | |||

| cat benchmark.men.vote.diffbed.filtered | awk '{print $1"\t"$2"\t"".""\t"$35"\t"$23"\t.\t.\t.\tGT\t"$22}' | grep -v '2_y' > LCL7.body | |||

| cat benchmark.men.vote.diffbed.filtered | awk '{print $1"\t"$2"\t"".""\t"$35"\t"$31"\t.\t.\t.\tGT\t"$30}' | grep -v '2_y' > LCL8.body | |||